Project Seminar (IGR205) IGR / IGD Master Track

In this course, students form teams of three, and each team develops a program or a set of modules, possibly related to one or more research articles. The project topics are proposed by the entire teaching staff of the IGR track. Each team works exclusively on one topic.

Important: Keep in mind that not all topics have the same difficulty, so each team should communicate actively with its project supervisor(s). The assigned tasks may be adjusted depending on your capacity. Don't worry, your supervisor(s) will be happy to help you. Once you have been assigned a topic, please contact your supervisor(s) as soon as possible to get clear information about your tasks and supervision. Additionally, you are working in a team, and it can happen (sometimes quite often) that not all team members contribute equally. Please be aware that your evaluation will be based first on your teamwork; however, you have the right to report unfairness to your supervisor and the course organizer if collaboration with someone becomes unbearable. Please communicate actively within your team and make an effort to build good teamwork.

Schedule

Time: 13:30-16:45

- Preparation

- 01 Apr 2021: Topics are available

- 15 Apr 2021: Deadline for topic registration

- 16 Apr 2021: Announcement of topic assignment

- Semester Period

- 19 Apr 2021: Kickoff lecture

- 31 May 2021: Midterm presentation

- 24 Jun 2021: Final presentation

- 24 Jun 2021, 23:59: Deadline for submission of final materials

Evaluation

At the end of the course, after the final presentation, each team has to send both the supervisor and the organizers (Kiwon and Jan) one zip file that contains (1) a presentation PDF file and (2) a report PDF file.

The evaluation will take into account the following:

- Implementation (50%): Program or modules for the given topic

- Presentation (30%): Final 20-minute talk (15 minutes for content and 5 minutes for Q&A)

- Report (20%): Maximum 4 pages in double-column format, excluding references; you can use this LaTeX template or the original ACM TOG template.

- Guideline: You can start by writing a summary of the topic you worked on. Additionally, it is strongly recommended that you focus on your own study/research rather than stopping at a summary of the corresponding research paper. We, including you, are not interested in repeating text already present in the original work; it is more meaningful to present your own achievements and analysis of the work, such as pros and cons, limitations, your own ideas for addressing the issues, potential future work, etc.

We value academic integrity; thus, you must understand the meaning and consequences of plagiarism. You can refer to existing code, texts, and any other materials that would be useful for your project. However, you must be careful to reuse them properly.

Topics

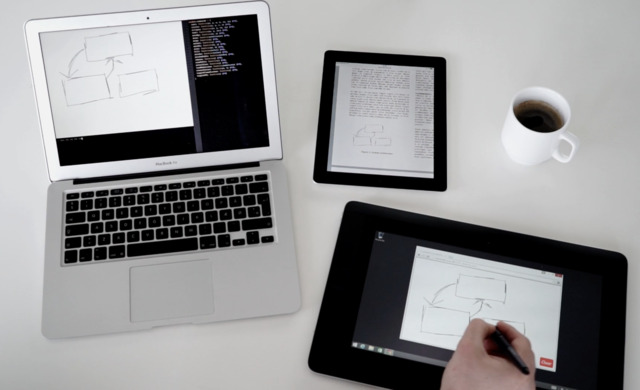

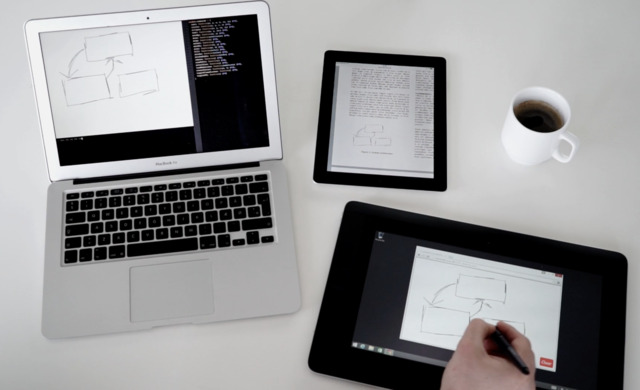

The goal of this project is to create a new presentation tool based on Webstrates. This tool should explore ways to satisfy the different needs of these different user populations in the context of a single presentation document. It should go beyond presenter notes (intended to be read by the presenter) to include audience notes (intended to be read by the audience) and text-centric readings. Finally, it should take advantage of the dynamic and interactive capabilities of the web, allowing different web applications to be integrated directly into the presentation support. For example, in a programming class, slides should support interactively editing code examples or letting students dynamically add content to the presentation in a structured way. The minimal solution will provide an implementation that satisfies the above goals, but this is an open-ended project: there is plenty of room for creativity in orienting the design and exploring different ways to satisfy these goals. Each group will meet weekly with the project supervisor to collaboratively orient the direction of the project.

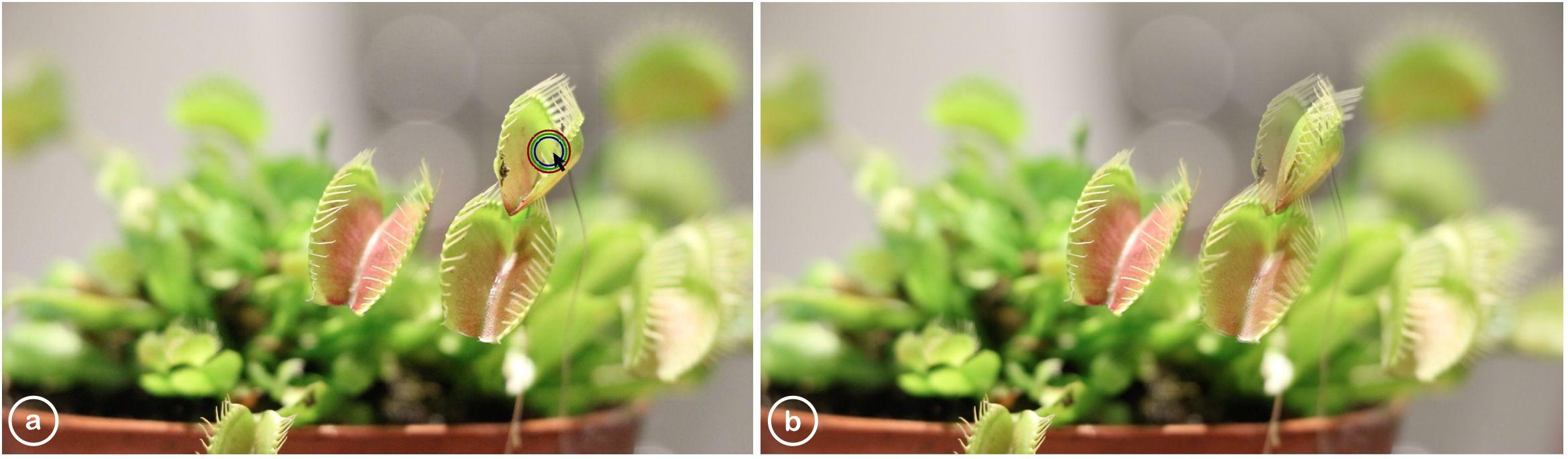

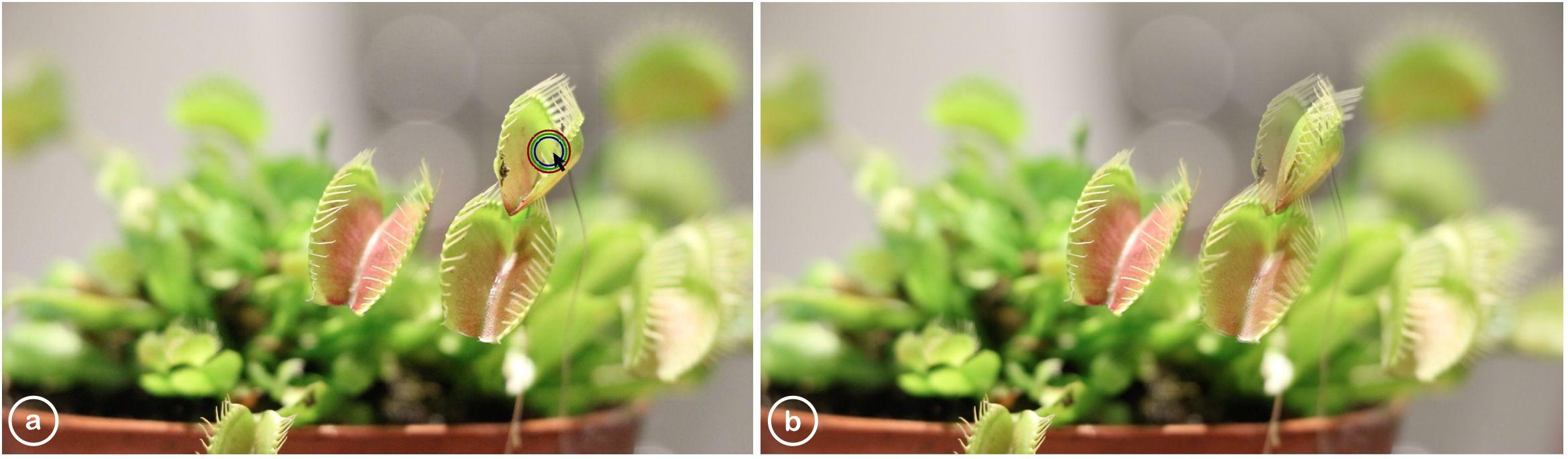

The goal of this project is to interface the capabilities of a living plant with a digital function to establish bidirectional input-output. We will specifically look at the leaf movements of a Mimosa Pudica or a Venus Flytrap plant and connect them with custom software to prepare a bioactuation interface. Three students can work on this project to prepare an initial biohybrid circuit. Students are then encouraged to think outside the box about research applications of such an interface. What if one plant movement triggers another plant movement at a different location? Which actions/notifications on phones or routine activities at home are suitable for plant bioactuation? The students will then implement an application with specific scenarios that leverage and showcase this novel type of interaction. Each group is expected to meet once a week with their supervisor and discuss their ideas and the direction of the project. The students will be provided with the relevant living plants and initial circuitry for plant actuation.

Attention: During the first half of the project, Prof. Sareen will supervise the group remotely from New York; later in the year (depending on the COVID situation), physical meetings will be possible. Jan Gugenheimer will support the students on issues that need to be addressed in person.

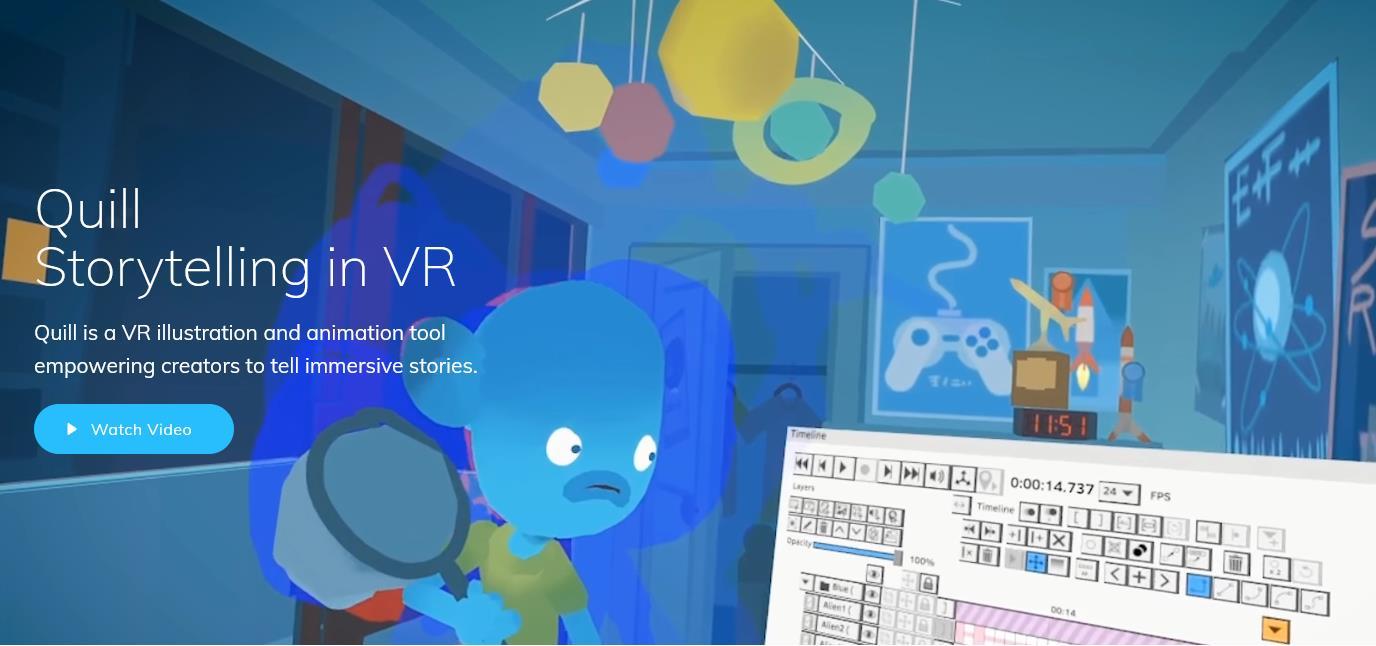

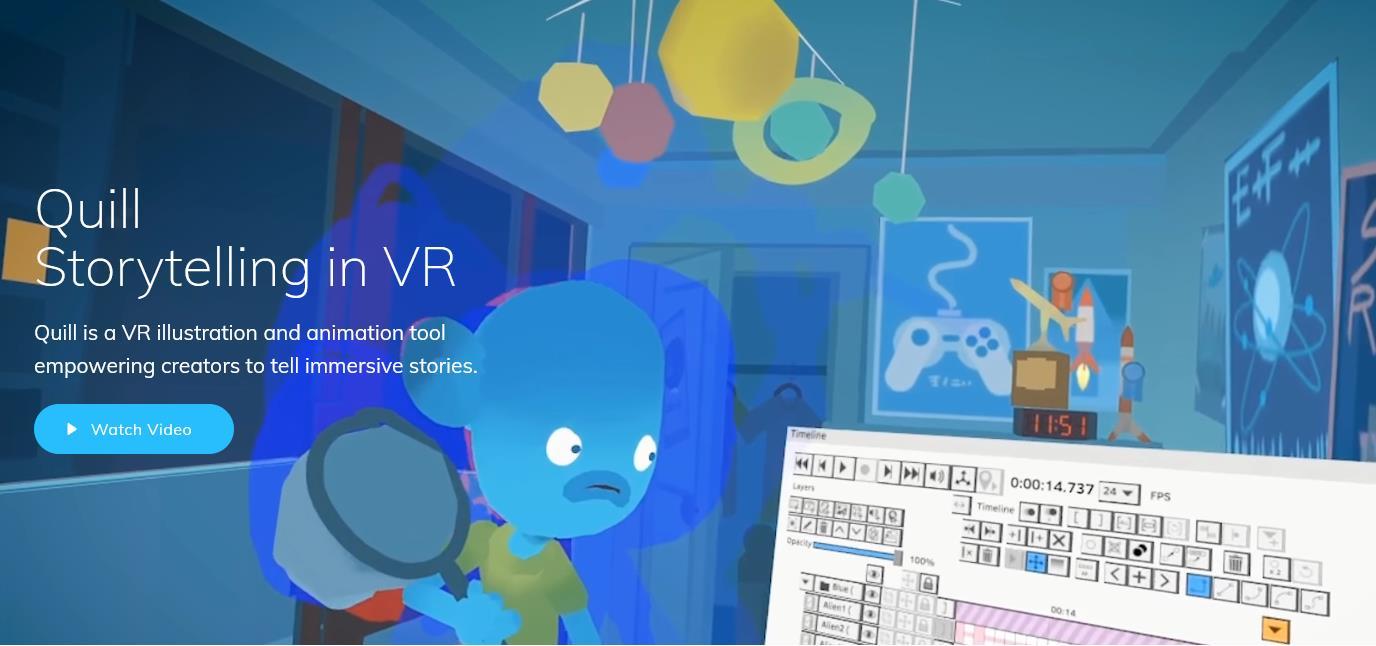

The goal of this project is to design and implement interactions and interfaces for creating and sharing content on a VR platform, which may be one of the future forms of social media. Students are encouraged to think outside the box and set aside the traditional interfaces of social media (e.g. what if we could share a clip of a VR experience, or what if we could record a series of motions in a VR game?). In a first step, each group will receive one Oculus Quest and will design and develop their novel form of social media in VR. In a second step, the students will implement their idea using Unity3D and the Oculus SDK. The last step consists of implementing an application (e.g. a simple game) that leverages and showcases this novel type of interaction. Each group is expected to meet once a week with their supervisor and discuss their ideas and the direction of the project. Each student will get an Oculus Quest to be able to develop individually. Due to the complex implementation requirements, students should have some prior knowledge of C# and ideally of Unity development.

The goal of this project is to design and implement a virtual environment in which the user plays a game through actions that correspond to a chore in the real world. Students will have to learn the skills necessary to implement a virtual environment aligned with the physical one, and follow a creative process to design and implement a simple game application in VR. In a first step, each student will receive one Oculus Quest and will have to follow Unity 3D tutorials to learn how to program and deploy a first VR application. In a second step, the students will together implement an application that is able to align the virtual and physical worlds and spawn simple geometry (e.g., cubes and circles) that can be used to approximate the user’s individual environment. In a last step, each student will implement a short individual game that leverages the aligned world to mask a physical chore (e.g., cleaning the room, hoovering, wiping surfaces, watering the plants). Each group is expected to meet once a week with their supervisor and discuss their ideas and the direction of the project. Each student will get an Oculus Quest to be able to develop individually.

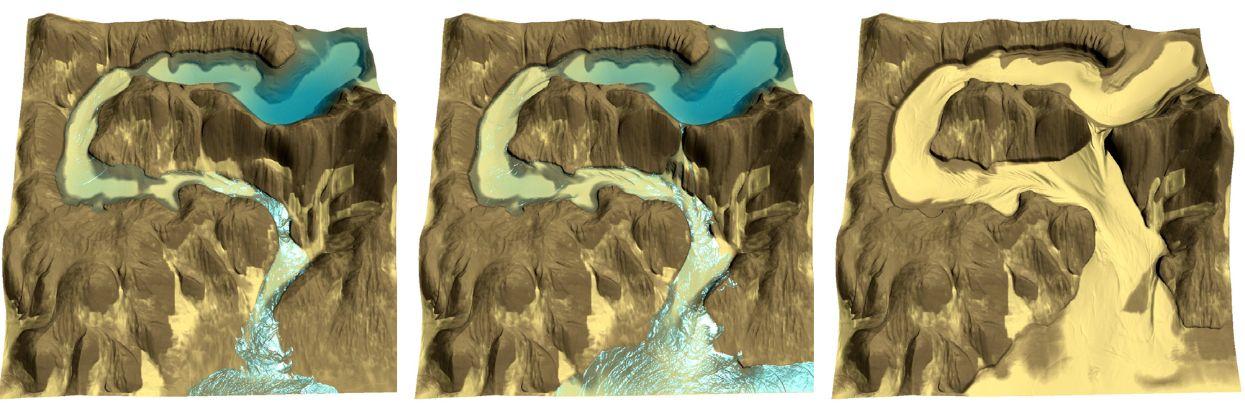

Procedural landscape generation often relies on a layered representation, where each point of a grid is mapped to a stack of different materials (dirt, water, sand, snow, etc.). The goal of this project is to implement this representation in order to first reproduce classical erosion mechanisms [Št’ava08] and then imagine your own phenomena, drawing inspiration from subsequent works such as avalanches [Cordonnier18] or desertscape [Paris19] simulations. The implementation must run at an interactive rate, and may include user input to add/remove matter to some layers (e.g. triggering rain around the mouse).
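As a starting point, the layered representation described above could be sketched as follows. This is only an illustration under stated assumptions: the class `LayeredTerrain`, the layer names, and the parameters `talus` and `rate` are hypothetical, and the update rule is a simple talus (slope) relaxation on the sand layer, a much simpler stand-in for the erosion models cited above.

```python
# Hypothetical layer order, from bottom to top.
LAYERS = ("bedrock", "sand", "water")


class LayeredTerrain:
    """Grid in which every cell stores one thickness per material layer."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.layers = {
            name: [[0.0] * width for _ in range(height)] for name in LAYERS
        }

    def surface(self, x, y):
        """Total height of the material stack at cell (x, y)."""
        return sum(self.layers[name][y][x] for name in LAYERS)

    def add(self, name, x, y, amount):
        """Add matter to a layer (or remove it if negative), clamped at zero."""
        row = self.layers[name][y]
        row[x] = max(0.0, row[x] + amount)

    def relax_sand(self, talus=1.0, rate=0.5):
        """One step: sand slides to lower 4-neighbours when the drop exceeds talus."""
        sand = self.layers["sand"]
        delta = [[0.0] * self.width for _ in range(self.height)]
        for y in range(self.height):
            for x in range(self.width):
                here = self.surface(x, y)
                available = sand[y][x]
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nx, ny = x + dx, y + dy
                    if not (0 <= nx < self.width and 0 <= ny < self.height):
                        continue
                    drop = here - self.surface(nx, ny)
                    if drop > talus and available > 0.0:
                        # Moving half of the excess would bring the drop down
                        # to the talus value; rate scales that amount.
                        moved = min(available, rate * (drop - talus) / 2.0)
                        available -= moved
                        delta[y][x] -= moved
                        delta[ny][nx] += moved
        # Apply all transfers at once so the step does not depend on scan order.
        for y in range(self.height):
            for x in range(self.width):
                sand[y][x] += delta[y][x]


# Example: drop a pile of sand in the middle cell (e.g. from a mouse click).
terrain = LayeredTerrain(3, 1)
terrain.add("sand", 1, 0, 4.0)
terrain.relax_sand()
```

Keeping one array per layer makes it straightforward to add further materials or to move the update to the GPU later, which the interactive-rate requirement will probably call for.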

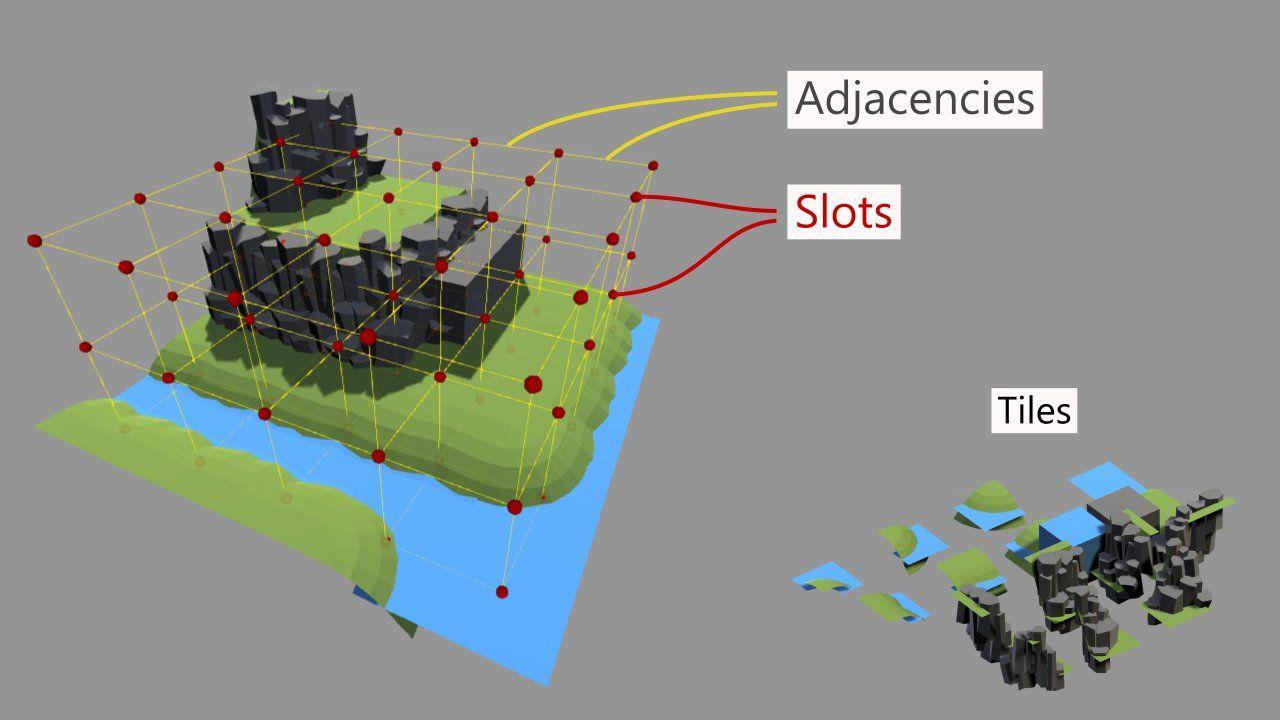

Wave Function Collapse (WFC) is a constraint-solving algorithm [Karth17] that was first designed for texture generation [Gumin16] but was quickly adopted for enhancing 3D tile-based content generation [Stalberg18] (right-hand image above), in particular to mitigate the repetition effects that tile-based approaches usually suffer from. Given a collection of tiles and rules stating which tiles are allowed to neighbor each other, WFC assigns a tile to each slot. The goal of this project is to implement WFC, possibly first in 2D (like [Stalberg17]) and then in 3D, and finally to generalize it to irregular grids. You should also find tile sets for which it fails to converge in a reasonable time.
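The collapse-and-propagate loop can be sketched in 2D as follows. This is a minimal illustration under simplifying assumptions that do not come from the cited works: all tiles are equally likely, "entropy" is approximated by the number of remaining options, and a contradiction triggers a restart instead of backtracking. The names `wfc`, `propagate`, `compat`, and the sea/coast/land tile set are hypothetical.

```python
import random

# Offsets of the four neighbours on a regular 2D grid.
DIRS = {"E": (1, 0), "W": (-1, 0), "S": (0, 1), "N": (0, -1)}


def propagate(grid, width, height, allowed, start):
    """Prune neighbour options after a change; return False on contradiction."""
    stack = [start]
    while stack:
        x, y = stack.pop()
        for d, (dx, dy) in DIRS.items():
            nx, ny = x + dx, y + dy
            if not (0 <= nx < width and 0 <= ny < height):
                continue
            # Tiles the neighbour may still take, given the options left here.
            supported = set()
            for tile in grid[y][x]:
                supported |= allowed[(tile, d)]
            reduced = grid[ny][nx] & supported
            if not reduced:
                return False
            if reduced != grid[ny][nx]:
                grid[ny][nx] = reduced
                stack.append((nx, ny))
    return True


def wfc(width, height, tiles, allowed, rng, max_restarts=100):
    """Return a grid of tiles that satisfies the rules, or None if all tries fail."""
    for _ in range(max_restarts):
        grid = [[set(tiles) for _ in range(width)] for _ in range(height)]
        consistent = True
        while consistent:
            open_cells = [
                (len(grid[y][x]), x, y)
                for y in range(height)
                for x in range(width)
                if len(grid[y][x]) > 1
            ]
            if not open_cells:
                return [[next(iter(cell)) for cell in row] for row in grid]
            # Collapse one of the cells with the fewest remaining options.
            fewest = min(count for count, _, _ in open_cells)
            _, x, y = rng.choice([c for c in open_cells if c[0] == fewest])
            grid[y][x] = {rng.choice(sorted(grid[y][x]))}
            consistent = propagate(grid, width, height, allowed, (x, y))
    return None


# Hypothetical tile set: sea and land may only touch through coast.
compat = {
    "sea": {"sea", "coast"},
    "coast": {"sea", "coast", "land"},
    "land": {"coast", "land"},
}
# The same (symmetric) rule is used in every direction.
allowed = {(tile, d): compat[tile] for tile in compat for d in DIRS}

result = wfc(6, 4, list(compat), allowed, random.Random(0))
```

This tile set should never produce a contradiction, because `coast` is compatible with every tile. Tile sets without such a universal connector are a natural place to start when looking for cases that fail to converge.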

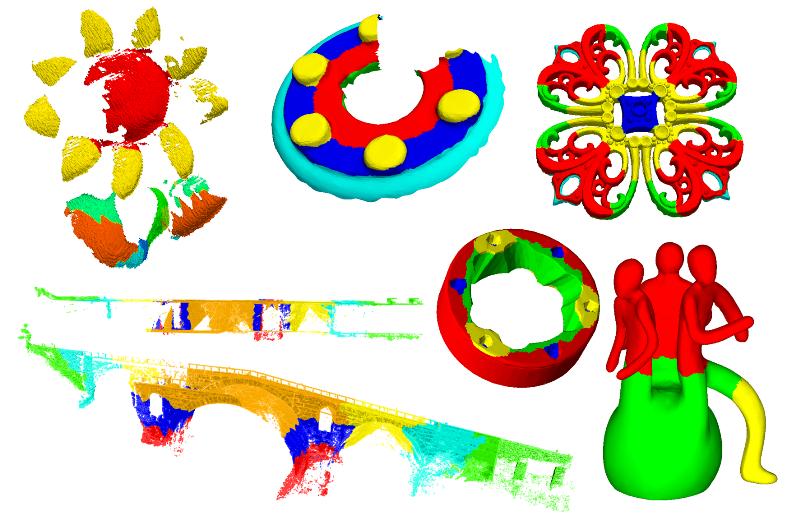

The project consists of implementing the technique described in the reference article, which robustly detects partial symmetries in 3D surfaces. The technique is based on a kind of generalized Hough transform: pairs of points are used as candidates if there exists a transformation that maps one (point + normal) onto the other (point + normal). If such a transformation exists, it is stored in a parametric space. By repeating this process, one can detect which transformations are shared by many pairs (using mean shift in the parametric space of transformations), and therefore detect the main local symmetries of the object.
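The voting scheme can be illustrated with a strongly reduced sketch. The assumptions here are not taken from the reference article: only reflections are considered, normals are ignored, every pair of points votes, and the mode is found with a flat-kernel mean shift. The function names and the sample points are hypothetical.

```python
import itertools
import math


def reflection_votes(points):
    """One vote (nx, ny, nz, d) per point pair: the plane that mirrors p onto q."""
    votes = []
    for p, q in itertools.combinations(points, 2):
        diff = [a - b for a, b in zip(p, q)]
        length = math.sqrt(sum(c * c for c in diff))
        if length < 1e-9:
            continue
        n = [c / length for c in diff]
        # Canonical orientation, so that n and -n produce the same vote.
        first = next(c for c in n if abs(c) > 1e-9)
        if first < 0:
            n = [-c for c in n]
        # Plane offset: the plane passes through the midpoint of p and q.
        d = sum(nc * (a + b) / 2.0 for nc, a, b in zip(n, p, q))
        votes.append((n[0], n[1], n[2], d))
    return votes


def mean_shift_mode(votes, start, bandwidth, iterations=30):
    """Flat-kernel mean shift from start; returns (mode, number of nearby votes)."""
    mode, support = start, 0
    for _ in range(iterations):
        near = [v for v in votes if math.dist(v, mode) <= bandwidth]
        if not near:
            break
        support = len(near)
        mode = tuple(sum(c) / len(near) for c in zip(*near))
    return mode, support


def dominant_reflection(points, bandwidth=0.1):
    """Run mean shift from every vote and keep the mode with the most support."""
    votes = reflection_votes(points)
    return max(
        (mean_shift_mode(votes, v, bandwidth) for v in votes),
        key=lambda result: result[1],
    )


# Hypothetical sample: four point pairs mirrored across the plane x = 0.
half = [(1.0, 0.0, 0.0), (2.0, 1.0, 0.5), (0.5, -1.0, 2.0), (1.5, 2.0, -1.0)]
example_points = half + [(-x, y, z) for x, y, z in half]
mode, support = dominant_reflection(example_points)
```

On real meshes, voting over all pairs is far too expensive and noisy; pruning candidate pairs with the normals, as the description suggests, is what would make the accumulation tractable.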

The project consists of implementing the technique described in the reference article, which robustly detects symmetries in 3D surfaces. The technique is based on the definition of a diffusion distance (see the images on the left) associated with a fuzzy similarity function computed on the raw data, which may contain imperfect symmetries or missing data.

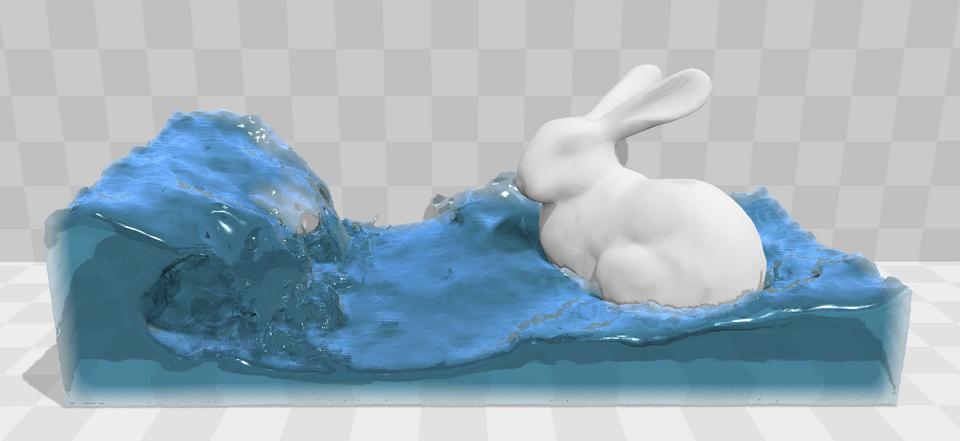

This project targets Position Based Fluids (PBF), a popular variant of particle-based liquid simulation. It requires essential programming/software skills for generating computer animations and a good understanding of numerical fluid simulations, which solve the Navier-Stokes equations that model a variety of fluid flows. You are expected to study and investigate autonomously how to generate liquid animations on a computer.
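For orientation, the core of PBF as it is usually stated in the literature is a per-particle density constraint that is solved by iteratively correcting positions. The summary below is not part of the topic description: the kernel $W$, the smoothing radius $h$, the rest density $\rho_0$, and the relaxation term $\varepsilon$ are left to the implementer, and the notation should be checked against the reference paper.

```latex
\begin{align*}
  % Density constraint on particle i
  C_i(\mathbf{p}_1, \dots, \mathbf{p}_n) &= \frac{\rho_i}{\rho_0} - 1,
  &
  \rho_i &= \sum_j m_j \, W(\mathbf{p}_i - \mathbf{p}_j, h) \\
  % Per-particle multiplier, relaxed by \varepsilon
  \lambda_i &= - \frac{C_i(\mathbf{p}_1, \dots, \mathbf{p}_n)}
                      {\sum_k \lvert \nabla_{\mathbf{p}_k} C_i \rvert^2 + \varepsilon} \\
  % Position correction applied to particle i
  \Delta \mathbf{p}_i &= \frac{1}{\rho_0} \sum_j (\lambda_i + \lambda_j) \,
                         \nabla W(\mathbf{p}_i - \mathbf{p}_j, h)
\end{align*}
```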

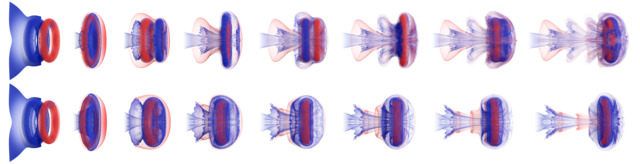

This project aims to build an efficient and effective pipeline for producing smoke animations. It requires a thorough understanding of partial differential equations, in particular the Navier-Stokes equations that model a variety of fluid flows. You are expected to study and investigate autonomously how to generate smoke animations on a computer.
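As a reminder, the incompressible Navier-Stokes equations can be written as below, where $\mathbf{u}$ is the velocity, $p$ the pressure, $\rho$ the density, $\nu$ the kinematic viscosity, and $\mathbf{f}$ the external forces per unit mass. Which terms to keep for smoke (for example, dropping viscosity or adding buoyancy to $\mathbf{f}$) is a modeling choice that this page does not prescribe.

```latex
\begin{align*}
  \frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\,\mathbf{u}
    &= -\frac{1}{\rho} \nabla p + \nu \nabla^2 \mathbf{u} + \mathbf{f} \\
  \nabla \cdot \mathbf{u} &= 0
\end{align*}
```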

For reference, the topics from 2019-20 and 2018-19 are also available.