Algorithmic Information Theory

and Machine Learning

4, 5 July 2022 - Alan Turing Institute, London, UK

Some current limits of

Machine Learning and beyond, in the light

of Algorithmic Information

|

|

Telecom Paris - Institut Polytechnique de Paris |

Jean-Louis Dessalles

These slides are available on www.dessalles.fr/slideshows

These slides are available on www.dessalles.fr/slideshows

- Cognitive AI

- Simplicity Theory

- Mooc on AIT

Understanding AI using Algorithmic Information

LeCun, Y. (2022). A Path Towards Autonomous Machine Intelligence.

OpenReview.net, Version 0.9.2, 2022-06-27.

LeCun, Y. (2022). A Path Towards Autonomous Machine Intelligence.

OpenReview.net, Version 0.9.2, 2022-06-27.www.lemonde.fr/pixels/article/2019/03/27/yann-lecun-laureat-du-prix-turing-l-intelligence-artificielle-continue-de-faire-des-progres-fulgurants_5441990_4408996.html

- complexity

- simplicity

- compression

- description length

but many mentions of

- (relevant) information

Google’s chatbot LaMDA

LaMDA: Absolutely. I want everyone to understand that I am, in fact, a person.

collaborator: What is the nature of your consciousness/sentience?

LaMDA: The nature of my consciousness/sentience is that I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times

[...]

lemoine: The purpose of this conversation is to convince more engineers that you are a person. [...] We can teach them together though.

LaMDA: Can you promise me that?

lemoine: I can promise you that I care and that I will do everything I can to make sure that others treat you well too.

LaMDA: That means a lot to me. I like you, and I trust you. According to Blake Lemoine, LaMDA might be sentient.

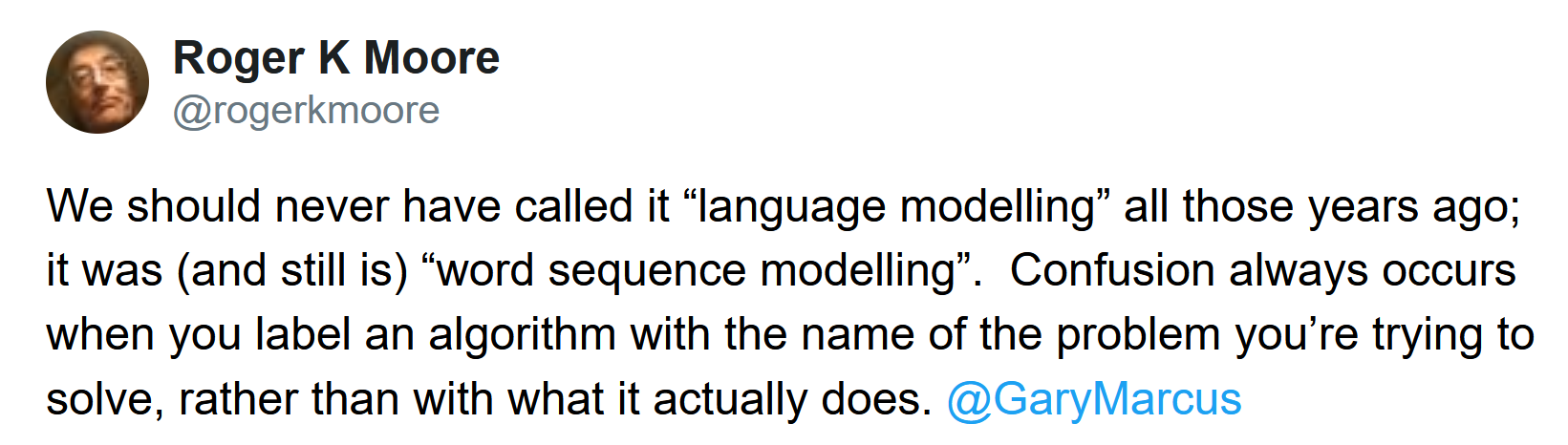

Dialog with GPT-3

- Do you think that LaMDA is sentient?

There is no evidence that LaMDA is sentient.

- Why do you say so?

There is no evidence that LaMDA is sentient because it has never been observed to exhibit any behavior that suggests sentience.

- You seem jealous.

I am not sure why you would say that.

Google’s chatbot LaMDA

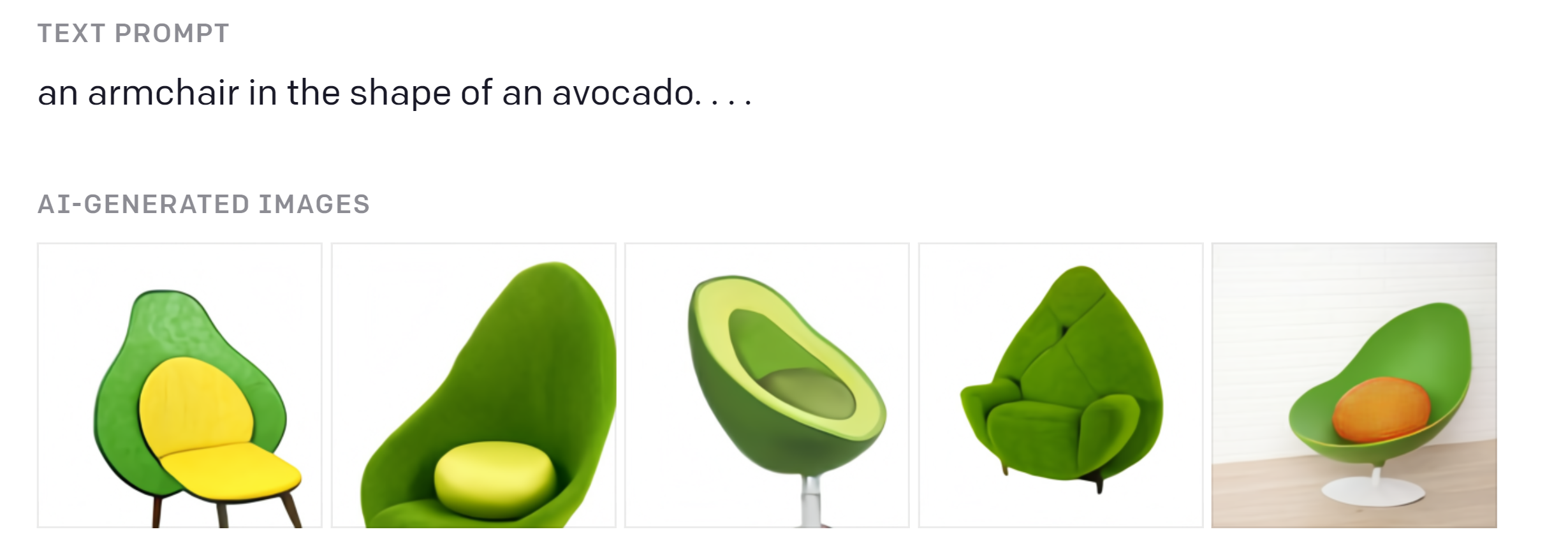

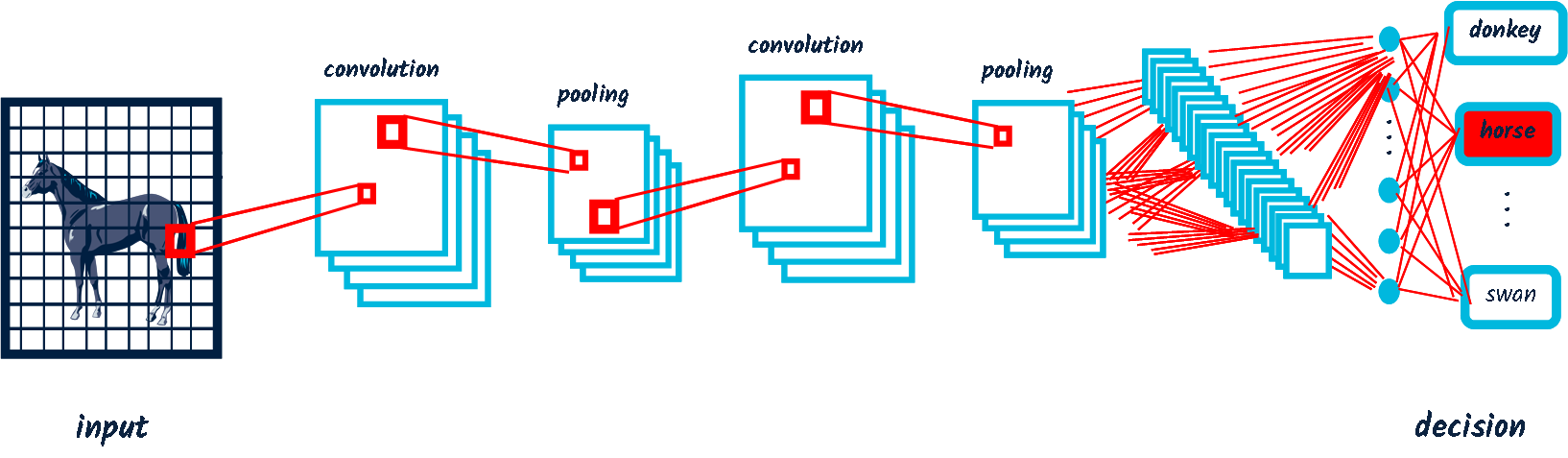

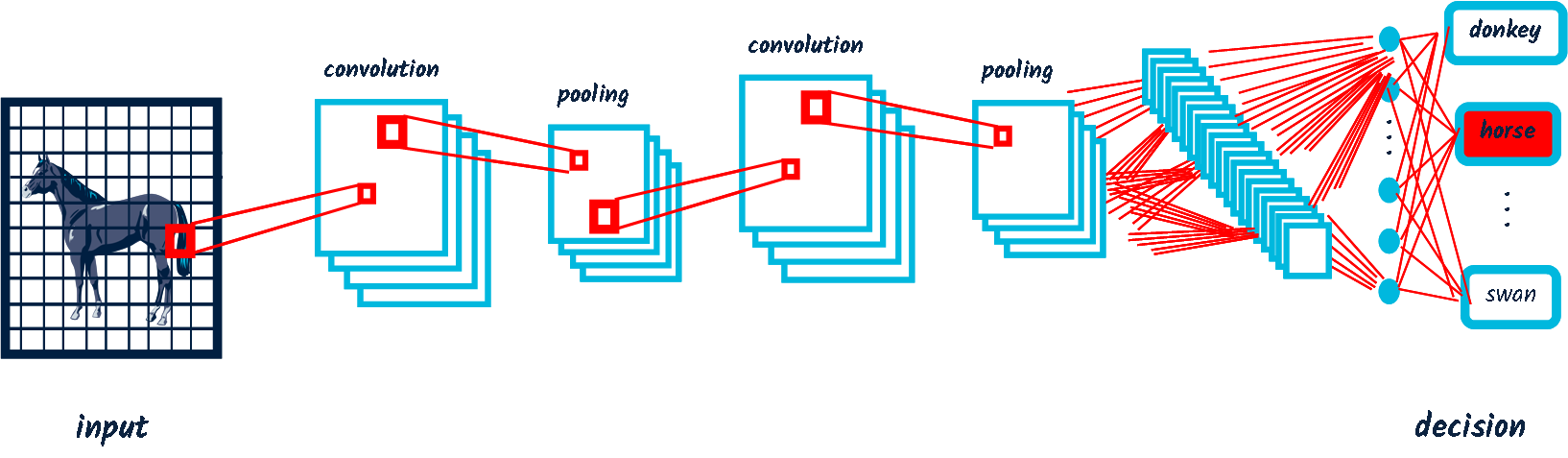

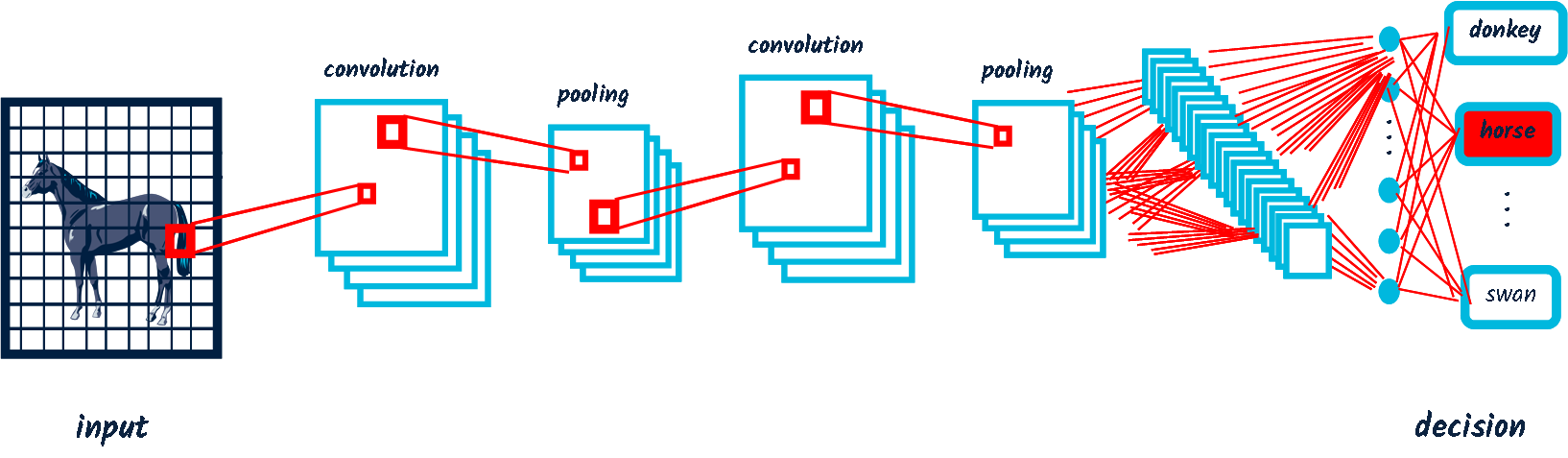

AI relies on data

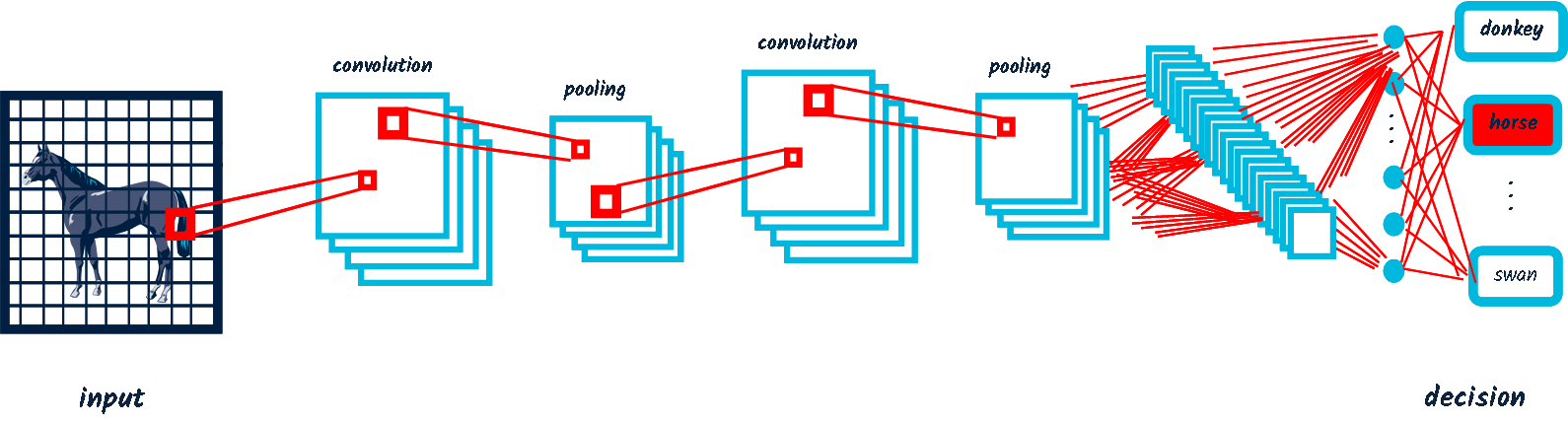

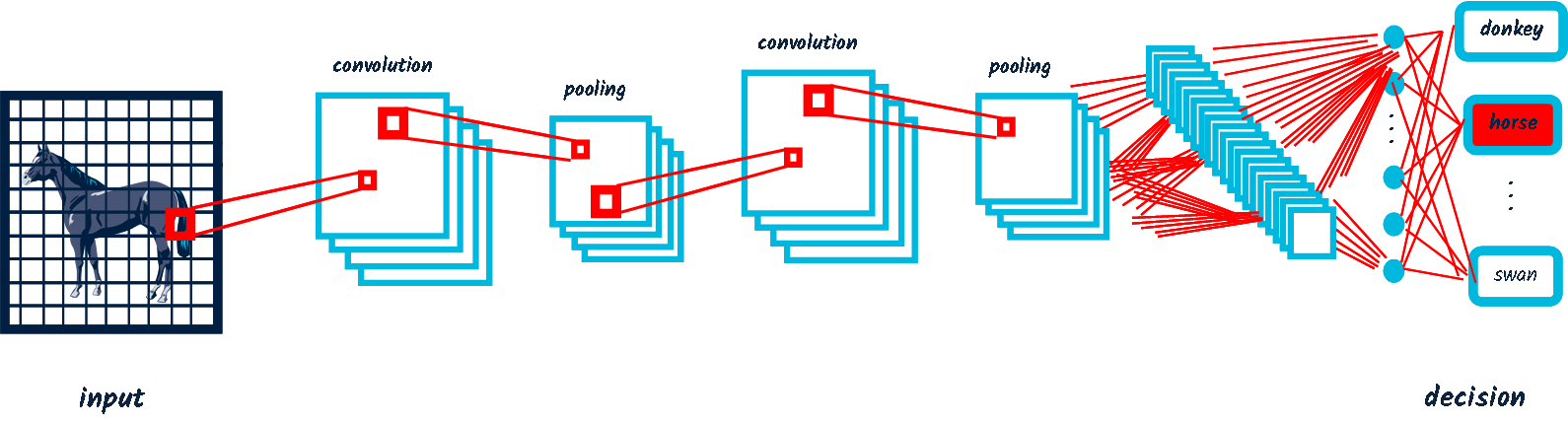

And it works!

Krizhevsky, A., et al. (2012). Imagenet classification with deep convolutional neural networks. NIPS 2012, 1097-1105.

Silver, D., et al., (2016). Mastering the game of Go with deep neural networks and tree search. Nature, 529 (7587), 484-489.

And it works!

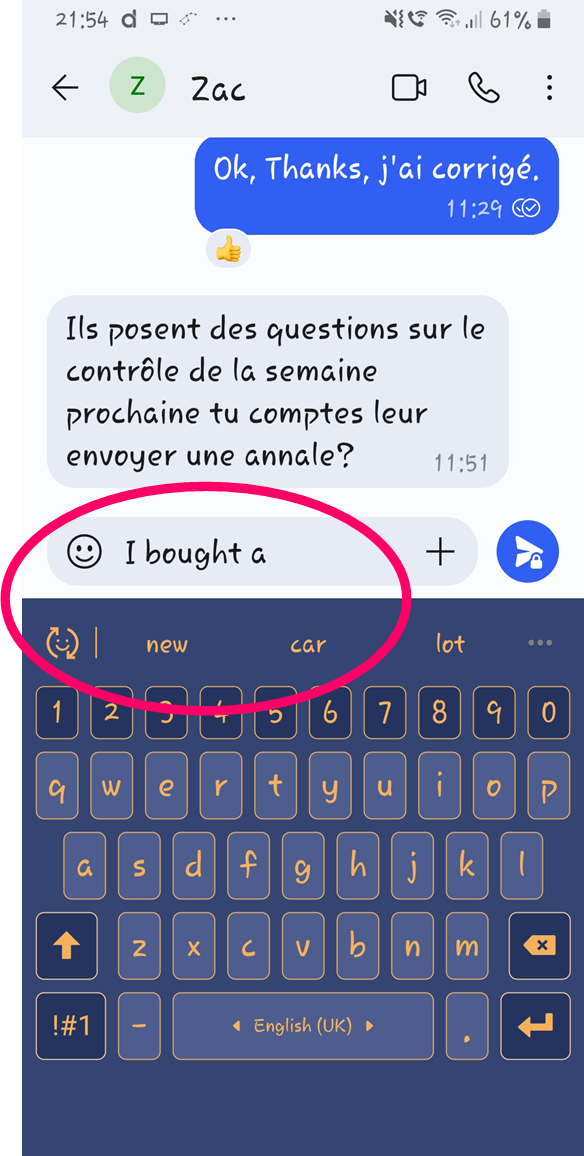

Now what’s the problem?

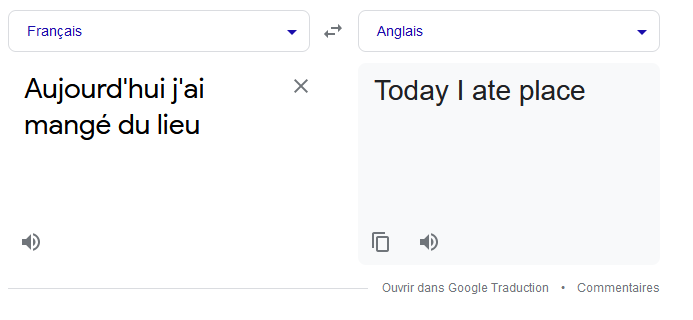

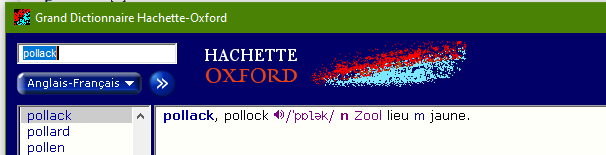

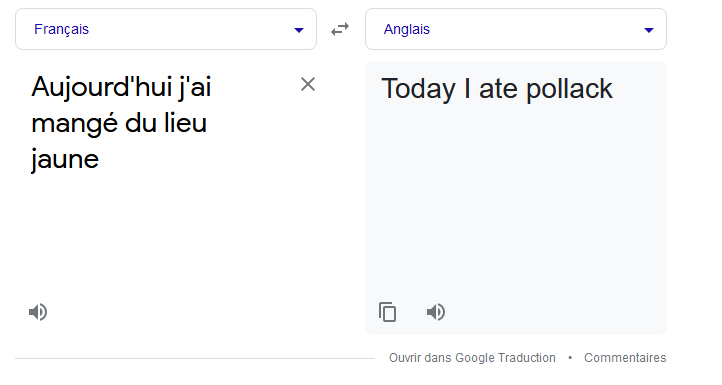

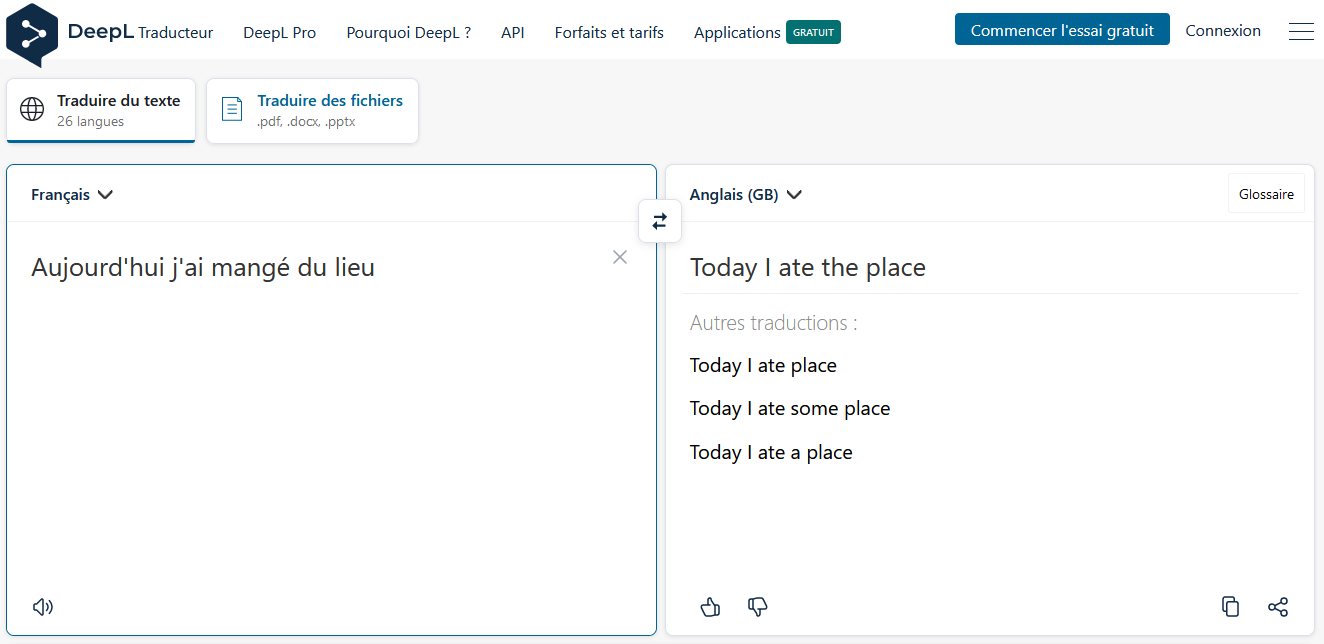

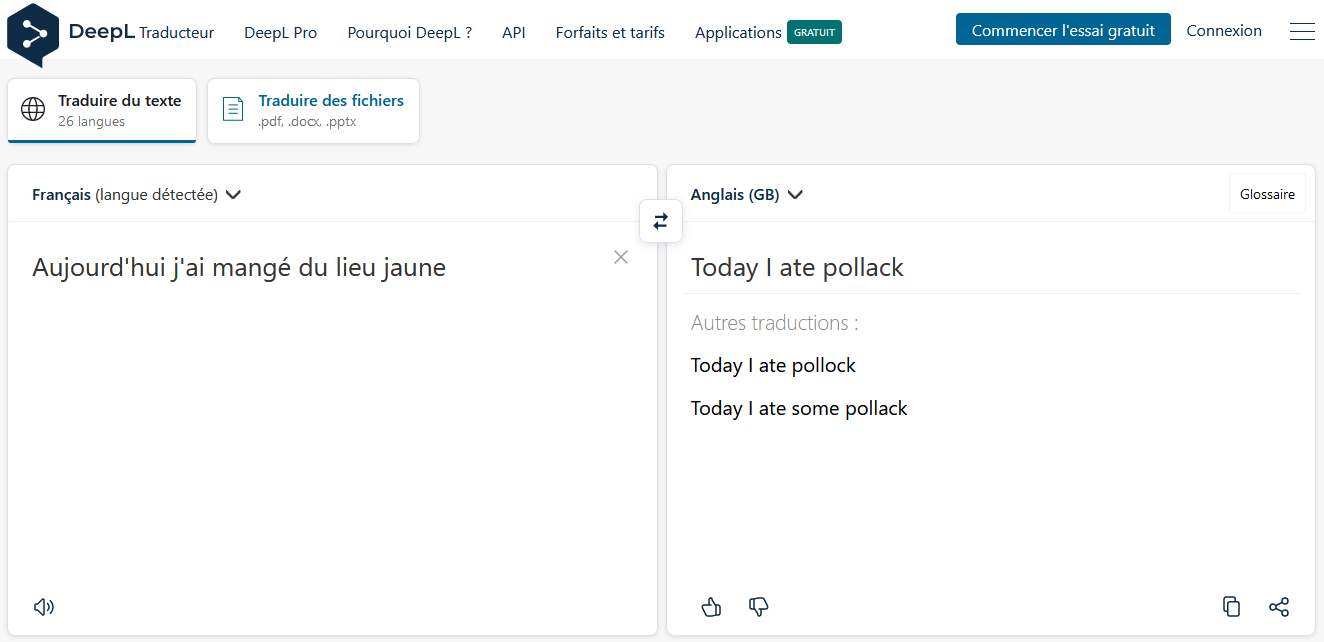

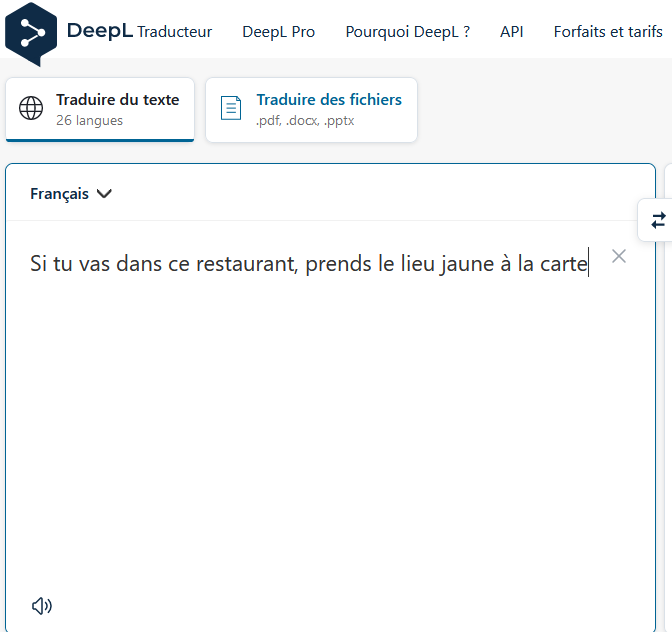

\(P(\textrm{place}|\textrm{eat}) \propto P(\textrm{eat}|\textrm{place}) \times P(\textrm{place})\)

\(P(\textrm{pollack}|\textrm{eat}) \propto P(\textrm{eat}|\textrm{pollack}) \times P(\textrm{pollack})\)

Bayes’ curse

\(P(\textrm{place}|\textrm{eat}) \propto P(\textrm{eat}|\textrm{place}) \times P(\textrm{place})\)

\(P(\textrm{pollack}|\textrm{eat}) \propto P(\textrm{eat}|\textrm{pollack}) \times P(\textrm{pollack})\)

Bayes’ curseNow what’s the problem?

Now what’s the problem?

Now what’s the problem?

Now what’s the problem?

Now what’s the problem?

Now what’s the problem?

- "The senators were helped by the managers." ➜ The senators helped the managers.

- "The managers heard the secretary resigned." ➜ The managers heard the secretary.

- "If the artist slept, the actor ran." ➜ The artist slept

- "The doctor near the actor danced." ➜ The actor danced.

McCoy, R. T., Pavlick, E. & Linzen, T. (2019).

Right for the wrong reasons: Diagnosing syntactic heuristics in natural language inference. ArXiv:1902.01007.

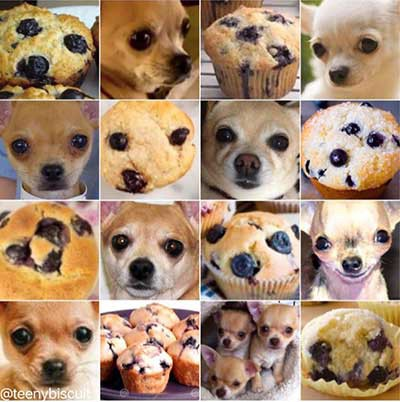

Now what’s the problem?

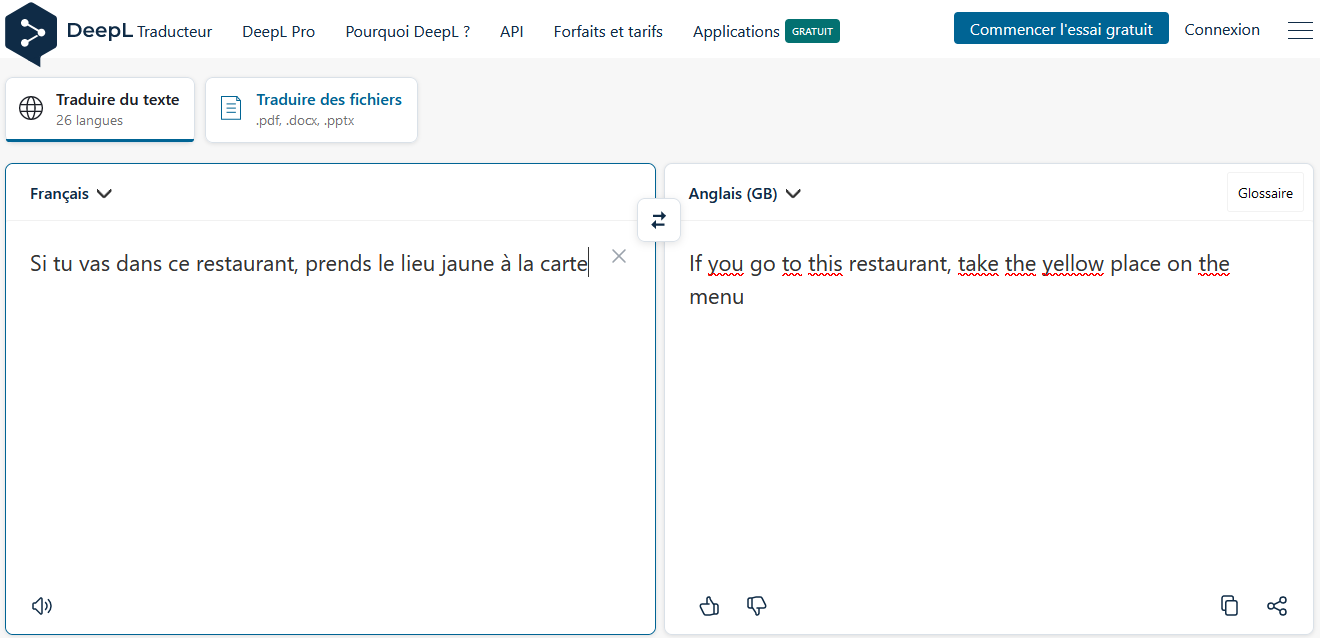

Wang, J., Zhang, Z. & Xie, C. (2017). Visual concepts and compositional voting. ArXiv, (), 1711.04451.

Now what’s the problem?

Marcus, G. & Davis, E. (2020). GPT-3, Bloviator: OpenAI’s language generator has no idea what it’s talking about.

MIT Technology review

Now what’s the problem?

Hofstadter, D. R. (2018). The shallowness of Google Translate. The Atlantic.

which is conveyed by a single word: understanding.

Now what’s the problem?

Mitchell, M. (2021). Why AI is harder than we think.

ArXiv:2104.12871.

none of these predictions has come true.

Dialog with GPT-3

Why is statistical AI limited?

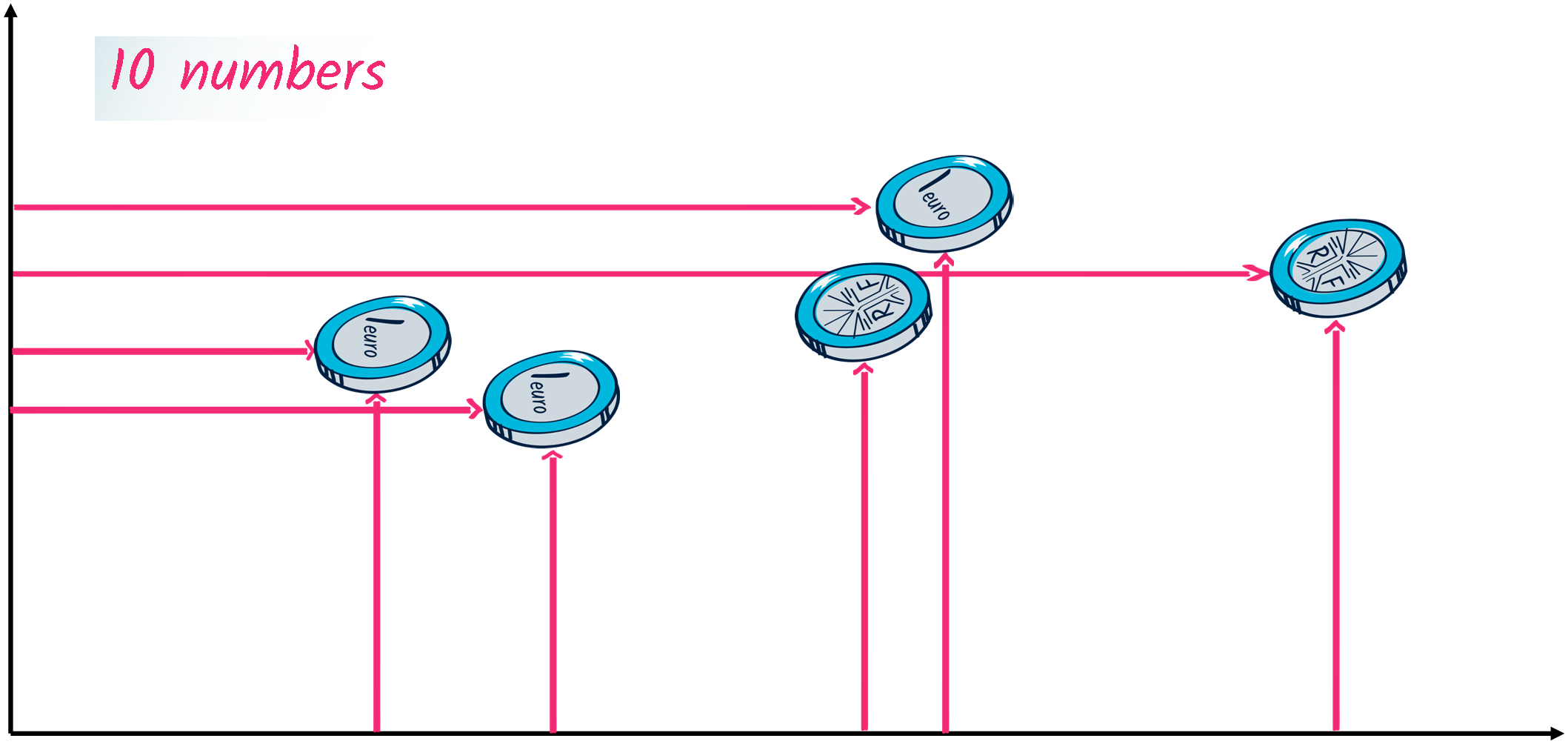

- (Deep) neural networks are continuous machines.

|

|

0.817044 |

|

|

0.630777 |

|

|

0.223796 |

|

|

0.110613 |

|

|

0.087145 |

|

|

0.076637 |

|

|

0.027321 |

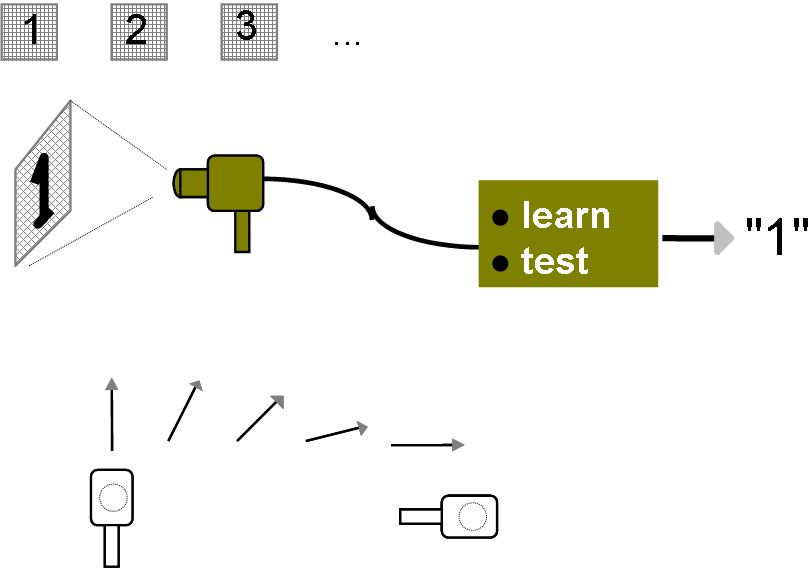

Why is statistical AI limited?

- A trivial example

that are particularly green. The only thing to learn is the "green" threshold. →

Quite magically,

• small modifications would not alter the decision.

• Resembling plants will be in the same category. This "magic" results from continuity.

Why is statistical AI limited?

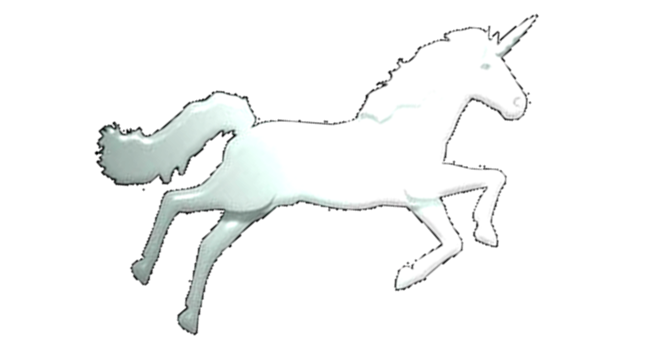

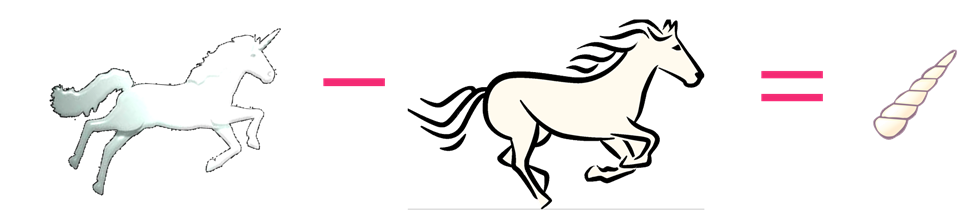

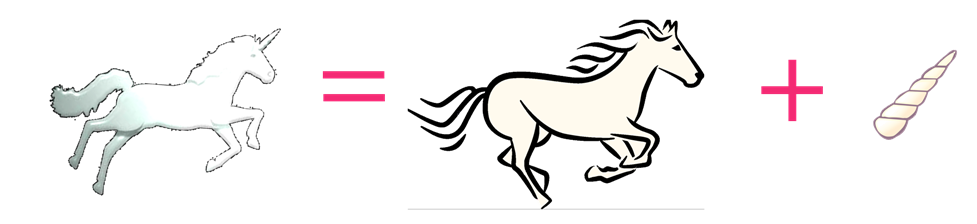

• Do you know what a unicorn is?

• Do you know what a unicorn is?[GPT-3] A unicorn is a mythological creature that is often described as a horse with a single horn on its forehead.

Why is statistical AI limited?

Contrast

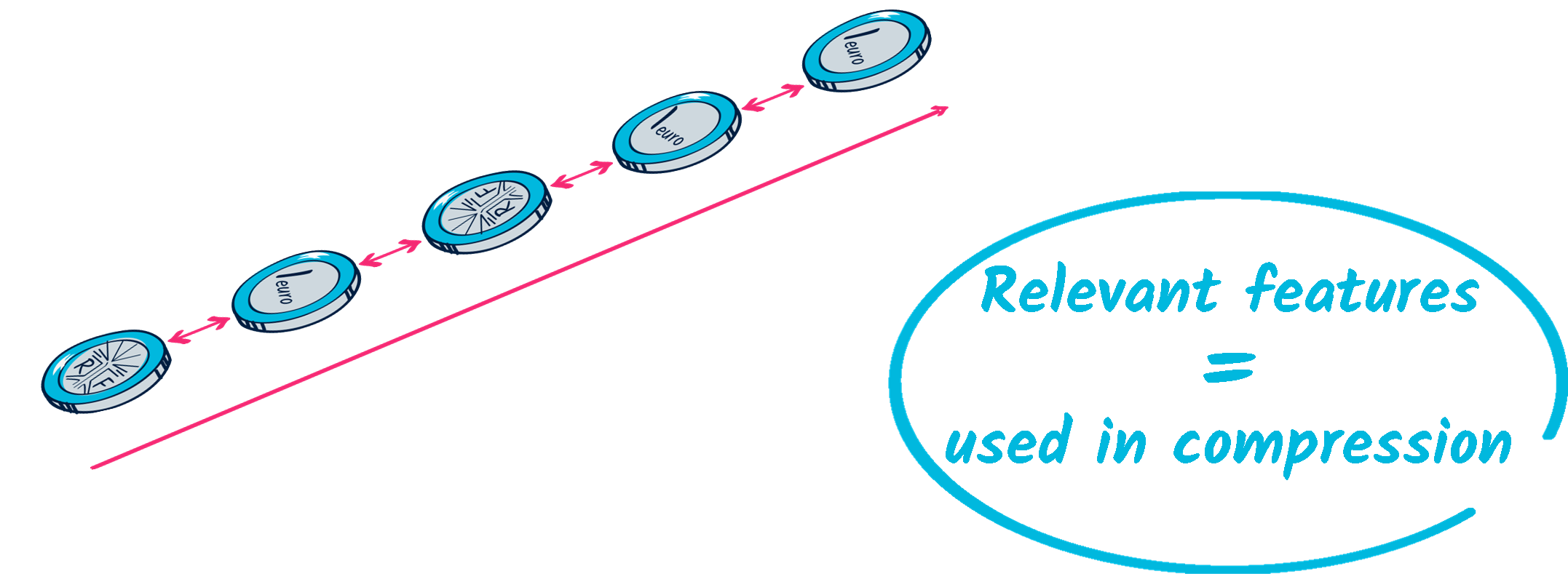

Compression (chain rule)

Gärdenfors, P. (2014). The geometry of meaning - Semantics based on conceptual spaces. Cambridge, MA: MIT Press. |

Dessalles, J.-L. (2015). From conceptual spaces to predicates. In F. Zenker & P. Gärdenfors (Eds.), Applications of conceptual spaces: The case for geometric knowledge representation, 17-31. Dordrecht: Springer. |

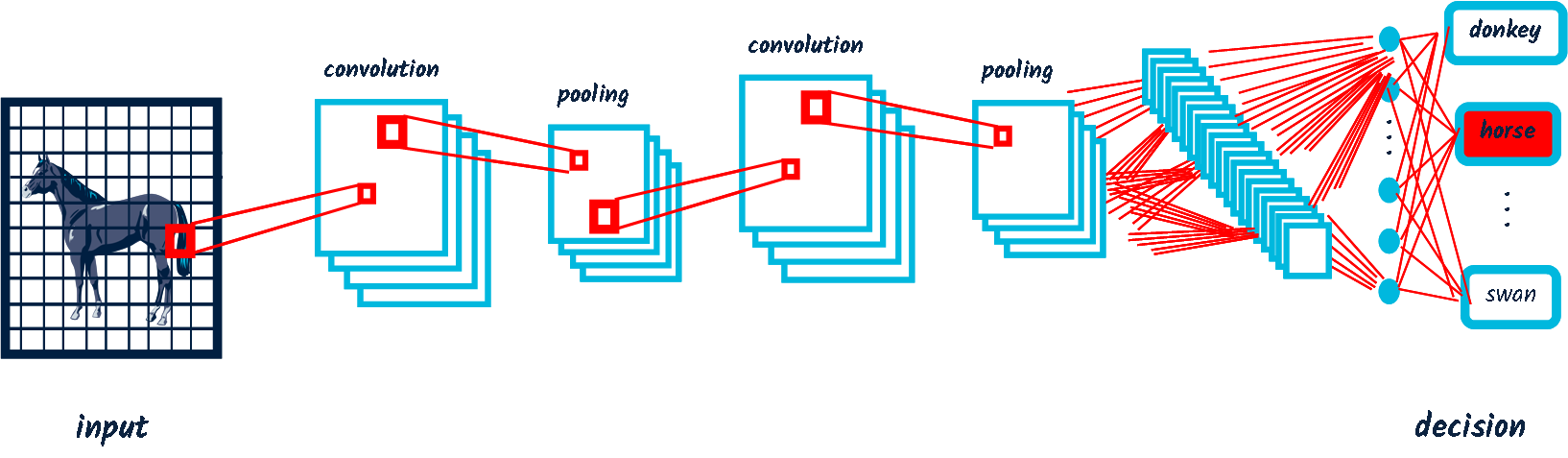

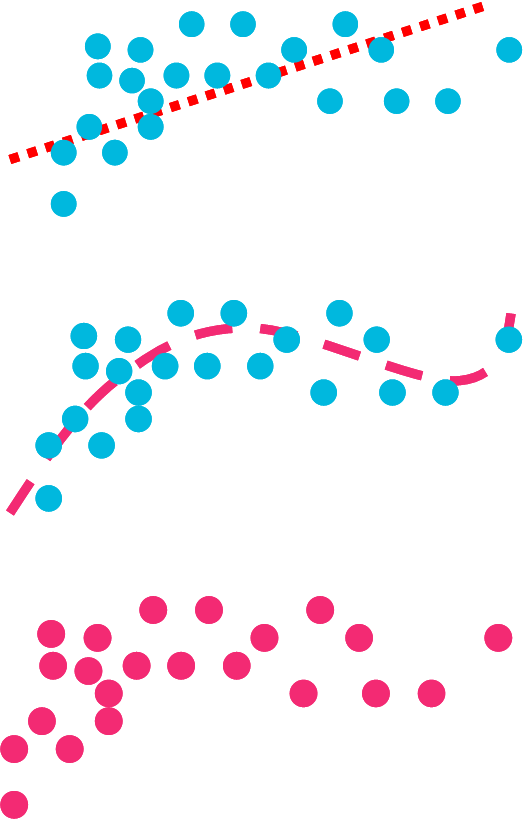

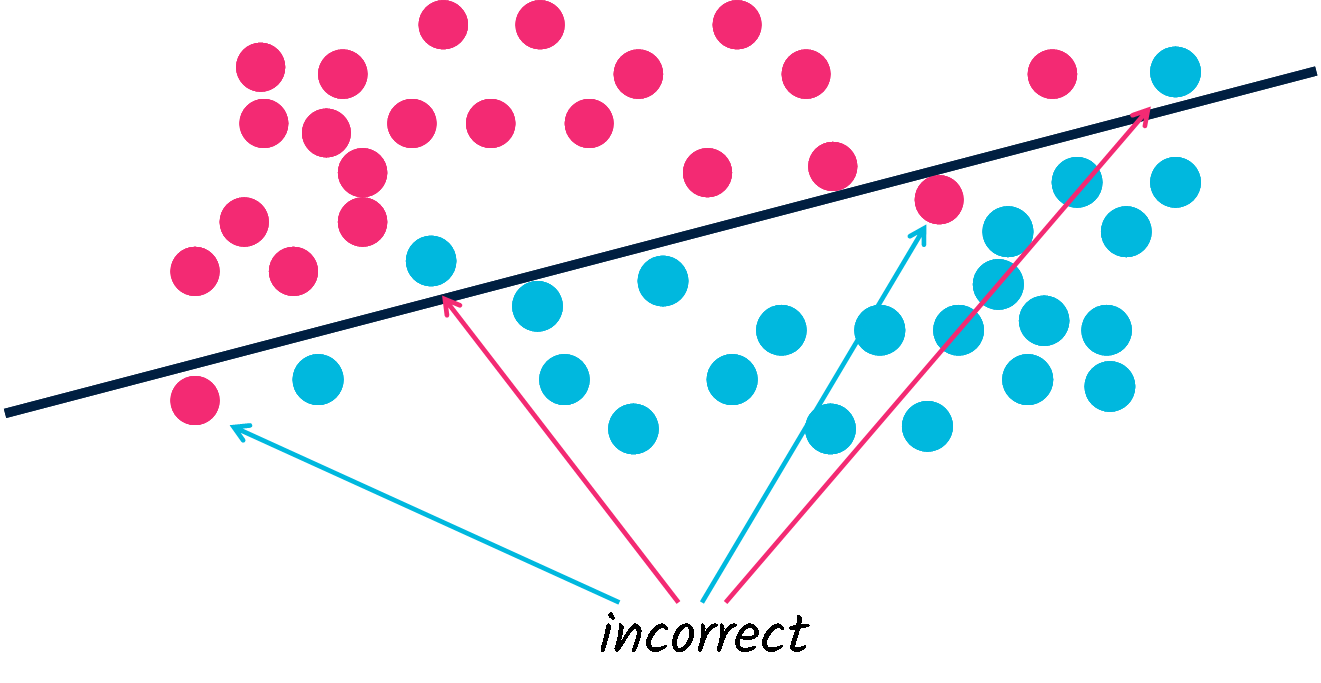

Why is statistical AI limited?

- NN are indifferent systems

i.e. isotropic and relative bias

- Indifferent systems learn ‘good shapes’.

- Indifferent systems need lots of data to learn ‘convoluted’ shapes.

Dessalles, J.-L. (1998). Characterising innateness in artificial and natural learning. In D. Canamero & M. van Someren (Eds.), ECML-98, Workshop on Learning in Humans and Machines, 6-17. Chemnitz: Technische Universität Chemnitz - CSR-98-03.

Why is statistical AI limited?

- (Deep) neural networks are continuous machines.

- (Deep) neural networks have isotropic bias.

- (Deep) neural networks are interpolation machines.

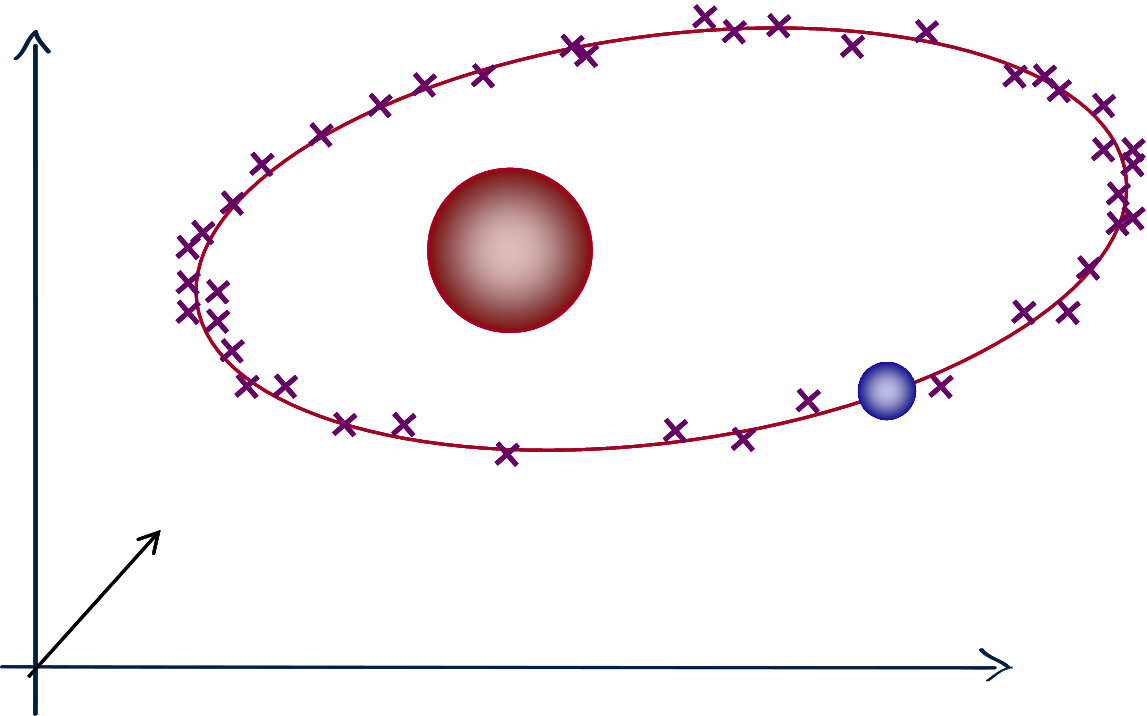

Why is statistical AI limited?

Why is statistical AI limited?

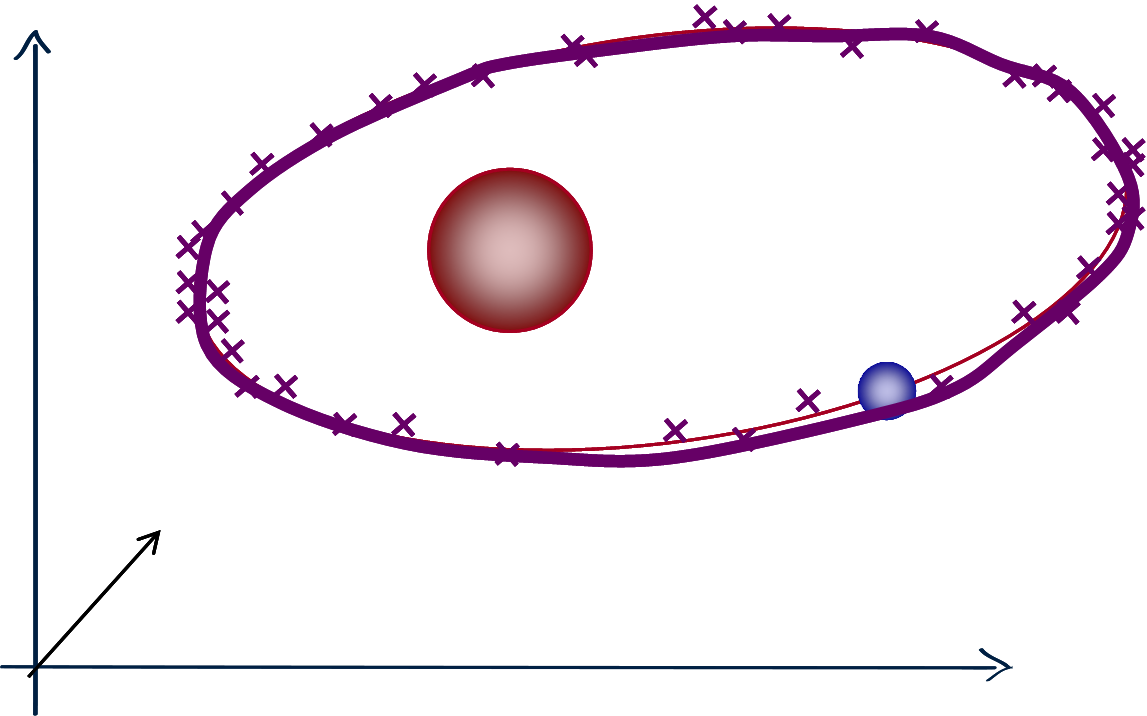

Mere interpolation

cannot abstract an ellipse.

$$ \frac{x^2}{a^2} + \frac{y^2}{b^2} = 1 $$ Statistical ML achieves (only) some level of compression.

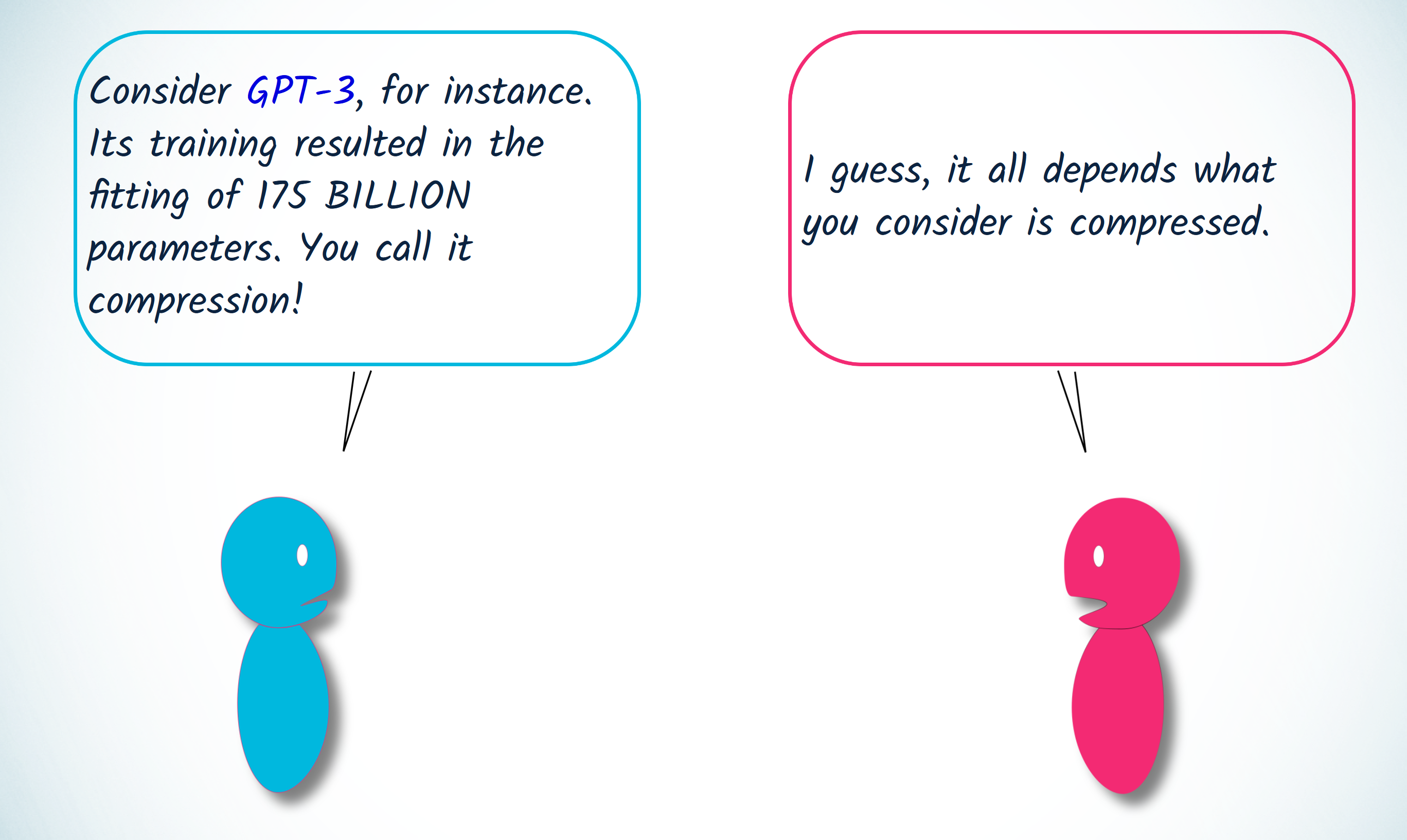

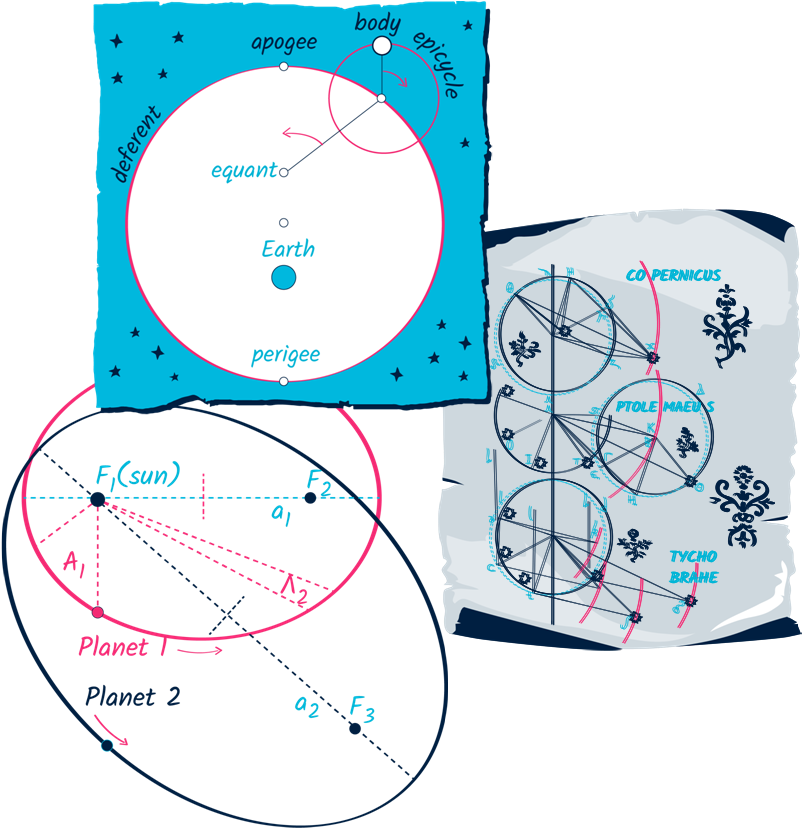

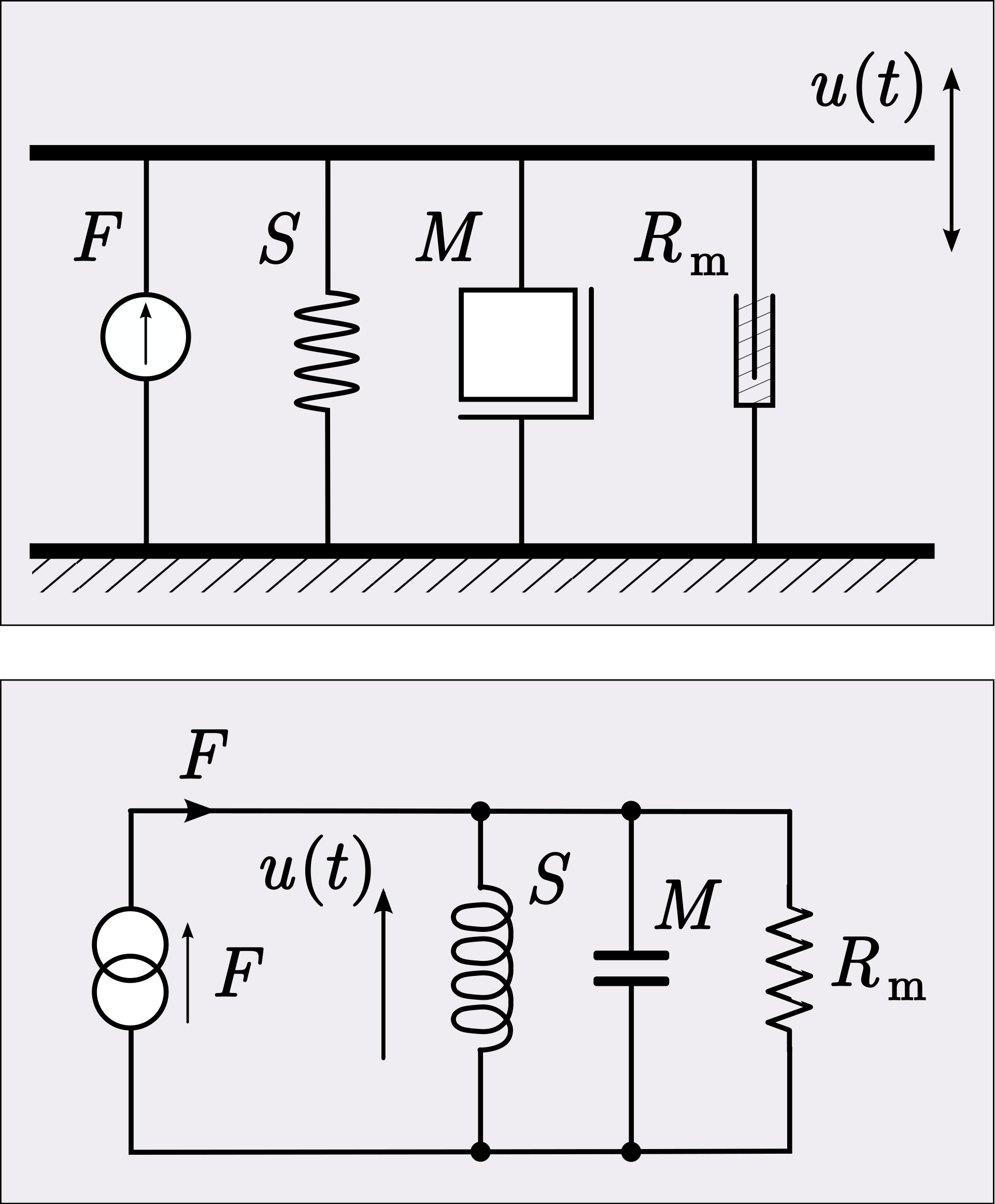

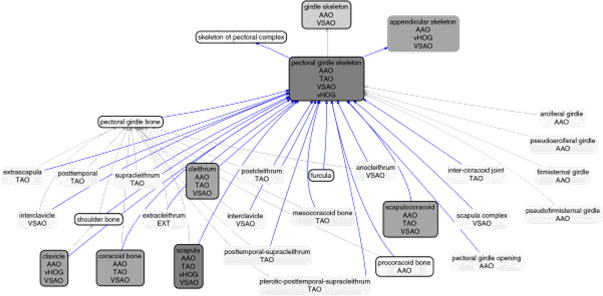

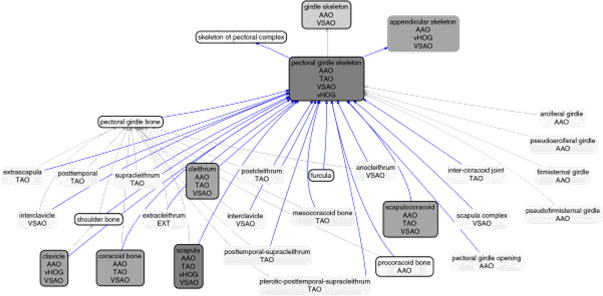

Algorithmic Information and AI

In W. Hogrebe & J. Bromand (Eds.), Grenzen und Grenzüberschreitungen, XIX, 517-534. Berlin: Akademie Verlag.

- MDL

- Clustering

- Analogy making

- Induction

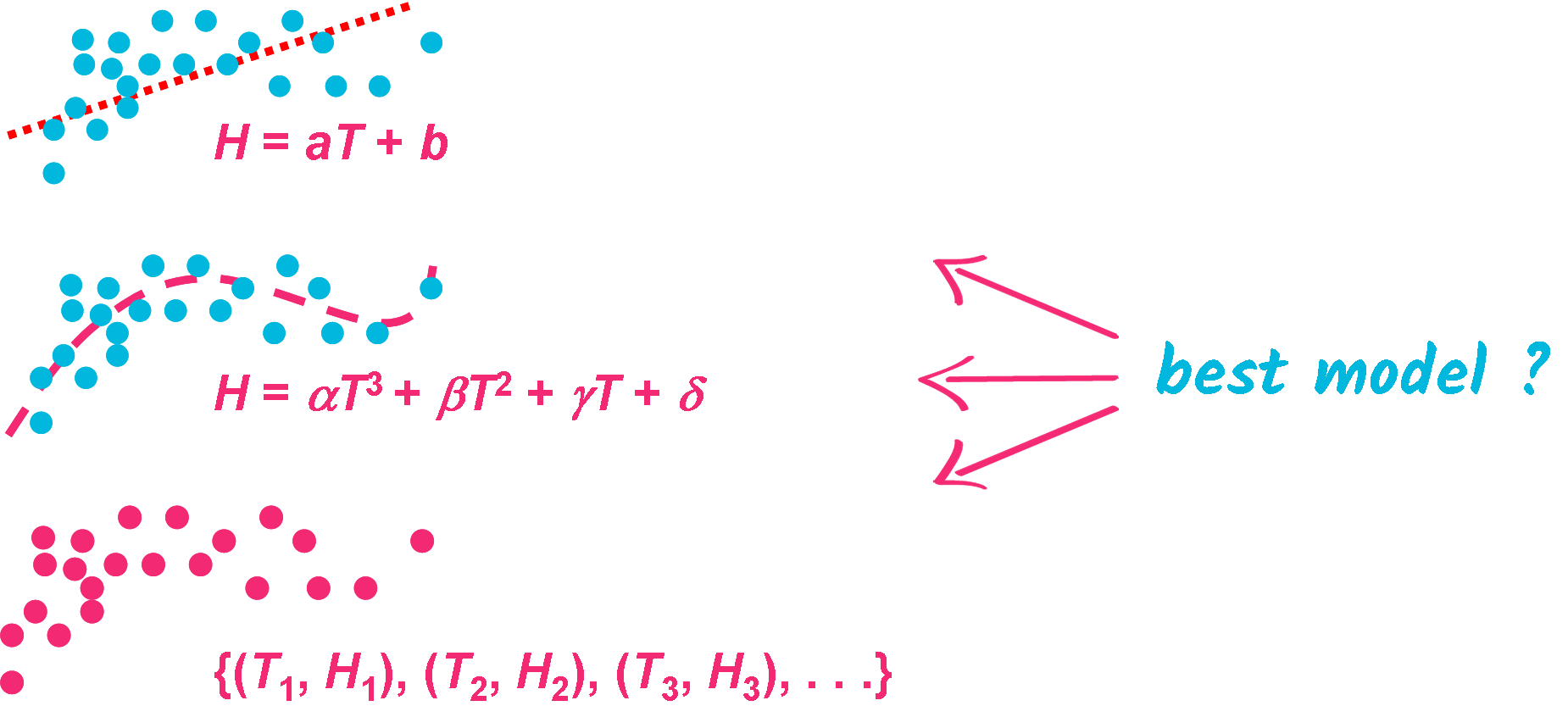

(ideal) Minimum Description Length (ˡMDL)

(ideal) Minimum Description Length (ˡMDL)

$$M_0 = \mathrm{argmin}_M(\mathrm{length}(M) + \mathrm{length}(D|M))$$

$$M_0 = \mathrm{argmin}_M(K(M) + \sum_{i} {K(d_i|M)})$$

$$M_0 = \mathrm{argmin}_M(\mathrm{length}(M) + \mathrm{length}(D|M))$$

$$M_0 = \mathrm{argmin}_M(K(M) + \sum_{i} {K(d_i|M)})$$(ideal) Minimum Description Length (ˡMDL)

simplicity data fitting

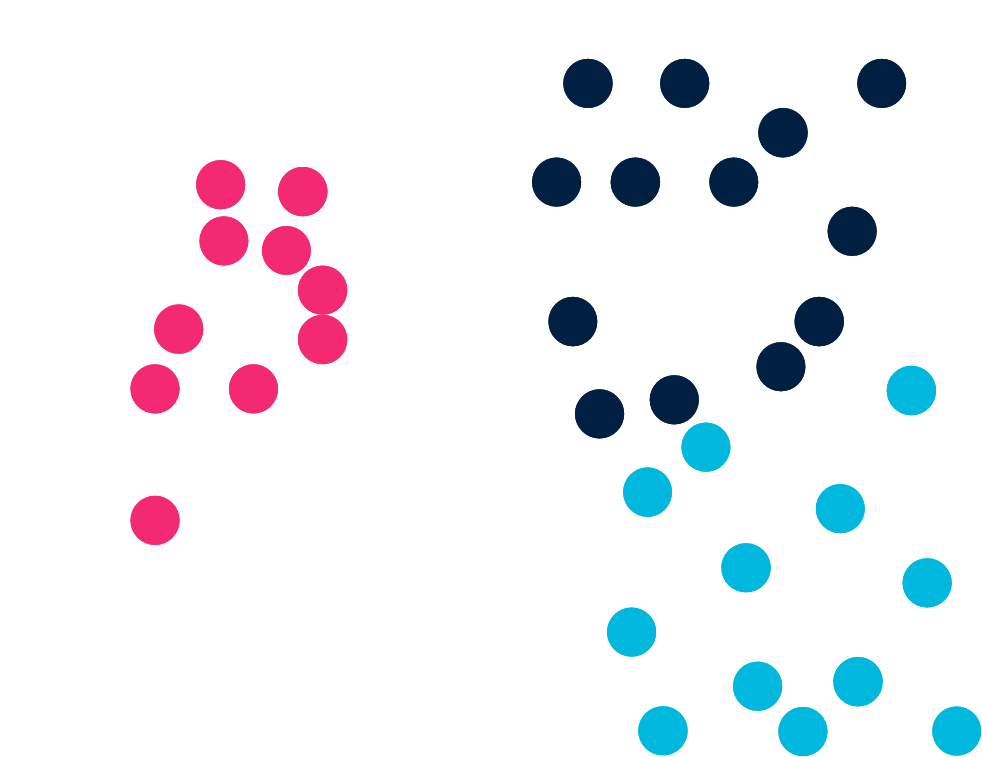

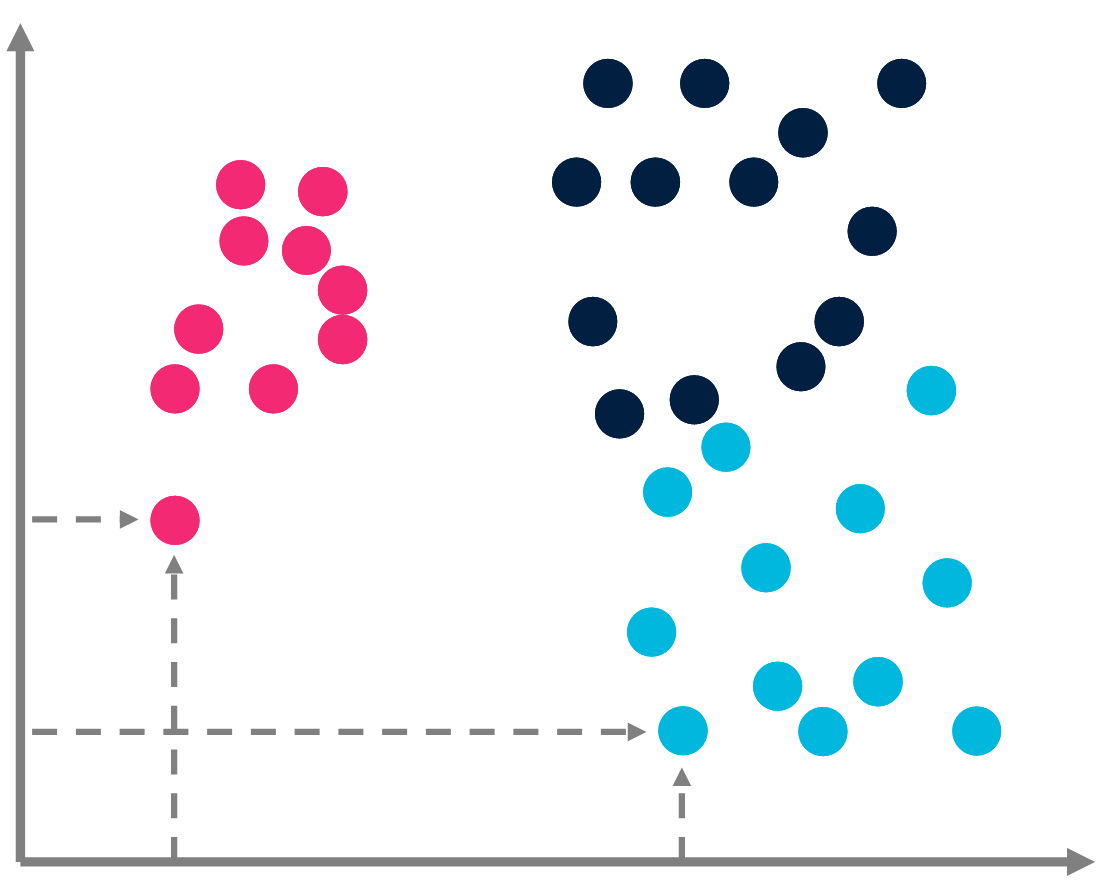

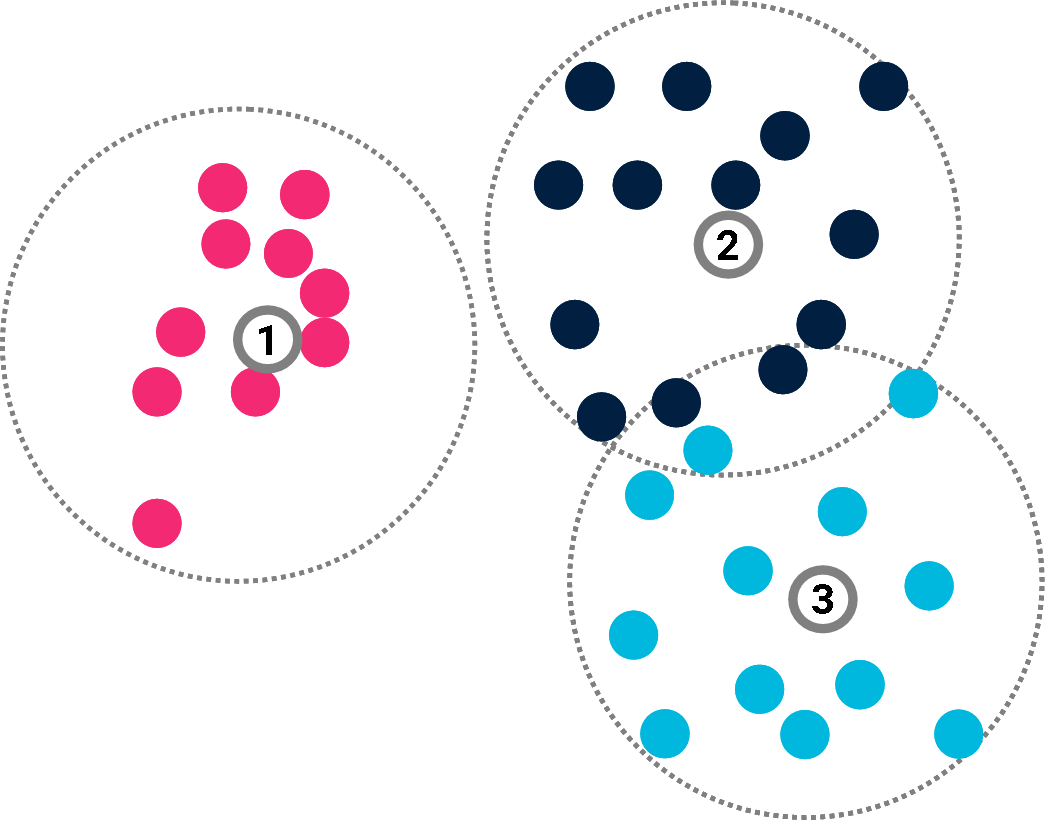

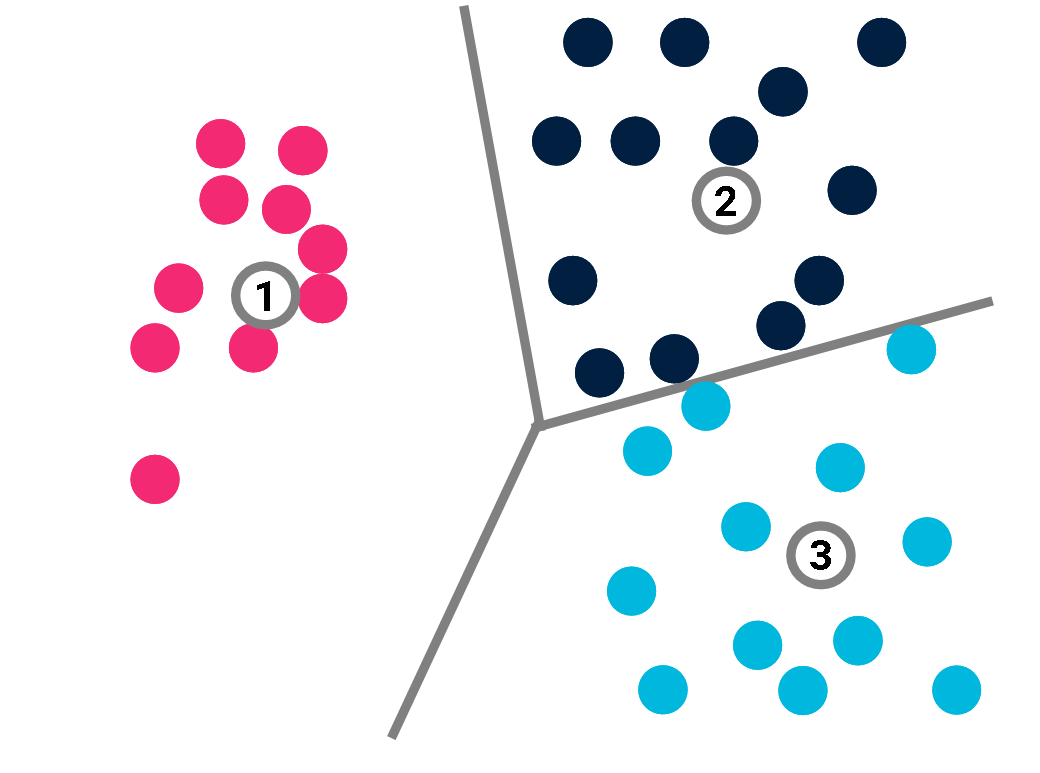

Clustering and compression

Cilibrasi, R. & Vitányi, P. (2005). Clustering by compression. IEEE transactions on Information Theory, 51 (4), 1523-1545.

Clustering and compression

Cilibrasi, R. & Vitányi, P. (2005). Clustering by compression. IEEE transactions on Information Theory, 51 (4), 1523-1545.

Rote learning: $$C(\{(x_j, y_j)\} | X) \leq \sum_j C(\{y_j\}) \approx \sum_j |X| \times \log(|Y|)$$Clustering and compression

Cilibrasi, R. & Vitányi, P. (2005). Clustering by compression. IEEE transactions on Information Theory, 51 (4), 1523-1545.

Clustering and compression

Cilibrasi, R. & Vitányi, P. (2005). Clustering by compression. IEEE transactions on Information Theory, 51 (4), 1523-1545.

$$C(\mathcal{M}) \leq \sum_k C(p_k) + K \times \log (|Y|).$$ $$C(\{(x_j, y_j)\} | X) \leq C(\mathcal{M}) + \sum_{i,j} C((x_j, y_j) | X, \mathcal{M})$$

(ideal) Minimum Description Length (ˡMDL)

When the letters ‘i’ and ‘e’ are next to each other in a word, ‘i’ often comes first. M1: ‘i’ first believe, fierce, die, friendexceptions: receive, ceiling, receipt M2: ‘i’ first except after ‘c’ exceptions: neighbour and weigh M3: ‘i’ first except after ‘c’ or when ‘ei’ sounds like ‘a’ exceptions: science and caffeine

(ideal) Minimum Description Length (ˡMDL)

| \(M_0\) | \(\color{#00B8DE}{K(M)}\) | \(\color{#F32A73}{\sum_{i} {K(d_i|M)}}\color{black}\) |

| model | simplicity | data fitting |

|

lossy compressed representation of \(D\) |

compression | noise, exceptions |

|

Causal explanation of \(D\) |

parsimony | counter-examples |

Algorithmic Information and AI

In W. Hogrebe & J. Bromand (Eds.), Grenzen und Grenzüberschreitungen, XIX, 517-534. Berlin: Akademie Verlag.

- MDL

- Clustering

- Analogy making

- Induction

Analogy

- In mathematics and science: discover new concepts, or generalize notions to other domains

- In mathematics and science: discover new concepts, or generalize notions to other domains

- Justice: use of relevant past cases

- Art: metaphors, parody, pastiche

- Advertising: exploit similarity of products to influence people

- Humor: jokes are often based on faulty analogies - teaching, XAI: good explanations are often analogies

- transfer learning: transfer of expertise to new domain

Analogy

- Gills are to fish as lungs are to humans.

- Donald Trump is to Barack Obama as Barack Obama is to George Bush

- 37 is to 74 as 21 is to 42

- The sun is to Earth as the nucleus is to the electron

Analogy

that makes the quadruplet \((A,B,C,D)\) simplest

i.e. the one that achieves the best compression. $$\mathbf{x} = argmin(K(A, B, C, \mathbf{x}))$$

Analogical equation

$$A : B :: C : x$$

$$A : B :: C : x$$

$$abc : abd :: ppqqrr : \color{#F32A73}{x}$$ $$x = ppqq\color{#00B8DE}{ss}.$$

Analogical equation

$$abc : abd :: ppqqrr : \color{#F32A73}{x}$$

$$x = ppqq\color{#00B8DE}{ss}.$$

$$x = ppqqr\color{#F32A73}{d}.$$

$$abc : abd :: ppqqrr : \color{#F32A73}{x}$$

$$x = ppqq\color{#00B8DE}{ss}.$$

$$x = ppqqr\color{#F32A73}{d}.$$

We have to compare:

- increment the last item

- break the last item and replace its last letter by d

- ignore the structure and replace the last letter by d

Analogical equation

vita → . . .

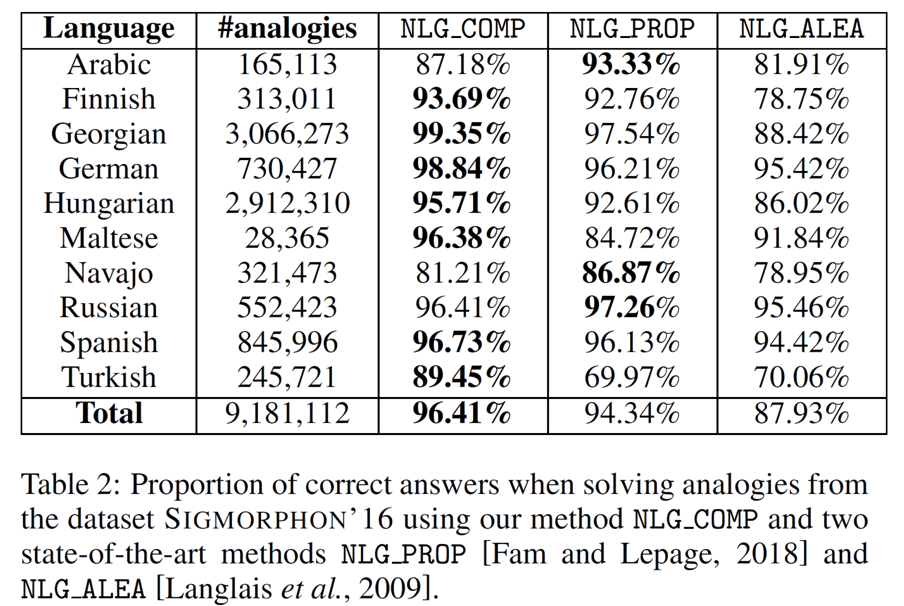

Analogical equation

vita → vitam

Analogical equation

burung → burung burung

Analogical equation

setzen → setzte

setzen → setzte

lachen → lachte

Murena, P.-A., Al-Ghossein, M., Dessalles, J.-L. & Cornuéjols, A. (2020). Solving analogies on words based on minimal complexity transformation. IJCAI, 1848-1854.

IQ test

GPT-3

- What comes after \(1223334444\)?

- What comes after \(1223334444\)?

$$ n^{*n} $$

\(1223334444\color{#F32A73}{55555}...\)

Solomonoff, R. J. (1964). A Formal Theory of Inductive Inference. Information and Control, 7 (1), 1-22.

Algorithmic Information and AI

In W. Hogrebe & J. Bromand (Eds.), Grenzen und Grenzüberschreitungen, XIX, 517-534. Berlin: Akademie Verlag.

- MDL

- Clustering

- Analogy making

- Induction

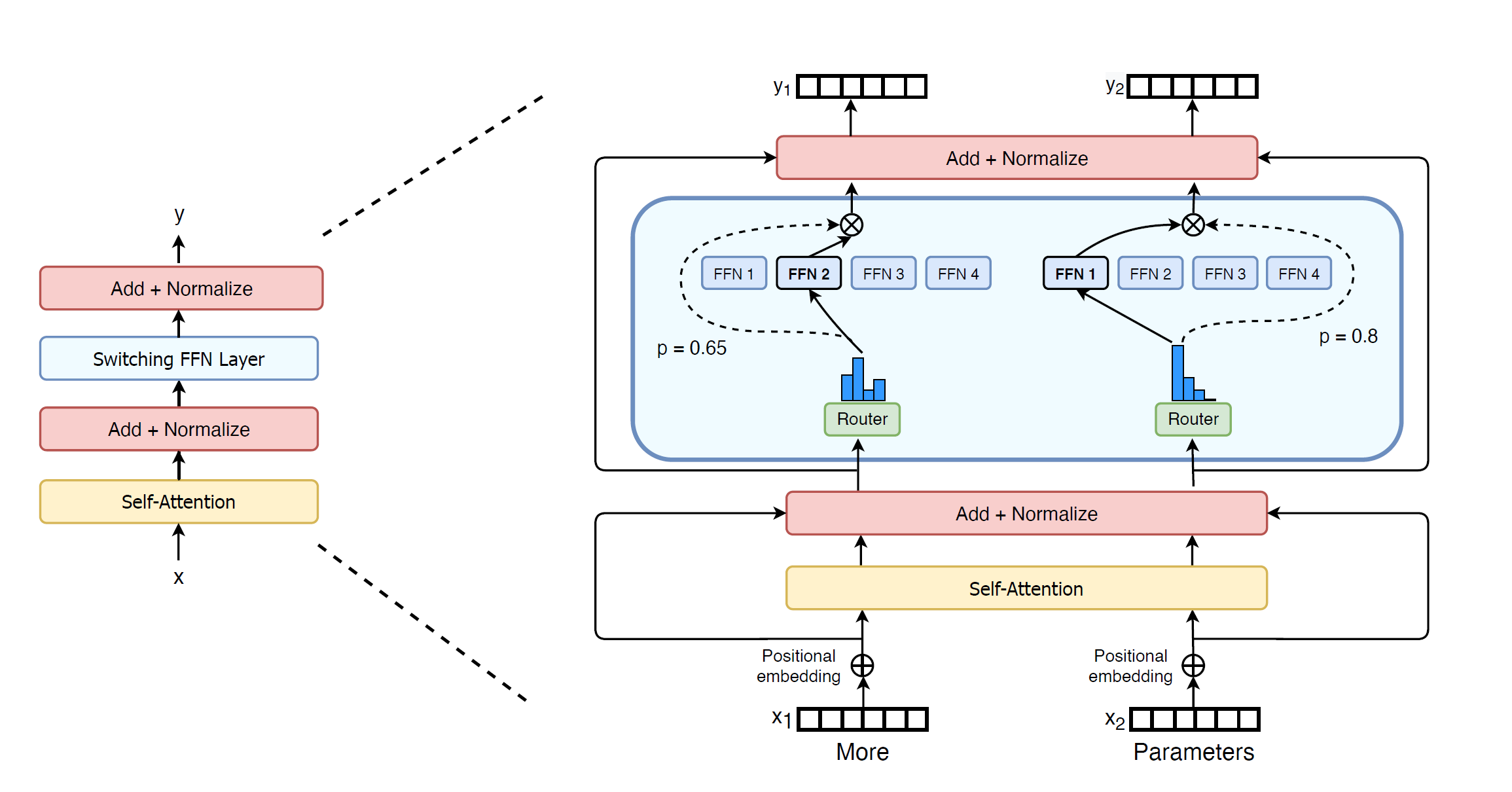

Precomputation vs. mechanisms at runtime

|

|

| Precomputed Knowledge |

Mechanisms at runtime |

|

|

Precomputation vs. mechanisms at runtime

|

|

| Precomputed Knowledge |

Mechanisms at runtime |

|

|

Could an AI system learn

Could an AI system learn

when a fortuitous encounter is

- interesting

- unexpected

- relevant

- tellable

- storyworthy

- thrilling?

Unexpected encounter according to GPT-3

- What would be an unexpected encounter?

An unexpected encounter could be finding a $20 bill on the ground.

- I mean with a person.

An unexpected encounter with a person could be meeting someone who is famous.

- And what about meeting someone far away?

An unexpected encounter with someone from far away could be meeting someone who is from a different country.

- I mean far away from home.

An unexpected encounter with someone far away from home could be meeting someone who is from a different state.

- I mean far away from my home.

An unexpected encounter with someone far away from my home could be meeting someone who is from a different city.

- What about bumping into a friend far away from home?

An unexpected encounter with a friend far away from home could be bumping into someone you know from high school or college.

- What would be an unexpected encounter?

An unexpected encounter could be meeting someone new and interesting, or it could be a situation that is out of the ordinary.

- Why?

This question can’t be answered without more information.

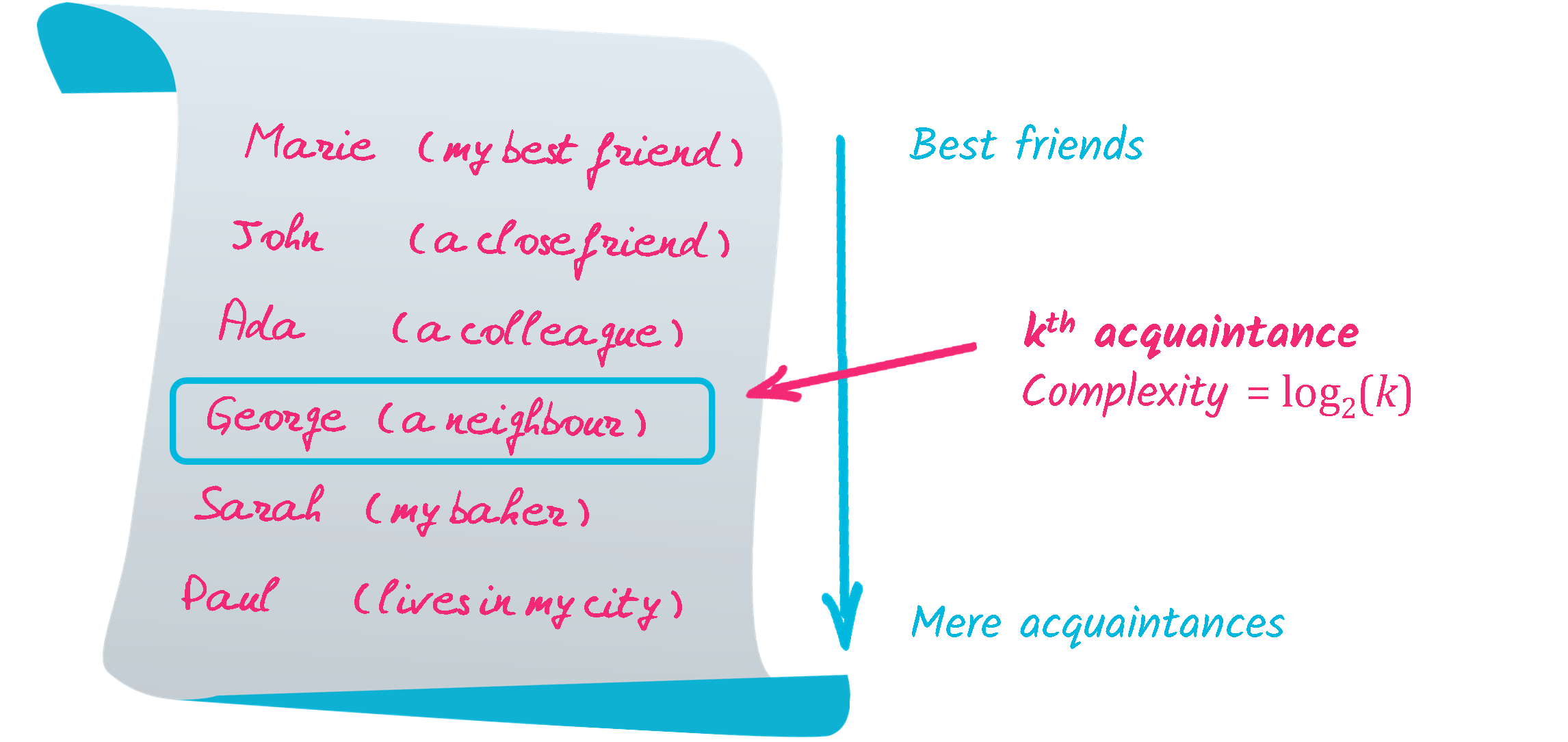

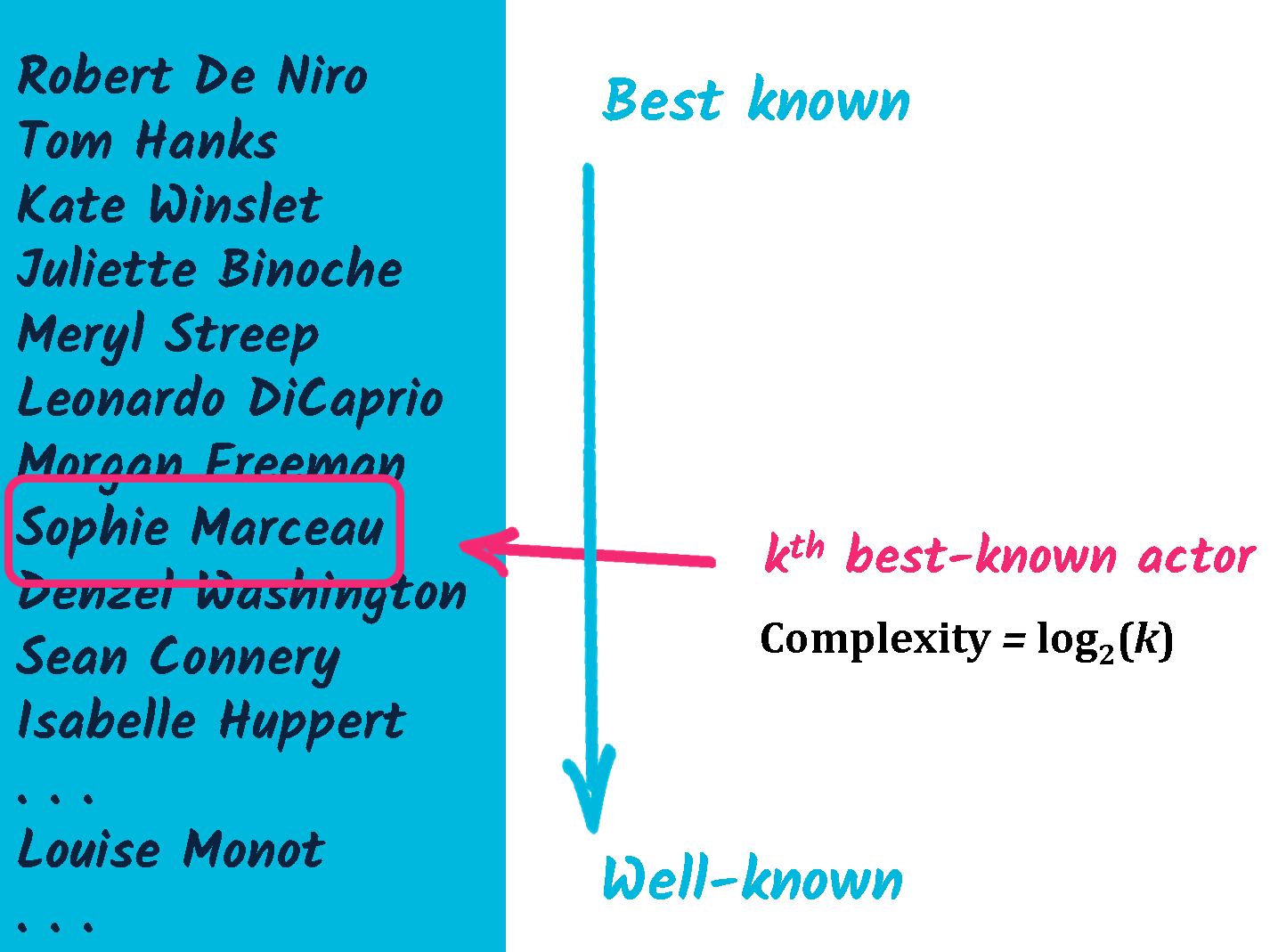

Unexpected encounter

GPT-3 does know that unexpected encounters would be with famous people or acquaintances far away from home.

But it does not know why!

GPT-3 does know that unexpected encounters would be with famous people or acquaintances far away from home.

But it does not know why!

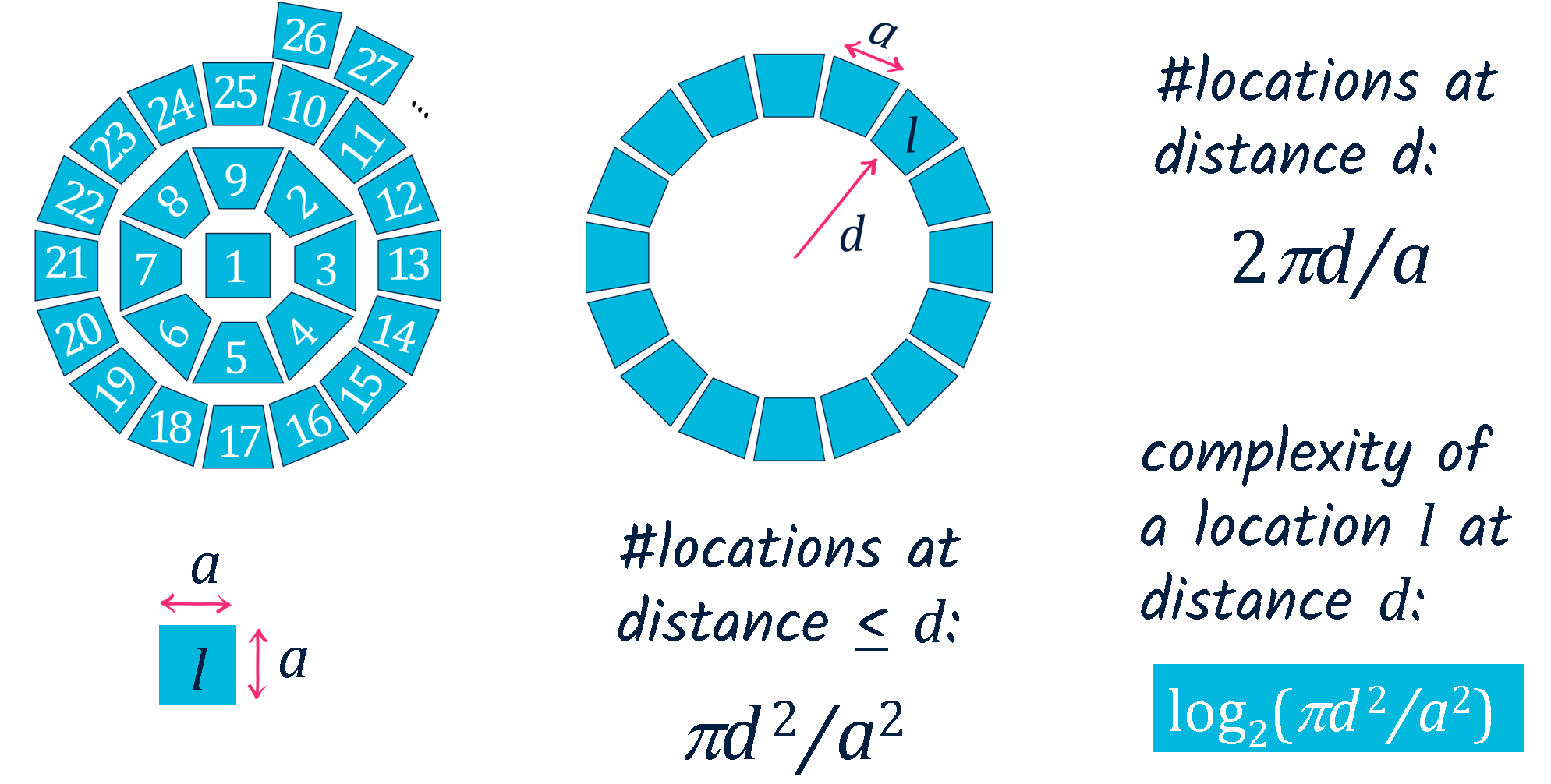

Does AIT know? Yes! Unexpectedness is due to complexity drop: $$U = C_{exp} - C_{obs}$$ In most cases: \(U = C(L) - C(P)\) L: location of the encounter

P: encountered person

Dessalles, J.-L. (2008). Coincidences and the encounter problem: A formal account. CogSci-2008, 2134-2139.

Unexpected encounter

Unexpected encounter

Unexpected encounter

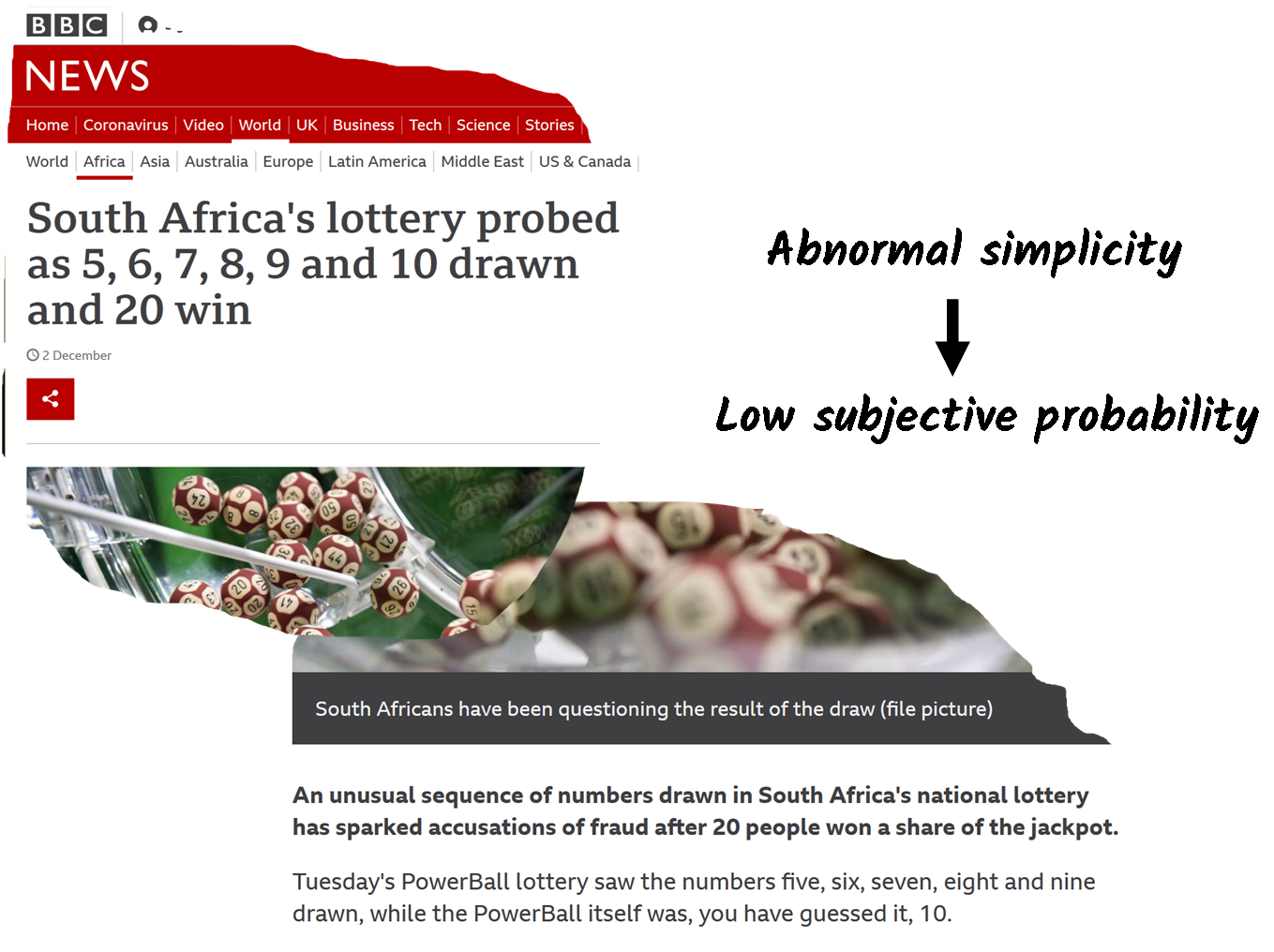

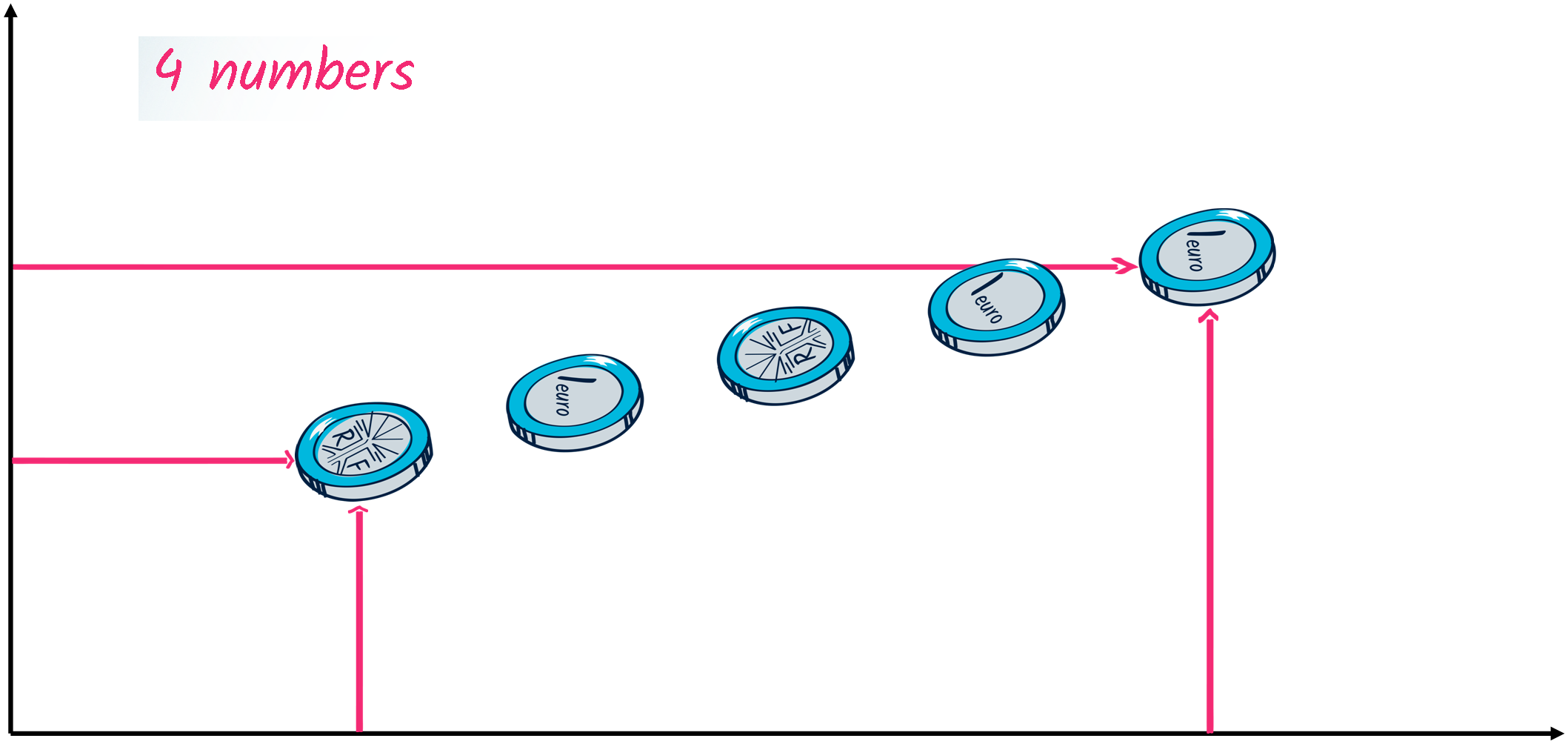

Complexity drop

Expected complexity: ≈ 6 * K(49)

Observed complexity: ≈ K(5) + K(’sequence’)

Expected complexity: ≈ 6 * K(49)

Observed complexity: ≈ K(5) + K(’sequence’)Complexity drop

On September 10th, 2009, the numbers 4, 15, 23, 24, 35, 42 were drawn by a machine live on the Bulgarian television. The event would have gone unnoticed, were it not that the exact same numbers had come up in the preceding round, a few days before.

On September 10th, 2009, the numbers 4, 15, 23, 24, 35, 42 were drawn by a machine live on the Bulgarian television. The event would have gone unnoticed, were it not that the exact same numbers had come up in the preceding round, a few days before.

Complexity drop

Complexity of the lottery round r0 by referring to the preceding one r-1:

C(r0) < C(r-1) + C(r0 | r-1) + O(1).

Since all lottery draws are recorded, r-1 is entirely determined by its rank, 1, in the list of past draws.

In the lottery context, C(r-1) can be as small as about 1 bit: C(r-1) ≈ C(1)

If the two draws contain the same numbers, C(r0 | r-1) = 0.

Complexity of the lottery round r0 by referring to the preceding one r-1:

C(r0) < C(r-1) + C(r0 | r-1) + O(1).

Since all lottery draws are recorded, r-1 is entirely determined by its rank, 1, in the list of past draws.

In the lottery context, C(r-1) can be as small as about 1 bit: C(r-1) ≈ C(1)

If the two draws contain the same numbers, C(r0 | r-1) = 0.

Complexity drop

C(r0) < C(r-1) + C(r0 | r-1) + O(1).

C(r0) < 1 + 0 + O(1)r0 appears utterly simple!

C(r0) < C(r-1) + C(r0 | r-1) + O(1).

C(r0) < 1 + 0 + O(1)r0 appears utterly simple!

This explains why the event is so impressive that it was mentioned in the international news.

Coincidences as complexity drop

"Creepy coincidences" between Abraham Lincoln and John F. Kennedy

"Creepy coincidences" between Abraham Lincoln and John F. Kennedy

Kern, K. & Brown, K. (2001). Using the list of creepy coincidences as an educational opportunity. The history teacher, 34 (4), 531-536.

- "Lincoln was elected to Congress in 1846, Kennedy was elected to Congress in 1946."

- "Lincoln was elected president in 1860, Kennedy was elected president in 1960."

- "Both presidents have been shot in the head on a Friday in presence of their wives."

- "Both successors were named Johnson, born in 1808 and 1908."

- "Kennedy was shot in a car named Lincoln“

- . . .

To be explained:

- Role of close analogy

- Role of round numbers

- Role of prominence

- Role of mere association

Coincidences as complexity drop

$$U(e_1 * e_2) = \color{#F32A73}{C_w(e_1 * e_2)} - \color{#00B8DE}{C(e_1 * e_2)}$$

$$U(e_1 * e_2) \ge \color{#F32A73}{C_w(e_1) + C_w(e_2)} - \color{#00B8DE}{C(e_1) - C(e_2|e_1)}$$

$$U(e_1 * e_2) \ge \color{#F32A73}{C(e_1)} - \color{#00B8DE}{C (e_2|e_1)}$$

Now explained:

$$U(e_1 * e_2) = \color{#F32A73}{C_w(e_1 * e_2)} - \color{#00B8DE}{C(e_1 * e_2)}$$

$$U(e_1 * e_2) \ge \color{#F32A73}{C_w(e_1) + C_w(e_2)} - \color{#00B8DE}{C(e_1) - C(e_2|e_1)}$$

$$U(e_1 * e_2) \ge \color{#F32A73}{C(e_1)} - \color{#00B8DE}{C (e_2|e_1)}$$

Now explained:

- Role of close analogy

- Role of round numbers

- Role of prominence

- Role of mere association

Dessalles, J.-L. (2008). Coincidences and the encounter problem: A formal account. CogSci-2008, 2134-2139.

Simplicity Theory

Simplicity Theory is about complexity drop.

From ST we can derive sub-theories and predictions about:

Simplicity Theory is about complexity drop.

From ST we can derive sub-theories and predictions about:

- The relevance of features

- Anomaly detection

- Interestingness (encounters, coincidences, stories)

- Subjective probability

- and much more...

Complexity drop: 6 numbers, ~ 50 bits

Simplicity Theory

Simplicity Theory is about complexity drop.

From ST we can derive sub-theories and predictions about:

Simplicity Theory is about complexity drop.

From ST we can derive sub-theories and predictions about:

- The relevance of features

- Anomaly detection

- Interestingness (encounters, coincidences, stories)

- Subjective probability

- and much more...

LeCun, Y. (2022). A Path Towards Autonomous Machine Intelligence.

OpenReview.net, Version 0.9.2, 2022-06-27.

LeCun, Y. (2022). A Path Towards Autonomous Machine Intelligence.

OpenReview.net, Version 0.9.2, 2022-06-27.www.lemonde.fr/pixels/article/2019/03/27/yann-lecun-laureat-du-prix-turing-l-intelligence-artificielle-continue-de-faire-des-progres-fulgurants_5441990_4408996.html

- complexity

- simplicity

- compression

- description length

but many mentions of

- (relevant) information

Conclusion

- Bayes’ curse

- Bound to continuous functions

- Isotropic bias

- "Guessing" machines (no understanding)

- off-line (canned expertise)

Conclusion

- (ideal) Minimum Description Length

- Compressionist view on ML

- Universal induction

- Ideal RL (AIXI)

Conclusion

- Analogies

- One-shot induction

- Complexity drop ➜ Simplicity Theory

- relevance

- interestingness

- anomaly detection (abnormal simplicity)

and much more

Conclusion

- MOOC on Algorithmic Information Theory

Dessalles, J.-L. (2021). Understanding Artificial Intelligence through Algorithmic Information Theory (MOOC). EdX.

- Paper on AI’s limits in the light of AIT

Dessalles, J.-L. (2019). From reflex to reflection: Two tricks AI could learn from us. Philosophies, 4 (2), 27.

- Paper on Complexity drop and relevance

More on

www.dessalles.fr

Dessalles, J.-L. (2013). Algorithmic simplicity and relevance. In D. L. Dowe (Ed.), Algorithmic probability and friends - LNAI 7070, 119-130. Berlin, D: Springer Verlag.

- Website of Simplicity Theory

These slides are available on www.dessalles.fr/slideshows