The dataset consists of multimodal recordings of Salsa dancers, captured at different sites with different pieces of equipment. It will be expanding over the next few weeks as new recording sessions are completed and working data files are made available.

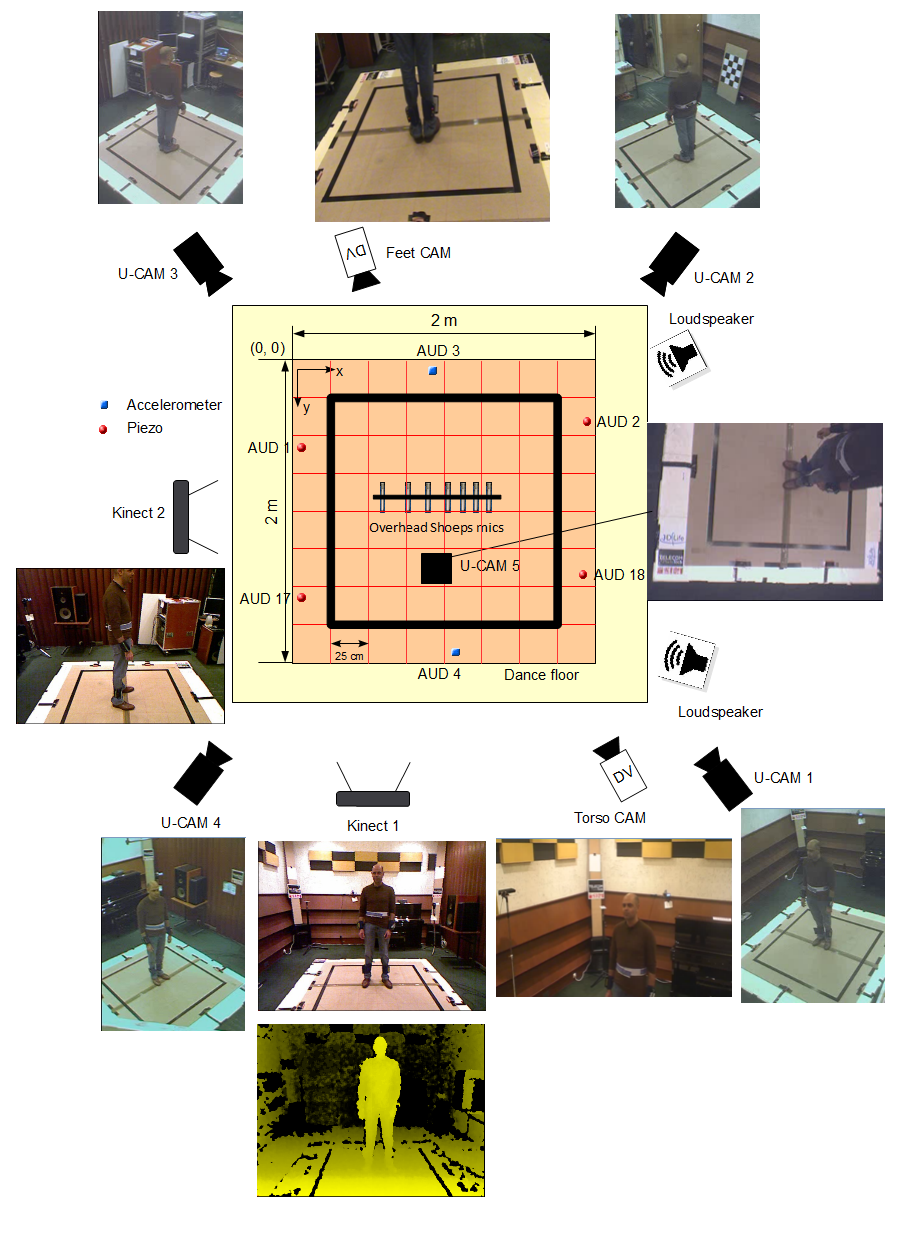

So far, 15 dancers, each performing 2 to 5 fixed choreographies, have been captured at the Telecom ParisTech recording studio. The data include:

- Synchronised 16-channel audio capture of dancers' step sounds, voice and music;

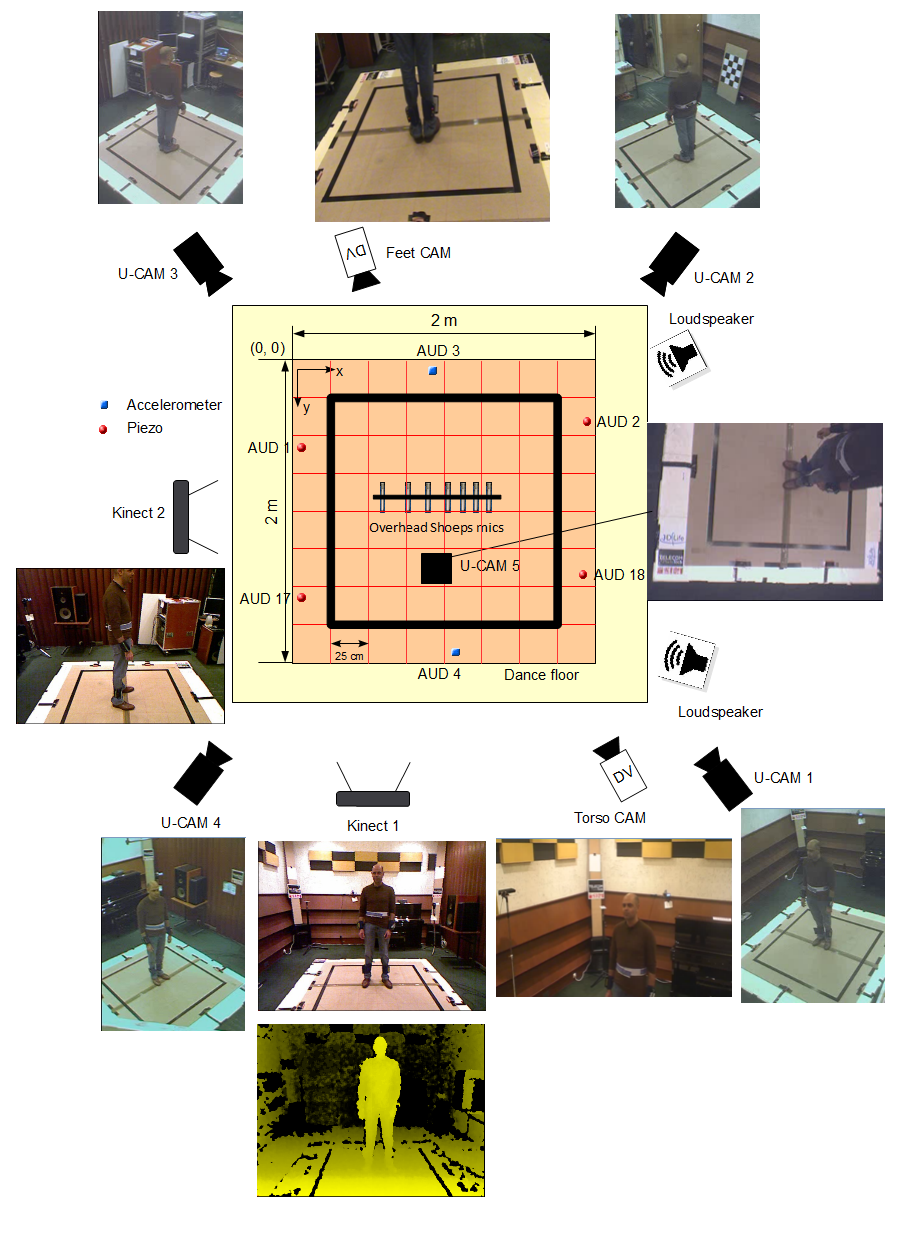

- Synchronised 5-camera video capture of the dancers from multiple viewpoints covering the whole body, plus 4 additional non-synchronised video captures: one mini DV camera (with audio) shooting the dancers' feet, a second mini DV camera (with audio) shooting the torso, one Kinect camera covering the whole body from the front, and a second Kinect covering the upper body from the side (see below for more details);

- Inertial (accelerometer + gyroscope + magnetometer) sensor data captured from multiple sensors on the dancer's body;

- Depth maps for dancers' performances captured using a Microsoft Kinect;

- Original music excerpts;

- Different types of ground-truth annotations, for instance annotations of the choreographies with reference step time codes relative to the music, and ratings of the dancers' performances by the Salsa teacher.

The formats of the different streams of data are given in the following table.

| Sensor data | Codec | Parameters |
|---|---|---|
| Audio signals | PCM WAV | Mono, 32 bits, 48000 Hz |
| Unibrain cameras (1 to 5) | Raw AVI from decompressed MJPEG | RGB 24 bits, 320x240, 30 fps |
| Mini DV cam. - Feet | Video: DV Video; Audio: PCM S16 | Video: 720x576, 25 fps; Audio: Stereo, 16 bits, 32000 Hz |
| Mini DV cam. - Torso | Video: DV Video; Audio: PCM S16 | Video: 720x576, 25 fps; Audio: Stereo, 16 bits, 48000 Hz |
| Kinects | OpenNI | |
| WIMU signals | ASCII | |
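As a rough guide to storage requirements, the uncompressed data rates implied by the table can be computed directly from the listed parameters. The sketch below assumes that the 16 audio channels are each stored as 32-bit, 48 kHz mono files and that the Unibrain AVI files hold uncompressed 24-bit RGB frames; WAV and AVI container overhead is ignored, so actual file sizes will differ slightly. The function names are illustrative only.

```python
def pcm_bytes_per_second(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Raw PCM data rate, ignoring WAV header overhead."""
    return channels * sample_rate_hz * bits_per_sample // 8


def raw_video_bytes_per_second(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video data rate, ignoring AVI container overhead."""
    return width * height * bits_per_pixel // 8 * fps


# Parameters taken from the table above.
audio_rate = pcm_bytes_per_second(channels=16, sample_rate_hz=48_000, bits_per_sample=32)
camera_rate = raw_video_bytes_per_second(width=320, height=240, bits_per_pixel=24, fps=30)
all_cameras_rate = 5 * camera_rate

for label, rate in [("16-channel audio", audio_rate),
                    ("one Unibrain camera", camera_rate),
                    ("five Unibrain cameras", all_cameras_rate)]:
    print(f"{label}: {rate:,} B/s, about {rate * 60 / 1e6:.0f} MB per minute")
```

Under these assumptions the audio amounts to about 3.1 MB/s and each Unibrain camera to about 6.9 MB/s.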

Recording setup

Audio setup

- 7 Schoeps omni-directional condenser microphones (overhead).

- 1 Sennheiser wireless lapel microphone (dancer's voice).

- Bruel & Kjaer 4374 piezoelectric accelerometers and a charge-conditioning amplifier unit with two independent input channels.

- Four acoustic-guitar internal Piezo transducers

- 2 Echo Audiofire Pre8 firewire digital audio interfaces. Accurate synchronisation between multiple Audiofire Pre8 units is achieved through Word Clock S/PDIF.

- A Debian-based server with a real-time patched kernel performs audio playback and recording. It runs an open-source software stack based on FFADO and JACK, along with a custom application for batch sound playback and recording.

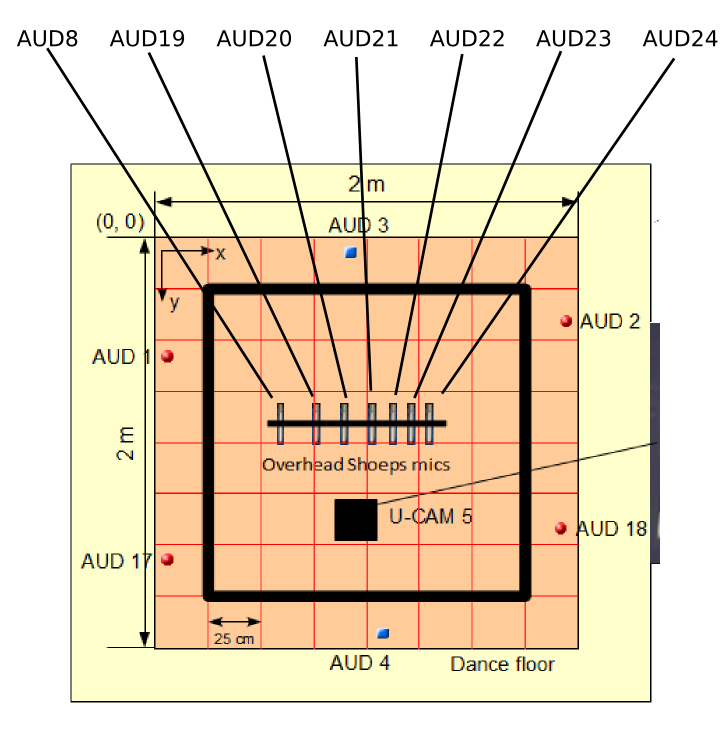

On-floor audio sensor positions are given in the following table.

| Sensor type | Audio channel | x, y coordinates (mm) |
|---|---|---|
| Piezo | 1 | 30, 550 |
| Piezo | 2 | 1970, 450 |
| B&K accelerometer | 3 | 950, 20 |
| B&K accelerometer | 4 | 1050, 1990 |
| Piezo | 17 | 30, 1560 |
| Piezo | 18 | 1970, 1460 |
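The sensor table can be turned into a small lookup structure. One possible use, sketched here as an assumption rather than a documented procedure, is to pick the on-floor channel closest to a known foot position (obtained, for instance, from the video or Kinect streams) when analysing step sounds. Channel numbers and coordinates come from the table and are assumed to share its coordinate frame; the function names are hypothetical.

```python
import math

# From the table: audio channel -> (sensor type, (x, y) in mm).
FLOOR_SENSORS = {
    1: ("Piezo", (30, 550)),
    2: ("Piezo", (1970, 450)),
    3: ("B&K accelerometer", (950, 20)),
    4: ("B&K accelerometer", (1050, 1990)),
    17: ("Piezo", (30, 1560)),
    18: ("Piezo", (1970, 1460)),
}


def channels_by_distance(x_mm, y_mm):
    """Audio channels sorted from nearest to farthest from the point (x_mm, y_mm)."""
    return sorted(FLOOR_SENSORS,
                  key=lambda ch: math.dist((x_mm, y_mm), FLOOR_SENSORS[ch][1]))


def nearest_floor_channel(x_mm, y_mm):
    """Single closest on-floor channel to the given point."""
    return channels_by_distance(x_mm, y_mm)[0]


print(nearest_floor_channel(1000, 1000), FLOOR_SENSORS[nearest_floor_channel(1000, 1000)][0])
```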

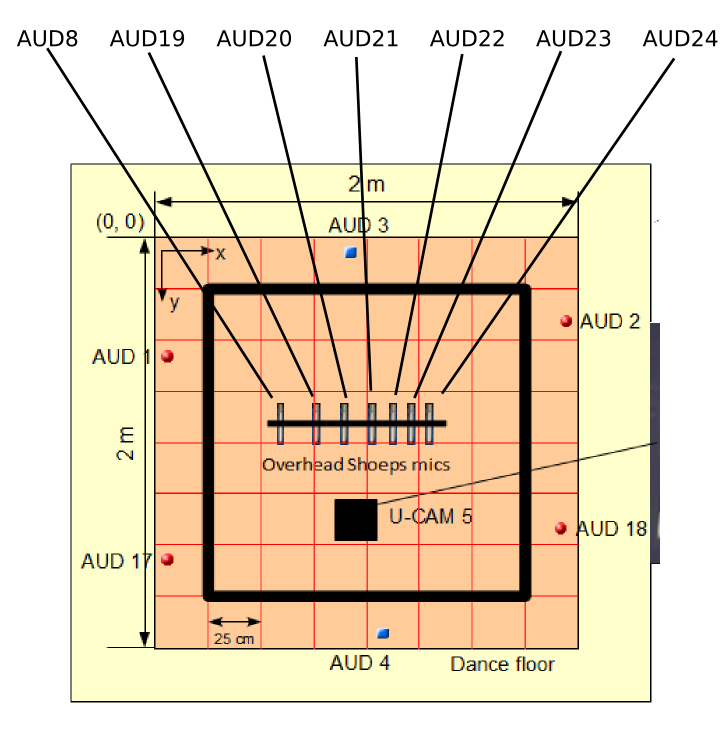

The mapping between the Schoeps microphones and audio channels is given in the figure below. These microphones are logarithmically spaced; from left to right, the distances between adjacent microphones are:

- Mic 8 and Mic 19: 39.5 cm;

- Mic 19 and Mic 20: 23 cm;

- Mic 20 and Mic 21: 13.5 cm;

- Mic 21 and Mic 22: 8 cm;

- Mic 22 and Mic 23: 4.5 cm;

- Mic 23 and Mic 24: 2.5 cm.
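Accumulating these spacings gives each microphone's position along the array, taking Mic 8 as the origin (an arbitrary choice made here for illustration). The sketch also checks that each spacing is a roughly constant fraction (between 0.55 and 0.6) of the previous one, which is what the logarithmic spacing amounts to, and computes the usual spatial-aliasing limit c / (2d) for each adjacent pair, assuming a speed of sound of 343 m/s. That last quantity is a standard array-processing rule of thumb, not something stated for this dataset.

```python
# Adjacent spacings from the list above, left to right, in cm.
SPACINGS_CM = [
    ("Mic 8", "Mic 19", 39.5),
    ("Mic 19", "Mic 20", 23.0),
    ("Mic 20", "Mic 21", 13.5),
    ("Mic 21", "Mic 22", 8.0),
    ("Mic 22", "Mic 23", 4.5),
    ("Mic 23", "Mic 24", 2.5),
]
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at about 20 degrees C


def mic_positions_cm(spacings):
    """Cumulative position of each microphone, with the leftmost one at 0 cm."""
    positions = {spacings[0][0]: 0.0}
    for left, right, d in spacings:
        positions[right] = positions[left] + d
    return positions


def spacing_ratios(spacings):
    """Ratio of each spacing to the previous one (constant for geometric spacing)."""
    d = [s for _, _, s in spacings]
    return [b / a for a, b in zip(d, d[1:])]


def aliasing_limit_hz(spacing_cm, c=SPEED_OF_SOUND_M_S):
    """Rule-of-thumb spatial-aliasing frequency c / (2 d) for a microphone pair."""
    return c / (2 * spacing_cm / 100)


positions = mic_positions_cm(SPACINGS_CM)
print(positions)
print([round(r, 3) for r in spacing_ratios(SPACINGS_CM)])
for left, right, d in SPACINGS_CM:
    print(f"{left}-{right}: {aliasing_limit_hz(d):.0f} Hz")
```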

Video setup

The equipment consisted of 5 FireWire CCD cameras (Unibrain Fire-i Color Digital Board Cameras) connected to a server running the Unibrain recording software.

Inertial measurement units

Data from inertial measurement units (IMUs; see image below) were also captured with each sequence. Five IMUs were placed on each dancer: one on each forearm, one on each ankle, and one above the hips. Each IMU provides time-stamped accelerometer, gyroscope and magnetometer data at its location for the duration of the session.
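The column layout of the WIMU ASCII files is not documented here, so the following reader is only a sketch under an assumed layout: one whitespace-separated sample per line, a timestamp in seconds followed by three accelerometer, three gyroscope and three magnetometer values. The column names, units and helper functions are hypothetical and should be adapted to the actual files. The sketch shows how the time stamps can be used to estimate each sensor's effective sampling rate.

```python
import io
import math

# HYPOTHETICAL layout: adjust to the real WIMU files.
# t in seconds, then accelerometer (x, y, z), gyroscope (x, y, z), magnetometer (x, y, z).
COLUMNS = ("t", "ax", "ay", "az", "gx", "gy", "gz", "mx", "my", "mz")


def read_imu_ascii(stream):
    """Parse whitespace-separated IMU samples, skipping headers and malformed lines."""
    samples = []
    for line in stream:
        fields = line.split()
        if len(fields) != len(COLUMNS):
            continue
        try:
            values = [float(f) for f in fields]
        except ValueError:
            continue  # e.g. a text header with the right number of fields
        samples.append(dict(zip(COLUMNS, values)))
    return samples


def effective_rate_hz(samples):
    """Average sampling rate implied by the first and last time stamps."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    duration = samples[-1]["t"] - samples[0]["t"]
    return (len(samples) - 1) / duration


def accel_magnitude(sample):
    """Norm of the accelerometer vector (in whatever units the file uses)."""
    return math.sqrt(sample["ax"] ** 2 + sample["ay"] ** 2 + sample["az"] ** 2)


example = io.StringIO(
    "t ax ay az gx gy gz mx my mz\n"
    "0.00 0 0 9.81 0 0 0 0.2 0 0.4\n"
    "0.01 3 4 0 0 0 0 0.2 0 0.4\n"
    "0.02 0 0 9.81 0 0 0 0.2 0 0.4\n"
)
samples = read_imu_ascii(example)
print(len(samples), effective_rate_hz(samples), accel_magnitude(samples[1]))
```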

Music and choreographies

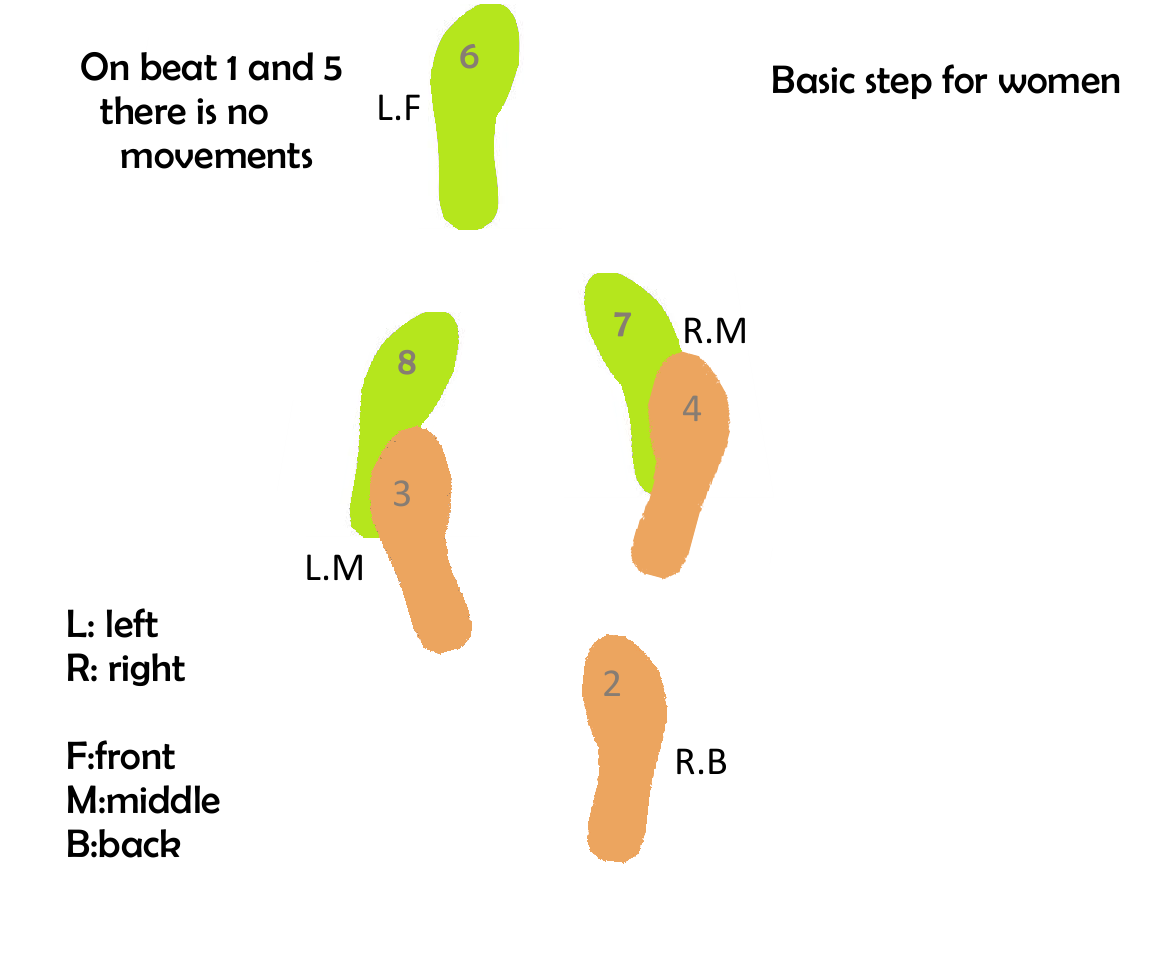

So far 15 dancers have been recorded (6 women and 9 men). Bertrand is the reference dancer for men and Anne-Sophie K. the reference dancer for women, in the sense that their performances serve as the "templates" the other dancers are expected to follow.

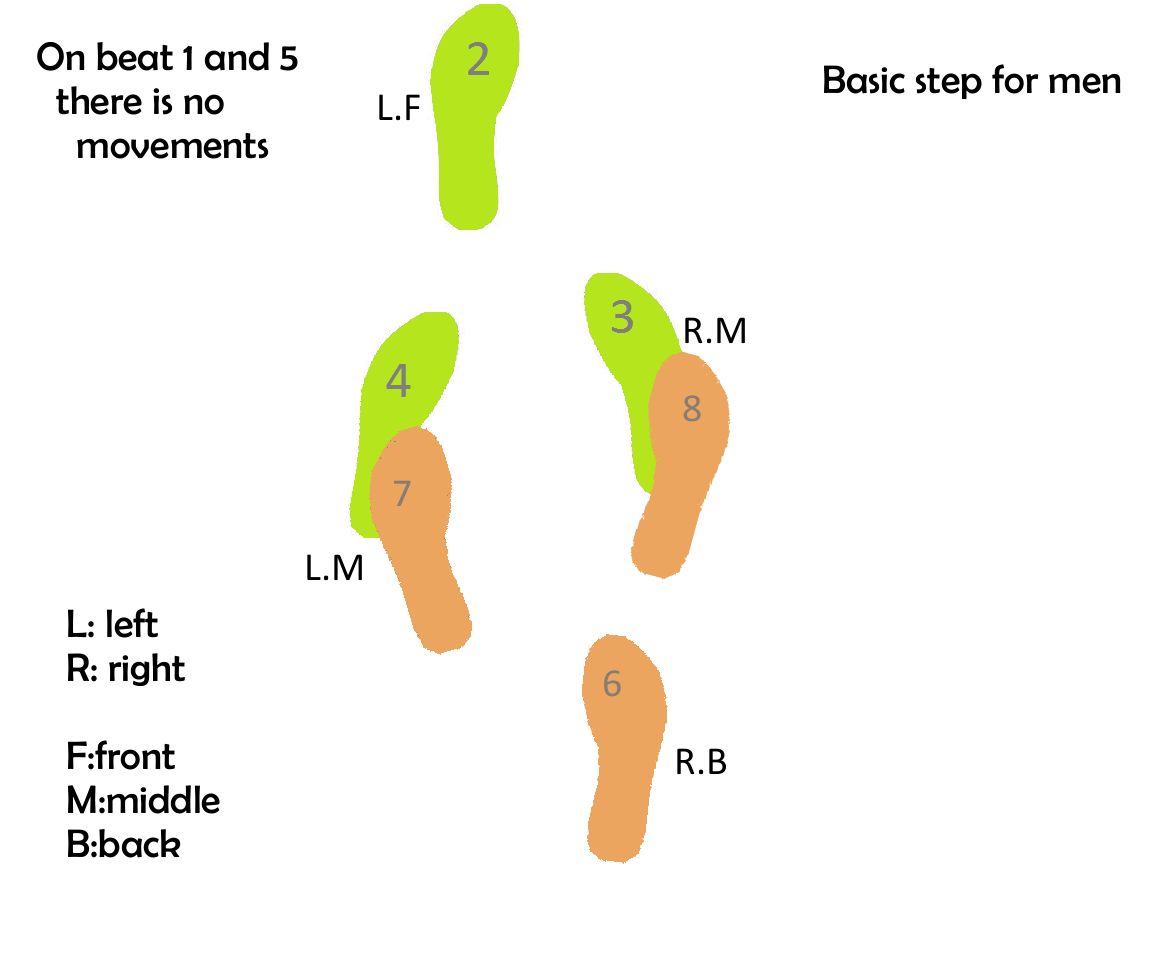

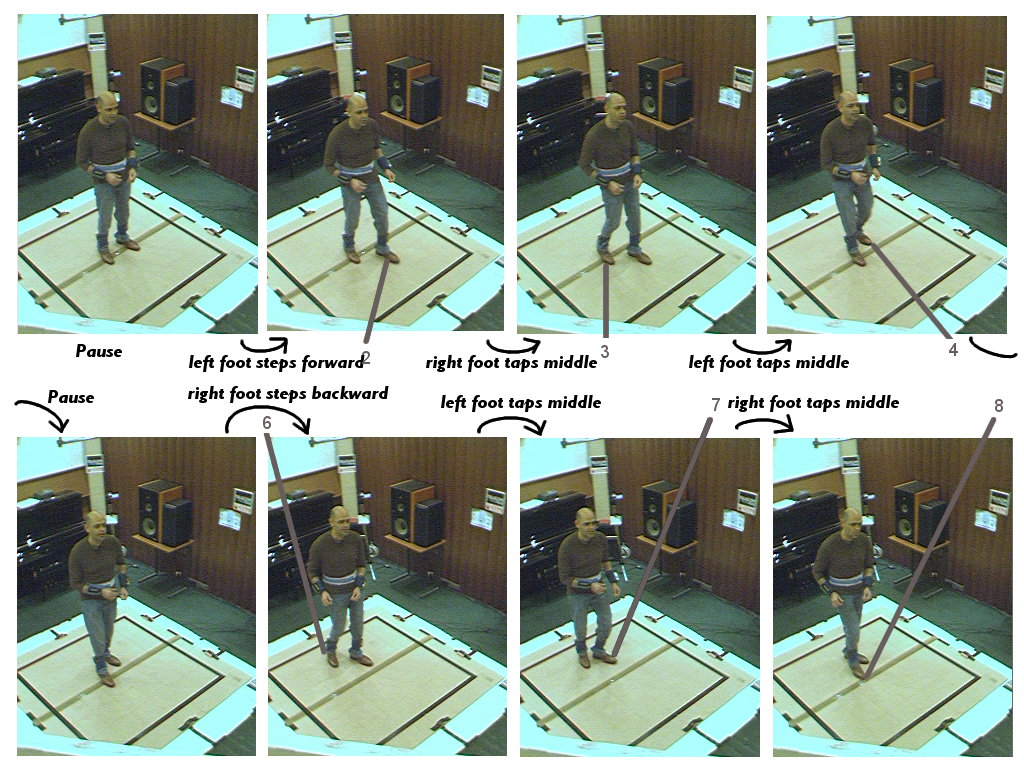

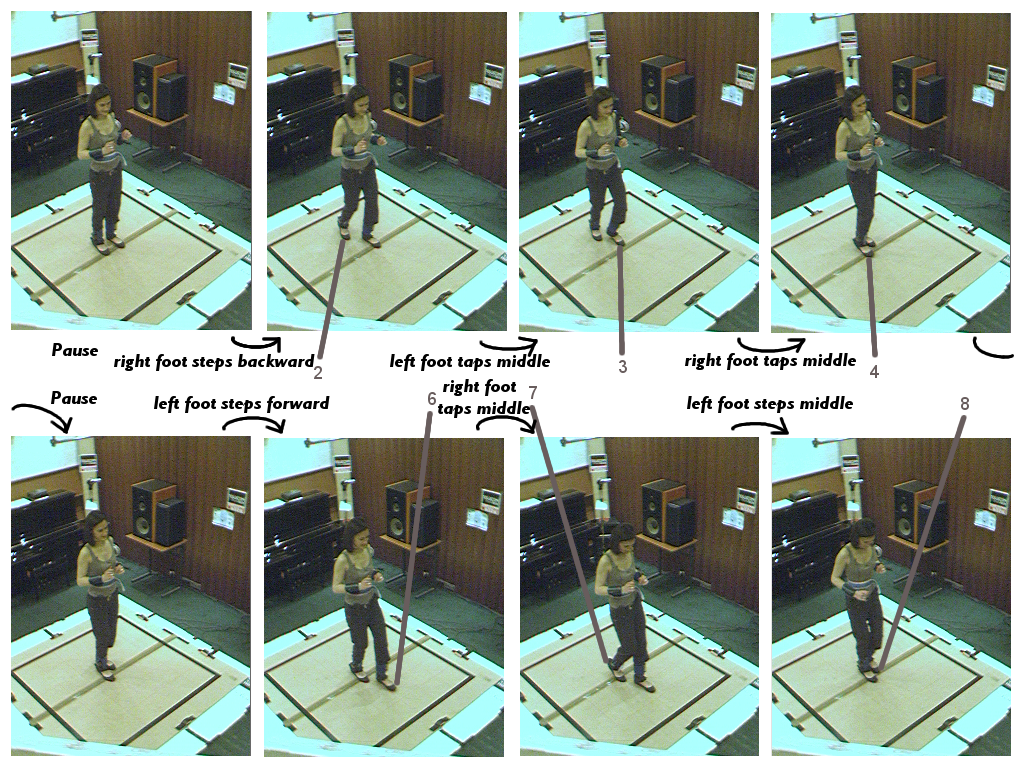

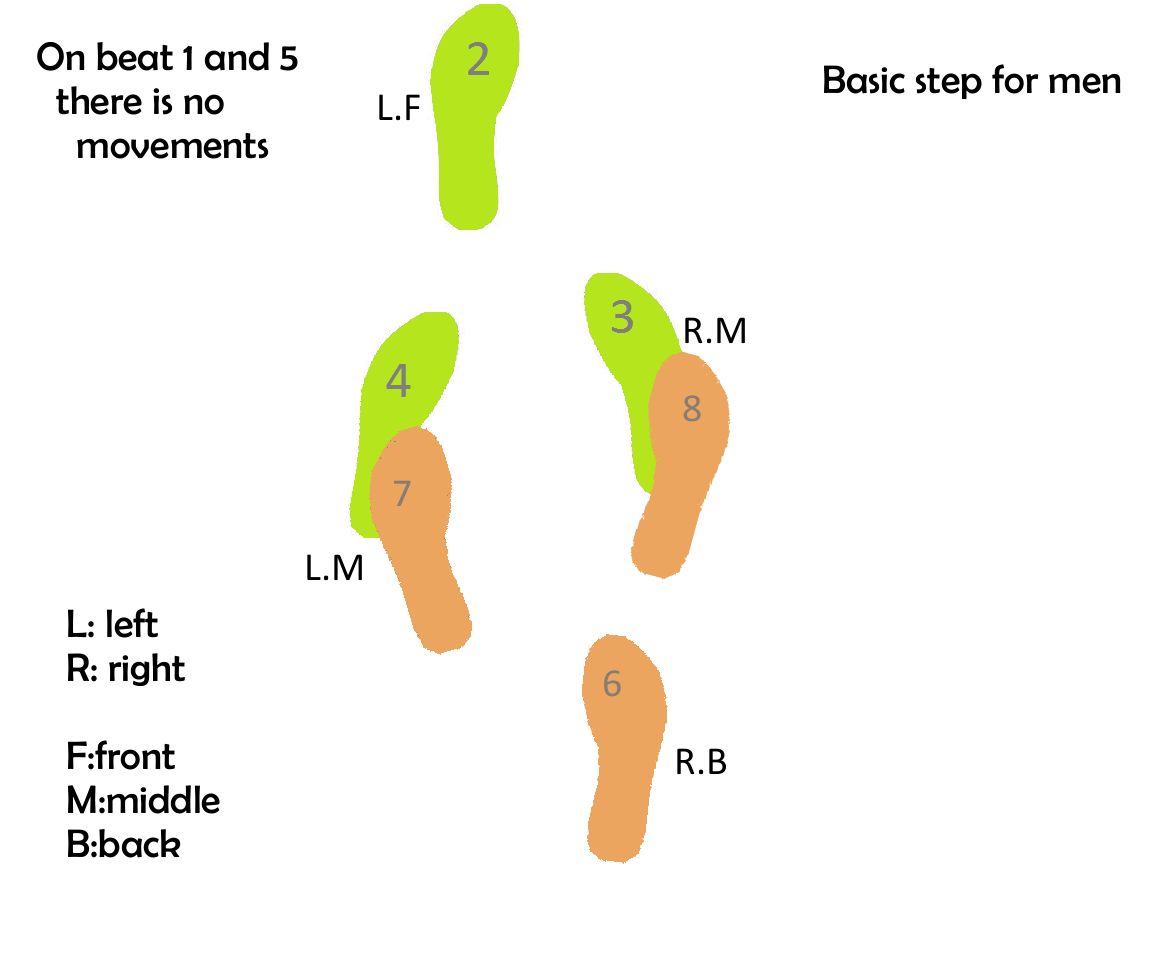

Each dancer performs 2 to 5 solo Salsa choreographies from a set of 5 predefined ones, roughly described as follows:

- C1: 4 Salsa basic steps (over two 8-beat bars), danced without music; instead, the dancer counts the steps aloud ("1, 2, 3, 4, 5, 6, 7, 8, 1, ..., 8") in French or English.

- C2: 4 basic steps, 1 right turn, 1 cross-body; danced on a Son clave excerpt.

- C3: 5 basic steps, 1 Suzie Q, 1 double-cross, 2 basic steps; danced on Salsa music excerpt labelled C3.

- C4: 4 basic steps, 1 Pachanga tap, 1 basic step, 1 swivel tap, 2 basic steps; danced on Salsa music excerpt labelled C4.

- C5: a special case, as it is a solo performance mimicking a duo: the girl or the boy performs alone the movements that are meant to be executed with a partner. The movements are: 2 basic steps, 1 cross-body, 1 girl right turn, 1 boy right turn with hand swapping, 1 girl right turn with a caress, 1 cross-body, 2 basic steps; danced on Salsa music excerpt labelled C5.
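For scripting, for instance to label segments or to sanity-check annotation files, the choreographies listed above can be encoded as ordered (movement, repetitions) lists. The movement names simply transcribe the list; the data structure itself is an illustrative assumption, not the format used in the data repository, and movement durations are deliberately left out because they are not given here.

```python
# Transcription of the choreography list; movement names are plain labels.
CHOREOGRAPHIES = {
    "C1": [("basic step", 4)],  # no music, steps counted aloud
    "C2": [("basic step", 4), ("right turn", 1), ("cross-body", 1)],
    "C3": [("basic step", 5), ("Suzie Q", 1), ("double-cross", 1), ("basic step", 2)],
    "C4": [("basic step", 4), ("Pachanga tap", 1), ("basic step", 1),
           ("swivel tap", 1), ("basic step", 2)],
    "C5": [("basic step", 2), ("cross-body", 1), ("girl right turn", 1),
           ("boy right turn with hand swapping", 1),
           ("girl right turn with a caress", 1), ("cross-body", 1), ("basic step", 2)],
}


def expand(name):
    """Flatten a choreography into its ordered sequence of individual movements."""
    return [move for move, reps in CHOREOGRAPHIES[name] for _ in range(reps)]


for name in CHOREOGRAPHIES:
    seq = expand(name)
    print(name, len(seq), "movements,", seq.count("basic step"), "basic steps")
```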

Whenever possible, a real duo rendering of choreography C5 was also captured; it is referred to as C6 in the data repository.

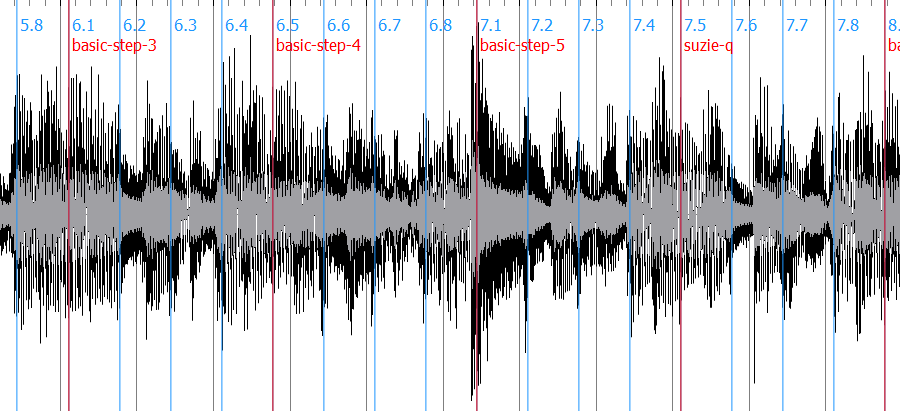

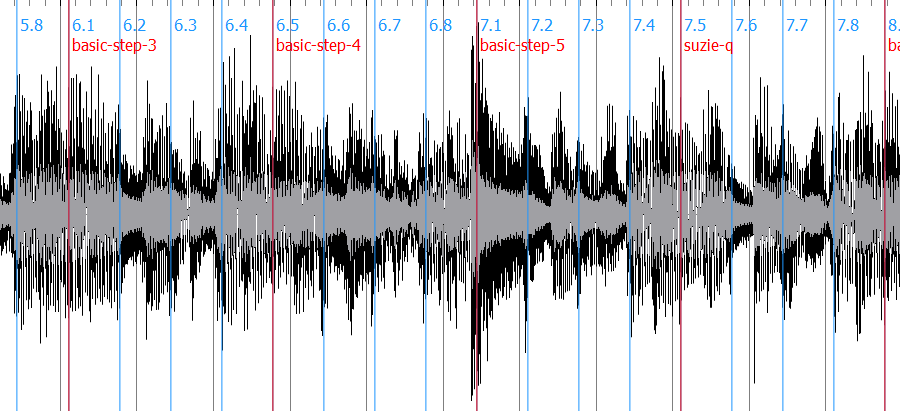

The dancers were instructed to execute these choreographies with the same musical timing, i.e. all dancers are expected to synchronise their steps and movements to specific music beats. A manual annotation of the ideal timing of the dance movements relative to the music is provided along with the original music excerpts. The following figure shows a snapshot of this annotation together with visualisations of the timing of the basic steps. Note that the dancers were asked to perform a Puerto Rican variant of Salsa and are expected to dance "on two".

Audio excerpt C3 annotation with choreography movements timing (in red) along with bars and beats (in blue)

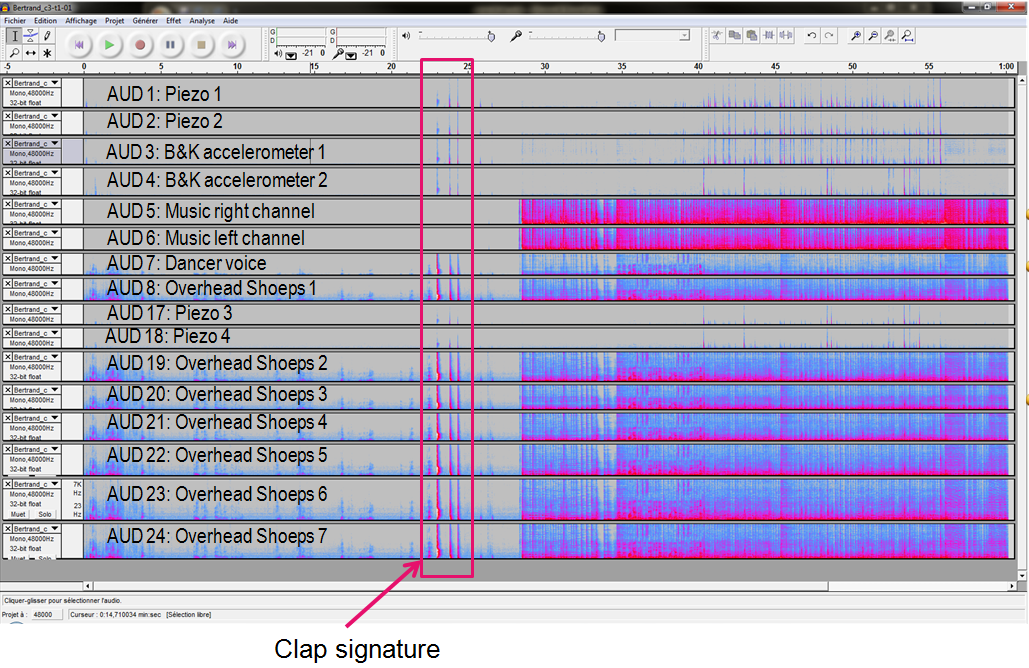

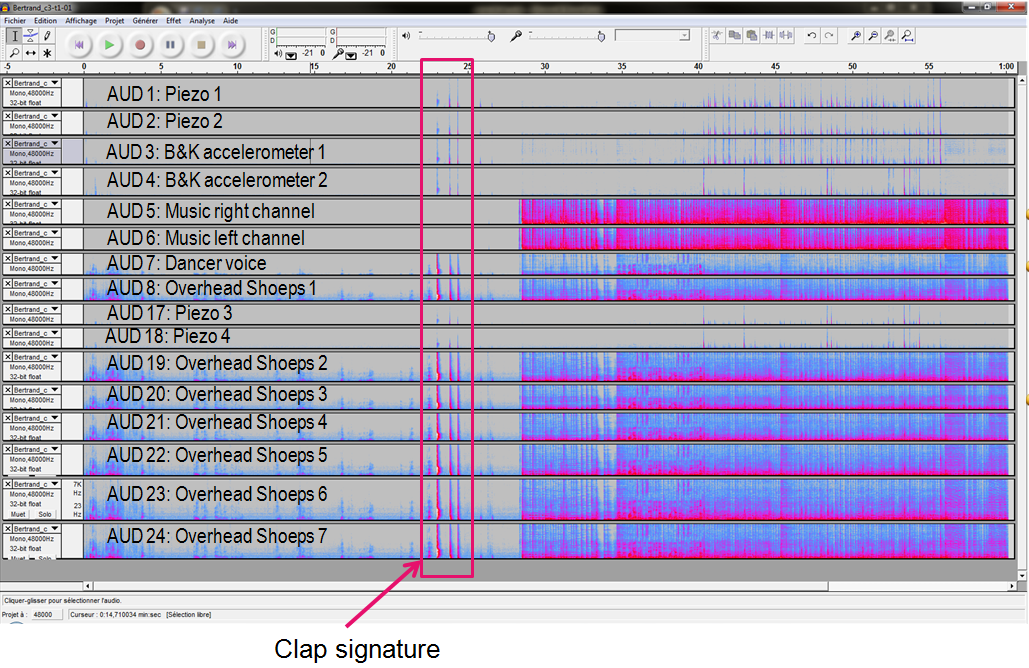
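Because every dancer is expected to hit the same annotated instants, one simple way to quantify timing accuracy (an illustrative choice, not the procedure behind the teacher's ratings) is to match each reference movement time from the annotation to the nearest detected step onset and measure the signed offset. The onset times are assumed to come from a separate onset detector applied to, for example, the on-floor sensor channels; that detector is not shown, and the function name is hypothetical.

```python
import numpy as np


def timing_offsets(reference_times, onset_times):
    """Signed offset (s) from each reference time to its nearest detected onset.

    Positive values mean the dancer was late, negative values early.
    """
    ref = np.asarray(reference_times, dtype=float)
    det = np.sort(np.asarray(onset_times, dtype=float))
    if det.size == 0:
        raise ValueError("no onsets detected")
    # Candidate neighbours on either side of each reference time.
    idx = np.searchsorted(det, ref)
    left = np.clip(idx - 1, 0, det.size - 1)
    right = np.clip(idx, 0, det.size - 1)
    use_left = np.abs(ref - det[left]) <= np.abs(det[right] - ref)
    nearest = np.where(use_left, det[left], det[right])
    return nearest - ref


offsets = timing_offsets([1.0, 2.0, 3.0], [1.05, 1.98, 3.10])
print(offsets, "mean absolute deviation:", np.mean(np.abs(offsets)))
```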

Synchronisation, calibration and ground-truth annotations

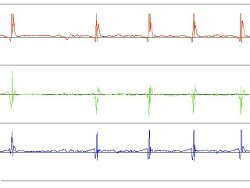

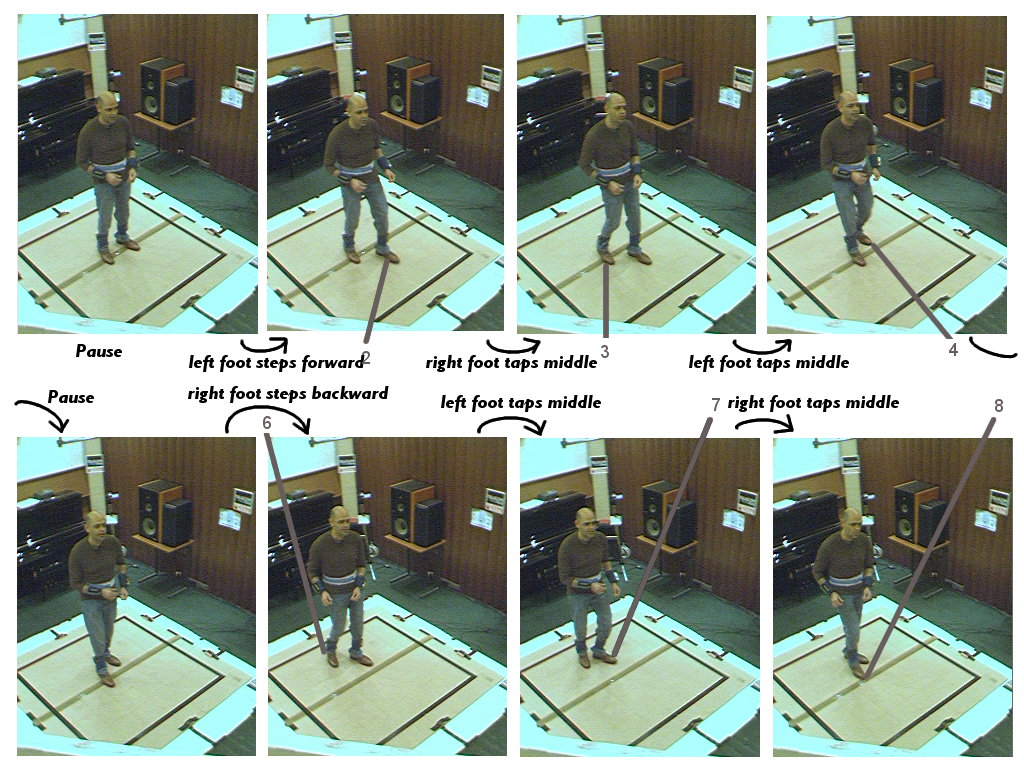

While the signals captured by some subsets of sensors are perfectly synchronised, namely all audio channels (except the audio streams of the mini DV cameras) and the 5 Unibrain camera videos, synchronisation is not ensured across all data streams. To mitigate this, all dancers were instructed to execute a "clap procedure" before starting their performance, in which they successively clap their hands and tap the floor with each foot. The start times of the data streams can therefore be aligned (either manually or automatically) by matching the clap signatures, which are clearly visible within the first 2 seconds of every data stream (see, for instance, the clap signatures in the audio signals snapshot above or the image below).

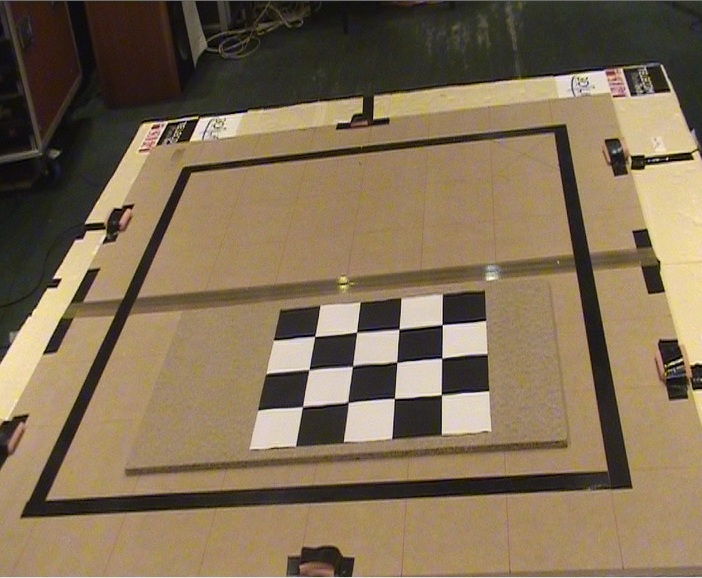
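Automatic alignment of the clap signatures can be sketched as a cross-correlation over the first 2 seconds of two streams. The sketch assumes both inputs have already been converted to a common sampling rate `sr` (for example, an audio channel and an IMU acceleration magnitude resampled to the same rate); rectifying and mean-removing the signals lets the clap and foot-tap transients dominate the correlation. The function name and the FFT-based implementation are choices made here for illustration, not the authors' method.

```python
import numpy as np


def clap_offset_seconds(ref, other, sr, window_s=2.0):
    """Estimate how much later (s) the clap pattern occurs in `other` than in `ref`.

    Both signals must share the sampling rate `sr`; only the first `window_s`
    seconds are used. A positive result means `other` lags behind `ref`.
    """
    n = int(round(window_s * sr))
    # Rectified, mean-removed signals: claps and taps become positive peaks.
    a = np.abs(np.asarray(ref[:n], dtype=float))
    b = np.abs(np.asarray(other[:n], dtype=float))
    a -= a.mean()
    b -= b.mean()
    # FFT-based cross-correlation, zero-padded so there is no circular wrap-around.
    size = len(a) + len(b) - 1
    nfft = 1 << (size - 1).bit_length()
    corr = np.fft.irfft(np.fft.rfft(b, nfft) * np.conj(np.fft.rfft(a, nfft)), nfft)
    idx = int(np.argmax(corr))
    # Indices >= len(b) hold negative lags, stored at the end of the buffer.
    lag = idx if idx < len(b) else idx - nfft
    return lag / sr


sr = 1000
ref = np.zeros(3000)
ref[500] = 1.0    # clap at 0.5 s in the reference stream
other = np.zeros(3000)
other[700] = 1.0  # same clap at 0.7 s in the other stream
print(clap_offset_seconds(ref, other, sr))
```

The estimated offset can then be subtracted from the time axis of `other` to bring it into line with `ref`.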

Camera calibration data are provided, consisting of images of a calibration shape (see images below).

The ground-truth annotations include:

- Manual annotations of the music in terms of beats, given in Sonic Visualiser (.svl) format and ASCII (.csv) format;

- A manual annotation of the music in terms of the ideal timing of the dance movements, given in Sonic Visualiser (.svl) format and ASCII (.csv) format;

- Ratings of each dancer's performance by the teacher, Bertrand.
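The exact column layout of the .csv annotation files is not specified here. Assuming each row starts with a time instant in seconds, optionally followed by a label, the beat annotations can be loaded and turned into a tempo estimate from the median inter-beat interval. The layout and the function names are assumptions for illustration and should be checked against the actual files.

```python
import csv
import io
import statistics


def read_time_instants(stream):
    """Read the first column of each CSV row as a time in seconds; skip non-numeric rows."""
    times = []
    for row in csv.reader(stream):
        if not row:
            continue
        try:
            times.append(float(row[0]))
        except ValueError:
            continue  # header line or stray text
    return sorted(times)


def tempo_bpm(beat_times):
    """Tempo from the median inter-beat interval (tolerates a few irregular beats)."""
    if len(beat_times) < 2:
        raise ValueError("need at least two beats")
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    return 60.0 / statistics.median(intervals)


example = io.StringIO("0.0,1\n0.5,2\n1.0,3\n1.5,4\n")
beats = read_time_instants(example)
print(tempo_bpm(beats))
```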