IGD301: VR Puppet locomotion technique

January, 2021

This project consisted of designing, implementing and evaluating a locomotion technique. It was part of the IGD301 class at Institut Polytechnique de Paris, and was supervised by Jan Gugenheimer. You can find the code and the APK here.

My idea is to use the metaphor of the puppet. The user controls a puppet with their fingers and moves it around its environment. The user is not only the puppeteer but also the puppet, and can therefore switch between two views: that of the puppet they embody, and that of the puppeteer, outside the scene. The purpose of this outside view is to offer a way to escape motion sickness if necessary.

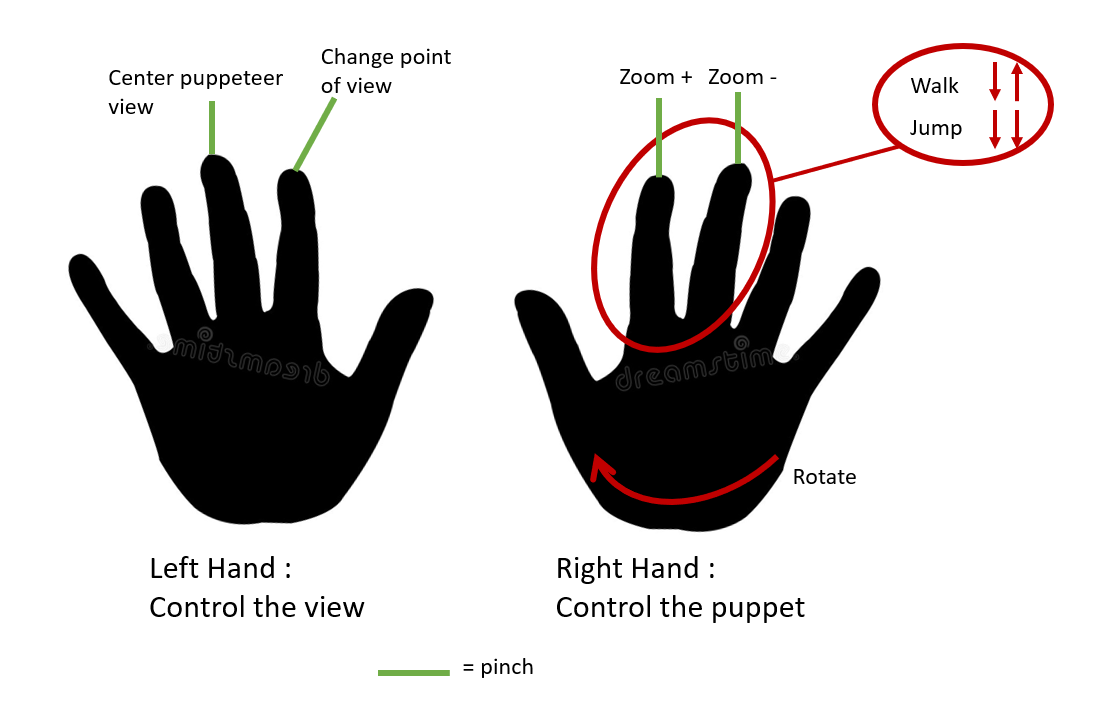

Here is a summary of how this locomotion technique works :

- Move forward : step movement with the right hand (index and middle finger)

- Jump : jumping movement with the right hand (index and middle finger)

- Turn : In puppeteer mode, turn your right hand. In puppet mode, turn your head.

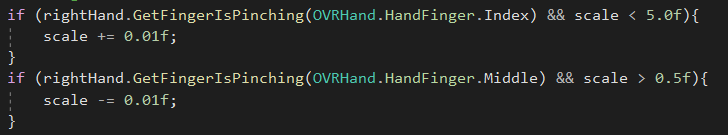

- Increase/Decrease the size of the puppet : Pinch right index/middle finger

- Change point of view : pinch left index finger

- Center point of view : pinch left middle finger

Implementation

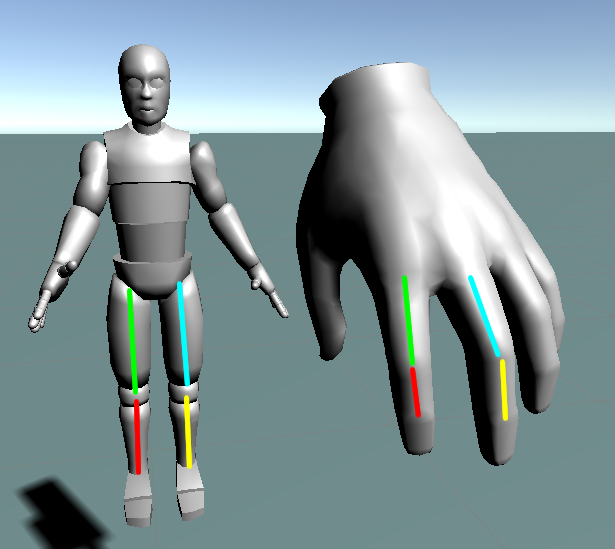

Step 1 : Associate finger movement to the movement of the puppet

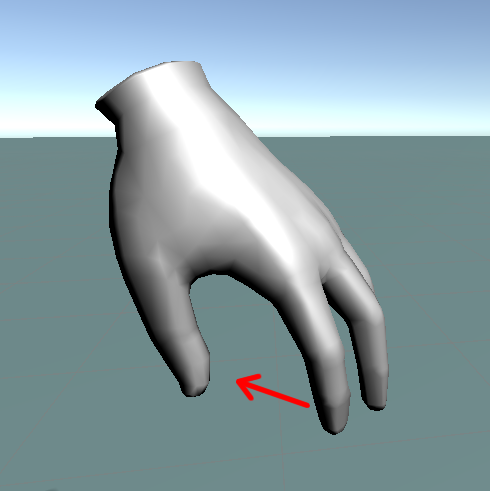

The first step to build this puppet is to associate the movement of the user’s fingers with the movement of the puppet’s legs. The left thigh of the puppet corresponds to the first bone of the index finger, and the left tibia to the second bone of the index finger. The right thigh corresponds to the first bone of the middle finger of the hand, and the right tibia to the second bone of the middle finger.

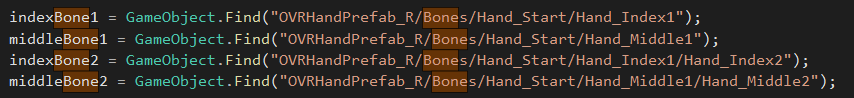

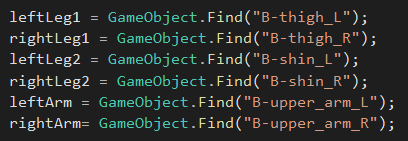

We can get each necessary bone :

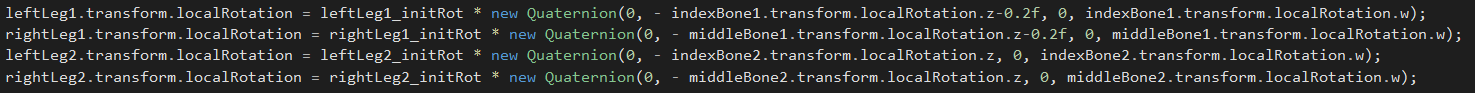

Since the fingers have the same degrees of freedom as the legs (if we ignore the third bone of the fingers), we just have to assign the rotations of the hand’s bones to the rotations of the puppet’s thighs and tibias.

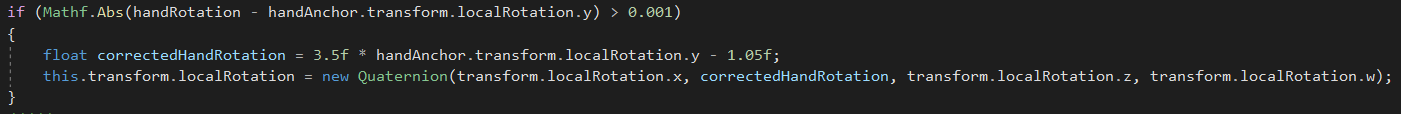
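To make the mapping concrete, here is a minimal sketch in plain Python rather than in the project's engine code. The bone names, the quaternion tuple type and the `update_legs` helper are all hypothetical labels chosen for illustration. Only the finger-to-leg correspondence comes from the description above.

```python
# Rotation stored as a quaternion (x, y, z, w); identity means "no rotation".
Quaternion = tuple[float, float, float, float]
IDENTITY: Quaternion = (0.0, 0.0, 0.0, 1.0)

# Finger bone -> leg bone, following the correspondence described in Step 1.
# The bone names are hypothetical labels, not identifiers from the project.
FINGER_TO_LEG = {
    "index_1": "left_thigh",
    "index_2": "left_tibia",
    "middle_1": "right_thigh",
    "middle_2": "right_tibia",
}


def update_legs(hand_rotations: dict[str, Quaternion],
                leg_rotations: dict[str, Quaternion]) -> None:
    """Copy each tracked finger-bone rotation onto the matching leg bone."""
    for finger_bone, leg_bone in FINGER_TO_LEG.items():
        if finger_bone in hand_rotations:  # skip bones the tracker did not report
            leg_rotations[leg_bone] = hand_rotations[finger_bone]
```

Calling `update_legs` once per frame with the latest hand pose would keep the legs in sync with the fingers. The third finger bone is simply never read.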

Step 2 : Rotate the puppet

We must now be able to orient the puppet with the hand. We want any orientation to be possible, so the puppet must be able to rotate 360°. However, we can’t turn our hand 360°, so we have to amplify this movement. We calculate the new rotation of the puppet as a function of the rotation of the hand, so that it can turn 360°.

3.5 and 1.05 are values I derived from the minimum and maximum hand rotation. These values may need to be adjusted. The rotation is only applied if the hand has rotated significantly enough (here I put a 0.001 threshold on the rotation of the hand), to avoid unwanted rotations caused by slight hand shaking, for example. We obtain the following result :

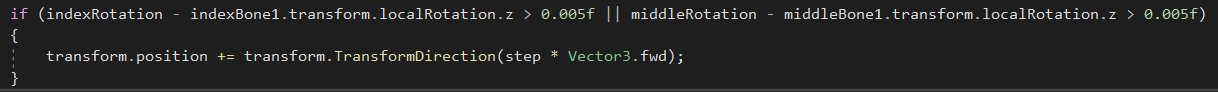
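The amplification idea can be sketched as a linear remapping followed by a dead zone. This is not the project's formula: the 3.5 and 1.05 constants are not reproduced here, and `hand_min`, `hand_max` and `dead_zone` are hypothetical calibration parameters, assumed to be in degrees.

```python
def amplify_yaw(hand_yaw: float, hand_min: float, hand_max: float) -> float:
    """Linearly map a hand yaw in [hand_min, hand_max] onto a puppet yaw in [0, 360]."""
    t = (hand_yaw - hand_min) / (hand_max - hand_min)
    t = min(max(t, 0.0), 1.0)  # clamp readings outside the calibrated range
    return 360.0 * t


class PuppetYaw:
    """Updates the puppet yaw only when the hand has rotated by more than a dead zone."""

    def __init__(self, hand_min: float, hand_max: float, dead_zone: float):
        self.hand_min = hand_min
        self.hand_max = hand_max
        self.dead_zone = dead_zone      # assumed unit: degrees of hand rotation
        self.last_hand_yaw = None       # last hand yaw that was accepted
        self.yaw = 0.0

    def update(self, hand_yaw: float) -> float:
        moved_enough = (self.last_hand_yaw is None
                        or abs(hand_yaw - self.last_hand_yaw) > self.dead_zone)
        if moved_enough:
            self.last_hand_yaw = hand_yaw
            self.yaw = amplify_yaw(hand_yaw, self.hand_min, self.hand_max)
        return self.yaw
```

Comparing against the last accepted hand yaw, rather than the previous frame, is a design choice of this sketch: a slow but deliberate rotation still gets through once it adds up to more than the dead zone.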

Step 3 : Move the puppet forward

The next step is to move the puppet forward when the user makes a walking movement. The idea is simple : each time one of the two fingers makes a movement from front to back, we consider that it’s a movement corresponding to a step, and we then move the puppet by a certain step.

A threshold is added to the detection of this movement (here, a rotation difference of at least 0.005 is needed), so that a slight shake of a finger is not counted as a step. Without realizing it, when we move our fingers, we also slightly turn our hand. The rotation of the puppet must therefore only be taken into account when no walking movement is detected. Otherwise, there are too many involuntary rotations of the puppet. The “step” value is determined empirically. It must be large enough to give the feeling that the puppet is pushing on its foot to move forward, but not so large that the puppet seems to slip on the floor. If the user makes a slow movement, the puppet moves slowly, and vice versa, which gives an intuitive way to control the speed of the puppet :

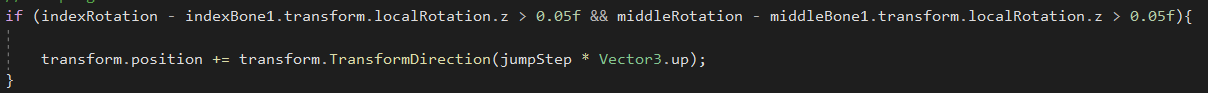
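A minimal sketch of this per-frame step detection is shown below, in plain Python. The 0.005 threshold is the value quoted above. The `Puppet` class, the step length of 0.05, the one-dimensional position and the sign convention (a decreasing angle means the finger swings backward) are assumptions made for the example.

```python
from dataclasses import dataclass

STEP_THRESHOLD = 0.005  # minimum backward rotation change, value quoted in the text


@dataclass
class Puppet:
    position: float = 0.0  # distance travelled along the facing direction
    step: float = 0.05     # hypothetical step length; the project tunes it empirically


def backward_delta(previous: float, current: float) -> float:
    """How far the finger swung from front to back since the last frame (0 if forward)."""
    return max(0.0, previous - current)


def walk_update(puppet: Puppet, prev: dict[str, float], curr: dict[str, float]) -> bool:
    """Advance the puppet once per finger that swung backward past the threshold.

    Returns True when a walking movement was detected, so the caller can
    skip the hand-driven rotation for this frame.
    """
    walking = False
    for finger in ("index", "middle"):
        if backward_delta(prev[finger], curr[finger]) >= STEP_THRESHOLD:
            puppet.position += puppet.step
            walking = True
    return walking
```

Because the update runs every frame while a finger keeps moving backward, a long or repeated swing yields more displacement than a short one. The returned flag is what lets the caller ignore hand rotation while walking.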

Step 4 : Jump

In the same way, we can also add a jumping movement when the two fingers make a movement from front to back simultaneously. The puppet then falls down thanks to gravity.

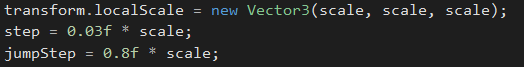
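One way to separate a jump from a step is to look at both fingers in the same frame, as in the sketch below. Reusing the 0.005 walking threshold for the jump is an assumption, and so is the hand-written gravity step: in the project the fall may simply be handled by the engine's physics.

```python
STEP_THRESHOLD = 0.005  # assumed to be the same threshold as for the walking gesture


def classify_gesture(index_back: float, middle_back: float) -> str:
    """Classify one frame from each finger's front-to-back rotation change."""
    index_moved = index_back >= STEP_THRESHOLD
    middle_moved = middle_back >= STEP_THRESHOLD
    if index_moved and middle_moved:
        return "jump"   # both fingers swing back together
    if index_moved or middle_moved:
        return "step"   # only one finger swings back
    return "none"


def fall(height: float, velocity: float, dt: float,
         gravity: float = 9.81) -> tuple[float, float]:
    """One integration step of the fall; the puppet stops when it reaches the floor."""
    velocity -= gravity * dt
    height = max(0.0, height + velocity * dt)
    if height == 0.0:
        velocity = 0.0
    return height, velocity
```

A jump would set an initial upward velocity and then call `fall` every frame until the height is back to zero.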

Step 5 : Enlarge the puppet

One way to control the speed of the puppet is to change its size. The idea is that a giant takes bigger steps, and moves faster than a small puppet. The user can modify the size of the puppet by making a pinch gesture.

I added a limit so that the user doesn’t cheat by choosing a puppet as large as the world for example, and to try to keep it realistic. The size of the step and the jump depend on the chosen size.
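The size limit and its effect on movement can be sketched as a clamp plus a scaling rule. All constants below are hypothetical, the lower bound is an addition of this sketch, and the proportional relation between size and step or jump is an assumption: the text only says that they depend on the chosen size.

```python
MIN_SCALE = 0.5   # hypothetical lower bound, not mentioned in the text
MAX_SCALE = 3.0   # hypothetical upper bound, stands in for the limit described above
BASE_STEP = 0.05  # hypothetical step length at scale 1
BASE_JUMP = 0.30  # hypothetical jump height at scale 1


def resize(scale: float, change: float) -> float:
    """Apply a grow (+) or shrink (-) request and keep the result within the limits."""
    return min(MAX_SCALE, max(MIN_SCALE, scale + change))


def movement_for(scale: float) -> tuple[float, float]:
    """Step length and jump height, assumed proportional to the puppet's size."""
    return BASE_STEP * scale, BASE_JUMP * scale
```

With these placeholder values, a puppet at scale 2 would take steps twice as long as at scale 1, which is the "giant takes bigger steps" idea.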

Step 6 : Change point of view

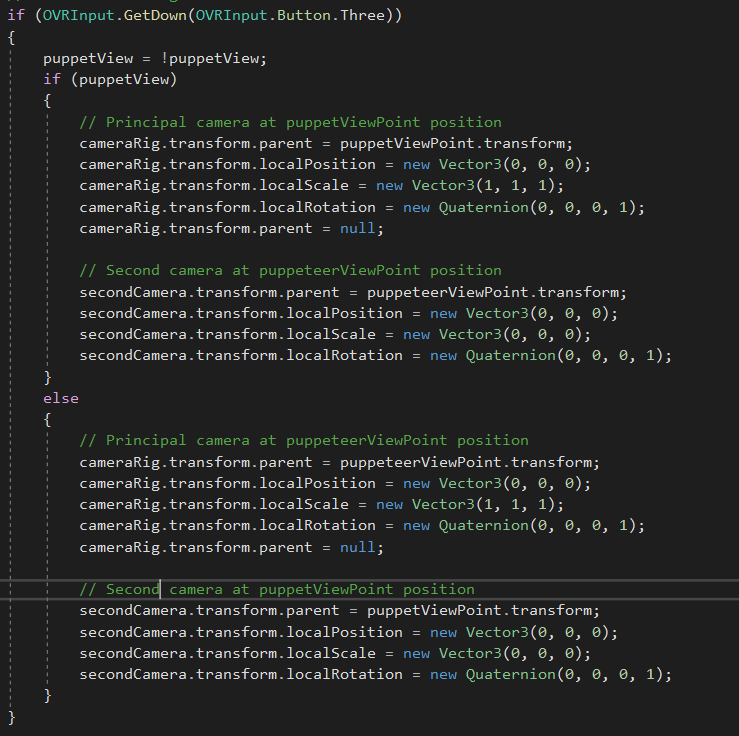

So far, we have worked with an external viewpoint (the puppeteer’s point of view), but we would like to be able to switch to the point of view of the puppet. To do so, I attached two empty GameObjects to the puppet : PuppetViewPoint, which is located at the puppet’s eye level, and PuppeteerViewPoint, which is located far from the puppet. When the user does a pinch with the left index finger, the point of view changes. We therefore place the main camera in the corresponding GameObject, PuppetViewPoint or PuppeteerViewPoint. A second view, seen by secondCamera, lets the user see the puppet view when in puppeteer mode, and vice versa. The view from this camera is rendered in a Render Texture, displayed in the canvas.

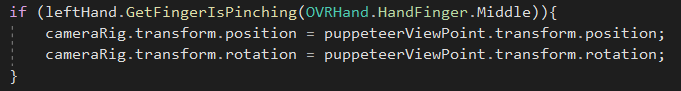
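The switching logic described for Step 6 can be sketched as a small state machine. `PuppetViewPoint` and `PuppeteerViewPoint` are the names used in the text. The `ViewState` class, the choice to start in puppeteer mode, and toggling only on the frame where the pinch starts are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class ViewState:
    mode: str = "puppeteer"          # which viewpoint the main camera uses
    index_was_pinched: bool = False  # pinch state on the previous frame

    def update(self, left_index_pinched: bool) -> None:
        """Toggle the viewpoint once per pinch, on the frame the pinch starts."""
        if left_index_pinched and not self.index_was_pinched:
            self.mode = "puppet" if self.mode == "puppeteer" else "puppeteer"
        self.index_was_pinched = left_index_pinched

    def main_anchor(self) -> str:
        """Anchor object the main camera is placed in."""
        return "PuppetViewPoint" if self.mode == "puppet" else "PuppeteerViewPoint"

    def second_anchor(self) -> str:
        """The secondary camera always shows the view the main camera is not using."""
        return "PuppeteerViewPoint" if self.mode == "puppet" else "PuppetViewPoint"
```

Detecting the start of the pinch, rather than its presence, avoids flipping the view back and forth on every frame while the fingers stay pinched.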

When the user is in puppeteer mode, the view is fixed (the camera doesn’t follow the puppet). A pinch with the left middle finger lets the user refocus the view on the puppet. Holding this pinch keeps the view constantly focused on the puppet (the camera follows the puppet), which makes the rotations easier to control, but it might induce motion sickness, which is exactly what this external view is meant to avoid.

When the user is in puppet mode, the rotation of the puppet is not done with the hand. Since our head is supposed to be the head of the puppet, it’s more logical and intuitive to turn the puppet when you turn your head. The problem is that it forces the user to stand in order to be able to do 360° rotations. I didn’t dig into this issue, but we could for example amplify the movement of the head, so that a real movement of 90° corresponds to a virtual 180° movement. I imagine this would induce motion sickness.
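The head amplification suggested here was not implemented in the project. As a rough illustration only, a constant gain of 2 would give the 90° to 180° correspondence mentioned above. The function name and the wrap to [0, 360) are choices of this sketch.

```python
def amplified_head_yaw(real_yaw_deg: float, gain: float = 2.0) -> float:
    """Virtual yaw in [0, 360) for a real head yaw, scaled by a constant gain."""
    return (real_yaw_deg * gain) % 360.0
```

With a gain of 2, turning the head from -90° to +90° would cover a full virtual turn, so the user could stay seated.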

Here is the result showing each point of view :

Evaluation

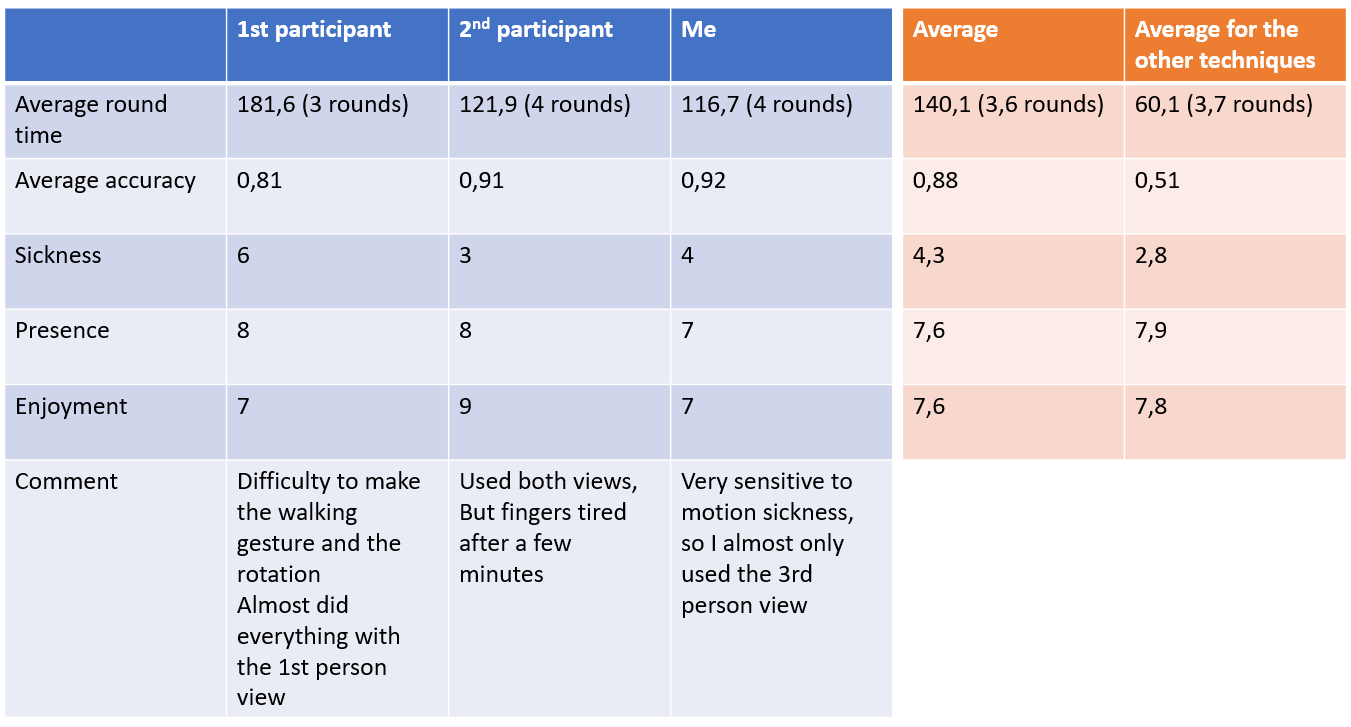

To evaluate our locomotion technique, we conducted a small user study, with 3 measurements: our own and those of two other users. We all used the same parkour course to test our locomotion techniques. The rounds are timed, and the user must collect coins on the way. As each of us implemented a different locomotion technique, we can compare our results. The variables we measure are:

- The average time per round

- The average accuracy per round (average of picked up coins)

- The average amount of finished rounds

- Simulator Sickness using the following single item question : “On a scale from 1 to 10, how much motion sickness do you perceive right now ?”

- Presence using the following single item question : “On a scale from 1 to 10, how present did you feel in the virtual world ?”

- Enjoyment using : “On a scale from 1 to 10, how much fun did you have during the task ?”
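The three performance measures in this list can be computed from per-round logs, as in the sketch below. The `Round` record and its field names are hypothetical. The sketch averages over every round passed in, while the study may have treated unfinished rounds differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Round:
    duration_s: float     # time taken for the round, in seconds
    coins_collected: int  # coins picked up during the round
    coins_total: int      # coins available on the course
    finished: bool        # whether the round was completed


def summarize(rounds: list[Round]) -> dict[str, float]:
    """Average time and accuracy per round, plus the number of finished rounds."""
    return {
        "avg_time_s": mean(r.duration_s for r in rounds),
        "avg_accuracy": mean(r.coins_collected / r.coins_total for r in rounds),
        "finished_rounds": sum(r.finished for r in rounds),
    }
```

Running `summarize` once per participant and then averaging the `finished_rounds` values would give the average amount of finished rounds. The three questionnaire items are single 1 to 10 answers and need no aggregation per round.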

The evaluation procedure is the following:

- First, welcome the participant and explain the locomotion technique to them

- Give the user 2 minutes to practice

- Expose the user to the parkour course for 10 minutes, with the instruction to be as fast and accurate as possible. If we exposed the user for less than 10 minutes, we would not be able to measure simulator sickness, as it takes some exposure time to feel the effects.

- Give the user a questionnaire

- Ask them for open comments.

Here are the results :

This locomotion technique achieves better accuracy than the others, but is much slower.

The conclusion I draw from the evaluation is that the 3rd person point of view is quite effective at avoiding motion sickness, but in this view it is more difficult to control the direction of the puppet. It’s easier to orient the puppet with the head than with the hand. Personally, I mainly used the external point of view, because it’s easier for me after a lot of exposure to it. The first participant, however, couldn’t control it and mainly used the 1st person point of view.

The second finding is that I thought the walking gesture was easy to do, but some people struggle with it and it doesn’t feel natural to them, which I hadn’t really considered at first. The second participant was able to do the movement, but their fingers were tired after a few minutes.

The first goal was to have something fun to use, and I think it is. It’s quite challenging too. The second goal was to have a way to escape motion sickness with the 3rd person point of view, but only trained people manage to use it. This technique is more suitable for small movements which do not require speed.